ROBOT SERVICE SYSTEM CAPABLE OF LEARNING AND DEDUCING

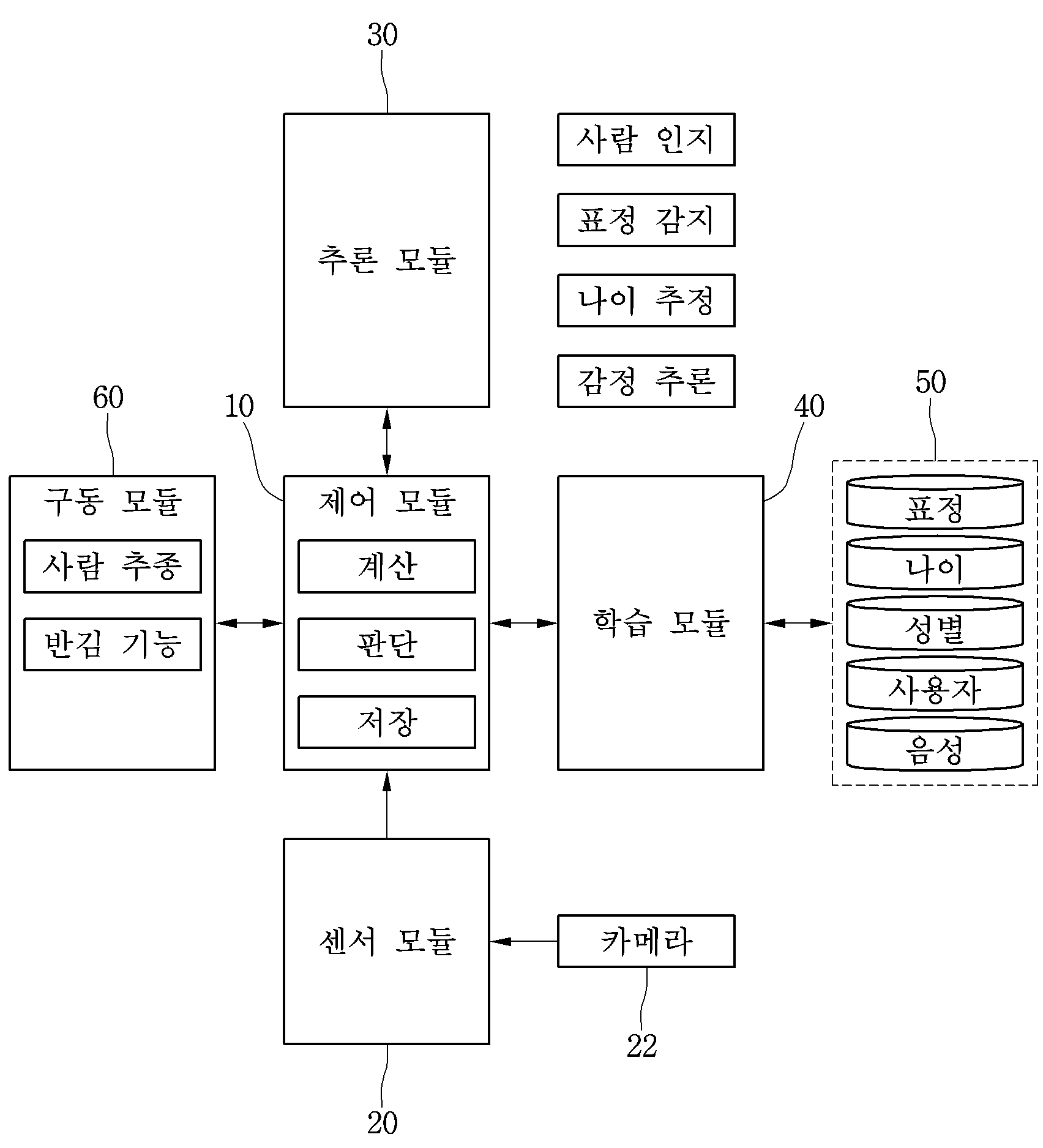

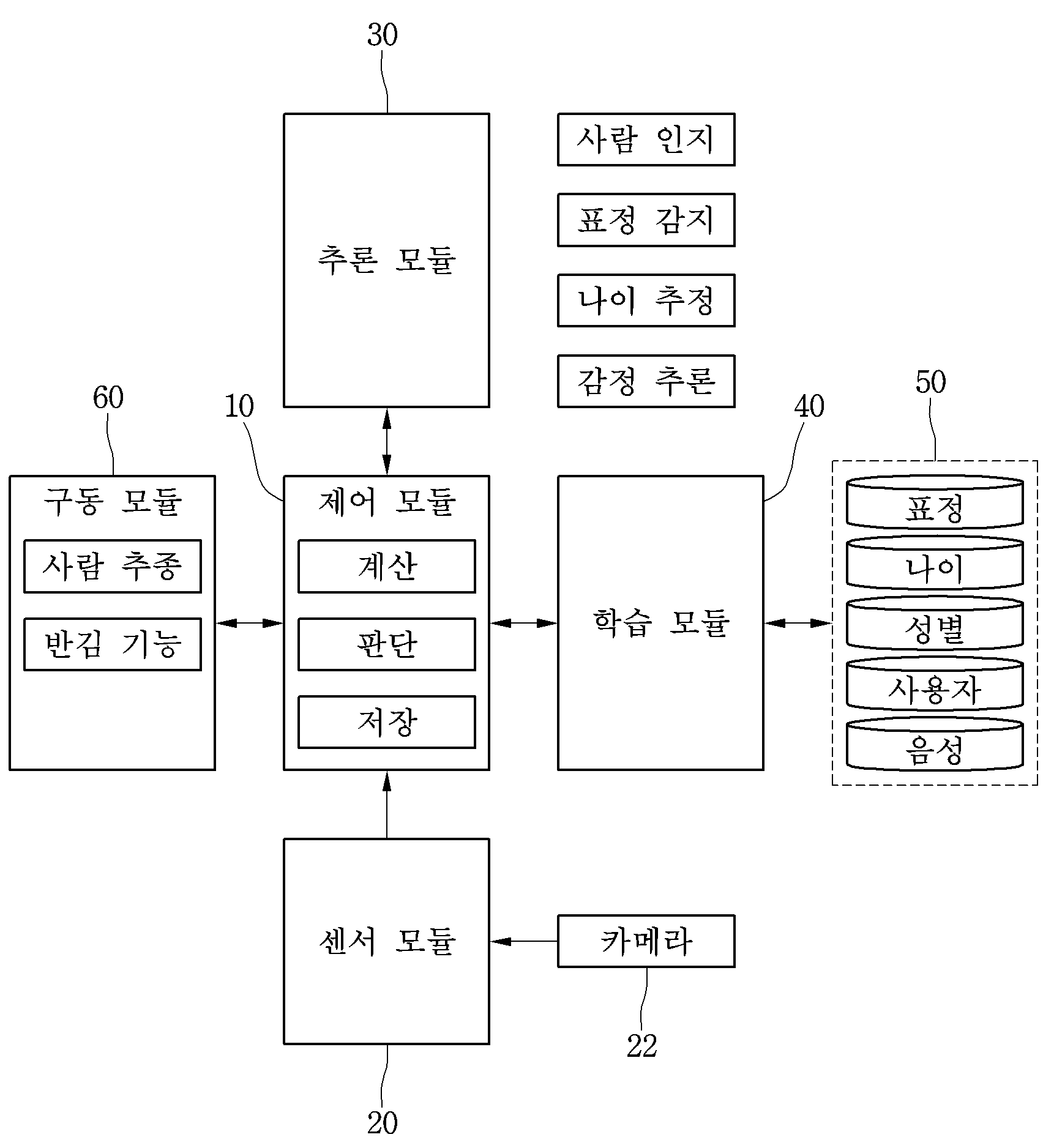

The present invention relates to a robot service system capable of learning and reasoning. Companion robots and intelligent robots are currently being developed that extract the user's state with various sensors, such as visual, auditory and tactile sensors, and generate or select behaviour accordingly. Research continues on improving the engines and functions that let such robots, often built in anthropomorphic or animal form, express more natural, emotion-based behaviour. In parallel, to support natural interaction between the robot and the user, technologies are being developed in which a control part determines the user's intention from complex sensor structures that sense the user's input and changes in the surroundings, together with studies on driving the robot's operating devices so that natural behaviour can be expressed. A conventional robot of this kind recognizes the user's face, voice, words and the like through sensors, and then performs an interaction or operation corresponding to the recognized face or words on the basis of that recognition information. However, a conventional robot selects its output from a preset database according to the processed input, so its response to the user's feelings is fixed and mechanical. Furthermore, when the user enters new data that differ from the data stored in the database, the robot cannot process them and fails to respond appropriately.
Accordingly, there is a need for a robot that learns the user's various emotions and language, grasps the user's personality or propensity, and responds in a way that suits that propensity. The present invention addresses this need: its object is to provide a robot service system capable of learning and reasoning that learns the user's emotions and responds to the user in the desired manner. To achieve these and other objects, according to one aspect of the present invention, a robot service system capable of learning and reasoning includes: a sensor module that senses a command input by a user; a learning module that learns the user's input based on information transmitted from the sensor module; a database that stores data learned by the learning module; an inference module that infers the user's action and language transmitted from the sensor module, based on the information stored in the database; and a control module that drives a predetermined operation or sound through a drive module according to the inference result of the inference module. The robot service system capable of learning and reasoning according to the present invention has the following effects. First, data learned from the commands entered by the user are stored in a database, and information is inferred from the stored data, so that the drive module can be controlled to provide a service suited to the user. Second, because the learning module keeps learning and the user information stored in the database keeps growing, the system does not always return the same output for the same input and can provide a service that adapts to the user.
Figure 1 is a schematic view showing the configuration of a robot service system capable of learning and reasoning according to an embodiment of the present invention. Figure 2 is a view explaining the process of the inference engine in the inference module according to an embodiment of the present invention. Hereinafter, the embodiments disclosed in this specification are described in detail with reference to the accompanying drawings; identical or similar components are given the same reference numerals regardless of the figure, and redundant descriptions of them are omitted. The suffixes "module" and "part" used for components in the following description are assigned or used interchangeably only for ease of drafting the specification, and do not by themselves have distinct meanings or roles. In describing the disclosed embodiments, detailed descriptions of related known technologies are omitted where they are judged to obscure the gist of the embodiments. The accompanying drawings are intended only to make the disclosed embodiments easy to understand; the technical idea disclosed in this specification is not limited by the drawings and should be understood to include all changes, equivalents and substitutes falling within the spirit and technical scope of the present invention.
The robot service system capable of learning and reasoning according to the present invention is generally applied to a service robot or the like that includes a robot body. Specifically, the robot body is provided with an image sensor for sensing the state of the user and the surroundings, and with a display unit that performs touch input and output simultaneously. As shown in Figure 1, the robot service system capable of learning and reasoning according to an embodiment of the present invention includes a control module (10), a sensor module (20), an inference module (30), a learning module (40), a database (50) and a drive module (60). The control module (10) controls the overall operation of the robot service system. It processes the signals, data, information and the like input to or output from the components, or drives those components, to provide the information or functions needed to operate the robot service system. The control module (10) is connected to the peripheral sensor module (20), inference module (30), learning module (40), database (50) and drive module (60), and controls the system so that, after the user and the surroundings are sensed, the result is learned and inferred and the robot is driven accordingly. The sensor module (20) is usually disposed on the outside of the robot body and includes sensors for sensing the user, such as the distance to the user and operation commands, including an image sensor for sensing images and a voice sensor for sensing sound. In the present embodiment, the sensor module (20) is defined to cover the camera (22) mounted on the body together with the sensors that sense the user's voice, motion and image. That is, the sensor module (20) senses motion through the camera (22), detects objects, extracts patterns and feature points from the video and audio information, and transmits them to the control module (10).
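The module arrangement of Figure 1 can be pictured with a minimal Python sketch. It is a hypothetical illustration, not the patented implementation: the class names, the `SensorReading` fields and the nearest-example inference rule are all assumptions chosen only to show how the control module (10) routes sensed data to learning, inference and driving.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class SensorReading:
    """Feature points extracted by the sensor module (20) (field names assumed)."""
    features: List[float]
    source: str  # e.g. "camera" or "microphone" (hypothetical labels)


@dataclass
class Database:
    """Stand-in for the database (50): label -> list of learned feature vectors."""
    stores: Dict[str, List[List[float]]] = field(default_factory=dict)

    def save(self, label: str, features: List[float]) -> None:
        self.stores.setdefault(label, []).append(features)


def nearest_label(reading: SensorReading, db: Database) -> Optional[str]:
    """Hypothetical inference module (30): the nearest stored example wins."""
    best, best_d = None, float("inf")
    for label, examples in db.stores.items():
        for ex in examples:
            d = sum((a - b) ** 2 for a, b in zip(ex, reading.features))
            if d < best_d:
                best, best_d = label, d
    return best


class ControlModule:
    """Stand-in for the control module (10) wiring modules (20) to (60)."""

    def __init__(self, db: Database,
                 infer: Callable[[SensorReading, Database], Optional[str]],
                 drive: Callable[[str], None]) -> None:
        self.db = db        # database (50)
        self.infer = infer  # inference module (30)
        self.drive = drive  # drive module (60)

    def learn(self, reading: SensorReading, label: str) -> None:
        # The learning module (40) is reduced here to storing a labelled example.
        self.db.save(label, reading.features)

    def handle(self, reading: SensorReading) -> Optional[str]:
        result = self.infer(reading, self.db)
        if result is not None:
            self.drive(result)  # forward the inference result for driving
        return result
```

For example, after `learn(SensorReading([0.0, 0.0], "camera"), "owner")`, a nearby reading such as `[0.2, 0.1]` is inferred as `"owner"`, and that label is passed to the drive callback. Passing the inference and drive steps in as callables keeps the sketch neutral about how the real modules work.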
The inference module (30) receives, through the control module (10), the data stored in the database (50) that are needed for human classification, expression sensing, age estimation and emotion inference, and performs these functions. The process of the inference module (30) is described below. The learning module (40) receives information from the control module (10); this information includes the information extracted by the sensor module (20). From the information received from the control module (10), the learning module (40) learns the user's expression, age and sex and stores the result in the database (50). The pattern and feature-point information is also stored in the database (50), and the database (50) provides the data needed by the inference module (30) through the control module (10). The learning module (40) [...] learns so that the robot performs a behaviour according to the user's behavioural and linguistic expressions read by the image sensor. Here, the [...] expressions include behavioural expressions, for example motions and gestures, and linguistic expressions, for example commands spoken by the user. The robot's behaviour in response to a particular indication from the user is defined by that user, so that a specific behaviour is driven in accordance with the user's instruction. That is, because the actions the robot can carry out are stored in the database (50), each behaviour can be matched to a user-specific command. The learning subjects include owner classification, expression sensing, age estimation and emotion inference; the relevant item is selected, and the specific behaviour is designated for each item.
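The user-defined mapping from commands to behaviours, designated per learning item, can be sketched as a lookup table keyed by (item, command). This is a hypothetical illustration: the item names mirror the four learning subjects in the text, while the command and behaviour strings are assumptions.

```python
from typing import Dict, Optional, Tuple

# The four learning subjects named in the description (spelling assumed).
ITEMS = ("owner", "expression", "age", "emotion")


class BehaviourTable:
    """Maps (learning item, user command) -> robot behaviour.

    Stands in for the behaviour records kept in the database (50);
    command and behaviour names are illustrative assumptions.
    """

    def __init__(self) -> None:
        self._table: Dict[Tuple[str, str], str] = {}

    def define(self, item: str, command: str, behaviour: str) -> None:
        if item not in ITEMS:
            raise ValueError(f"unknown learning item: {item}")
        # A later definition by the user overwrites the earlier one.
        self._table[(item, command)] = behaviour

    def lookup(self, item: str, command: str) -> Optional[str]:
        # None means no user-specific behaviour has been designated.
        return self._table.get((item, command))
```

Keying on the pair rather than on the command alone lets the same command trigger different behaviours under different items, which is one way to read "the specific behaviour is designated for each item".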
In one example, when a user first registers as the owner by inputting his or her voice, the learning module (40) recognizes the user as the owner and saves the owner's voice in the database (50), so that the robot can afterwards detect the owner from the user's voice and moving images. The drive module (60) is operated by commands issued by the control module (10) on the basis of the determinations of the peripheral modules (20, 30, 40). It includes a driving unit that performs functions such as operating the travel motor so that the robot looks at or follows a person, or expressing joy when it sees or hears the owner or a person it has met before. For example, to present music or sound effects, a human face or character shape, or visible light, the robot system is provided with a speaker for presenting sound, a display part for presenting a human face or character shape, LED lamps for display, and the like. In addition, the display is provided with a touch input function as a representation part (153), and can provide a UI screen for guiding the user or display a human-shaped character that makes emotional expressions suited to the dialog with the user. Alternatively, according to a signal from the control module (10), the behavioural information stored in the database (50) is used so that the robot body represents a specific action or gesture (including a wide range of body movements such as moving the robot's face or arms, rocking the body, or dancing) or travels. For travelling and for the operative expressions of the robot body, a drive section including wheels and various other components is installed.
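Owner registration by voice can be sketched as storing one voice feature vector per owner and identifying later speakers by cosine similarity against a threshold. This is an assumption-laden illustration: the text does not say how voices are encoded or compared, so the feature vectors, the cosine measure and the `threshold` value are all hypothetical.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class OwnerRegistry:
    """Hypothetical owner registration: one voice feature vector per owner."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold  # assumed acceptance threshold
        self.owners: Dict[str, Vector] = {}

    def register(self, name: str, voice_features: Vector) -> None:
        # Initial registration: the user's voice is saved as the owner's.
        self.owners[name] = voice_features

    def identify(self, voice_features: Vector) -> Optional[str]:
        # Return the best-matching owner, or None if nobody reaches the threshold.
        best_name, best_score = None, self.threshold
        for name, ref in self.owners.items():
            score = cosine(ref, voice_features)
            if score >= best_score:
                best_name, best_score = name, score
        return best_name
```

A recognised owner could then be forwarded to the drive module (60) as a cue for the "expressing joy" behaviour; an unrecognised voice returns `None`.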
Alternatively, using the situation and emotion information provided by the peripheral modules (20, 30, 40), a recommended conversation topic and information about the language in use, the control module (10) manages the conversation with the interactive information stored in the database (50) when it judges that the user is engaged in a dialog. The database (50) stores the information learned by the learning module (40), such as the user's sensed expressions, estimated age, estimated sex, user information and the user's voice. Therefore, the inference module (30) performs human classification, expression sensing, age estimation and emotion inference on the video and audio information obtained from the sensor module (20), using the information stored in the database (50). Hereinafter, the process by which the system operates is described. First, the sensor module (20) photographs the user's face and body with the camera (22), extracts feature points and patterns from the obtained image, and transmits this information to the control module (10). The control module (10) divides the transmitted information into information for learning and information for driving. Information for learning is sent to the learning module (40), which learns it and stores the data in the database (50). For driving the robot, the control module (10) evaluates the feature points and patterns and, if a matching stored item exists, transfers it to the drive module (60) so that the corresponding action is driven. The control module (10) performs this evaluation according to the inference module (30), which infers the person, expression, age and emotion. The database (50) stores the information required for this inference.
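The routing step described above, in which the control module (10) splits incoming information into a learning path and a driving path, can be sketched as follows. The `purpose` tag, the string pattern keys and the dictionary database are simplifying assumptions; the real system compares feature points and patterns rather than exact strings.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Message:
    purpose: str                 # "learn" or "drive" (assumed tags)
    pattern: str                 # extracted pattern / feature-point key (simplified)
    label: Optional[str] = None  # required only on the learning path


class Controller:
    """Sketch of the routing performed by the control module (10)."""

    def __init__(self) -> None:
        self.database: Dict[str, str] = {}  # pattern -> stored item, database (50)
        self.drive_log: List[str] = []      # commands sent to drive module (60)

    def route(self, msg: Message) -> Optional[str]:
        if msg.purpose == "learn":
            # Learning path: learning module (40) stores the data in database (50).
            if msg.label is None:
                raise ValueError("learning messages need a label")
            self.database[msg.pattern] = msg.label
            return None
        # Driving path: only a stored match is forwarded to the drive module.
        item = self.database.get(msg.pattern)
        if item is not None:
            self.drive_log.append(item)
        return item
```

Unknown patterns on the driving path produce no drive command, which matches the text's condition that an action is driven only "if a matching stored item exists".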
Hereinafter, the process of reasoning with the information received from the database (50) is described. Figure 2 is a view explaining the process of the inference engine in the inference module according to an embodiment of the present invention. Referring to Figure 2, the inference module (30) includes a human classifier (31), an expression classifier (32), an age classifier (33) and an emotion classifier (34). Correspondingly, the database (50) includes a human DB (51), an expression DB (52), an age DB (53) and an emotion DB (54), which provide the data for each classifier of the inference module (30). In the following, time-series classification refers to inference over a moving image, that is, a series of successive moments photographed by the camera (22), and discrete classification refers to inference from a photograph of one particular instant captured by the camera (22). The human classifier (31) is a time-series classifier that classifies a user as the owner or as another person. Learning data for inferring whether a person is the owner are present in the database (50). However, this learning data alone does not add new owners; registering various owners requires a learning process by the learning module (40). For owner registration, time-series data from the input video are labeled with the person's identity as owner and stored in the human DB (51).
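The difference between discrete classification (one photograph of a particular instant) and time-series classification (successive frames of a moving image) can be illustrated with a small sketch. The text does not specify the time-series model, so majority voting over per-frame labels is used here purely as a stand-in; `per_frame` is a hypothetical single-frame classifier supplied by the caller.

```python
from collections import Counter
from typing import Callable, Sequence, TypeVar

Frame = TypeVar("Frame")


def classify_discrete(frame: Frame, per_frame: Callable[[Frame], str]) -> str:
    """Discrete classification: one photograph of a particular instant."""
    return per_frame(frame)


def classify_time_series(frames: Sequence[Frame],
                         per_frame: Callable[[Frame], str]) -> str:
    """Time-series classification over successive frames of a moving image.

    Majority voting over per-frame labels is only a stand-in for the
    unspecified time-series model; ties go to the label seen first.
    """
    if not frames:
        raise ValueError("need at least one frame")
    votes = Counter(per_frame(f) for f in frames)
    return votes.most_common(1)[0][0]
```

Under this reading, the human classifier (31) and emotion classifier (34) would call the time-series function, while the expression classifier (32) and age classifier (33) would call the discrete one.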
That is, when the learning module (40) learns and a specific person is recognized as an owner, the resulting data are stored in the human DB (51). Various owners may be labeled and stored in the human DB (51); for each stored owner, a weight based on the number of times of use is stored together with the owner's data, and these weights may be applied to the classification result. Owner inference uses a time-series classification model, and each time an inference is made, the data are stored in the human DB (51) and learned. When video is input, the classification result of each inference and the feature points of each person are stored. For example, even when the data for authenticating the owner cover only the owner's face, the owner can be inferred by combining the pre-learned face data with other characteristics such as facial motion. The expression classifier (32) is a discrete classifier for sensing the user's facial expression. Initially, it uses data collected in advance in the expression DB (52). The expression DB (52) stores the expressions learned from these data as discrete values, labeled with the emotions they express, such as sadness. The age classifier (33) is a discrete classifier used for age estimation. Learning data for gender and age classification, collected in advance, are held in the age DB (53), in which the gender and age of the video data used are stored as discrete values.
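The usage-count weighting in the human DB (51) can be sketched as a similarity score multiplied by a weight that grows with each owner's share of past recognitions. Both the inverse-distance similarity and the weight formula `1 + use_count / total` are hypothetical choices; the text states only that weights based on the number of times of use are stored and applied to the classification result.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class OwnerEntry:
    features: List[float]  # stored feature points of this owner
    use_count: int = 0     # number of times this owner has been recognised


def similarity(a: List[float], b: List[float]) -> float:
    # Inverse-distance similarity in (0, 1]; an assumed choice.
    return 1.0 / (1.0 + math.dist(a, b))


def classify_owner(human_db: Dict[str, OwnerEntry], query: List[float],
                   min_score: float = 0.5) -> Optional[str]:
    """Weighted owner classification over a stand-in for the human DB (51)."""
    total = sum(e.use_count for e in human_db.values()) or 1
    best, best_score = None, min_score
    for name, entry in human_db.items():
        # Weight grows with the owner's share of past uses (hypothetical formula).
        weight = 1.0 + entry.use_count / total
        score = similarity(entry.features, query) * weight
        if score > best_score:
            best, best_score = name, score
    if best is not None:
        human_db[best].use_count += 1  # the recognition itself is counted
    return best
```

For a query equidistant from two stored owners, the owner recognised more often wins, and each successful recognition increases that owner's count for later inferences.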
Whenever the image of a new user is input, the inference module (30) characterizes it, infers the age, and stores the resulting age label together with the image features in the age DB (53). For example, the ages of the owner and of other people are inferred by classification and stored in the age DB (53) together with the extracted feature points, so that when the robot encounters the same person again, this information can serve as the basis for a representation (for example, the function of expressing joy on seeing or hearing that person). The emotion classifier (34) uses a time-series classification model for emotion inference. Initially, pre-collected data for emotions such as sadness and fear are present in the emotion DB (54). The data used for emotion inference are configured as time-series data and labeled with emotions. When the user's emotion is inferred, the newly learned emotion is added to and stored in the database (50). The present invention can be applied to various service robots. For example, it can be applied to a guidance robot that offers customized guidance through dialog in an exhibition hall, a large mart or a department store; an educational robot that provides interactive learning content and can conduct self-directed learning and learning evaluation; a robot that displays drug information in a pharmacy; a silver robot that serves as a companion and conversation partner for the elderly in medical institutions or nursing homes; a kids robot that plays and talks with children in kindergartens, daycare centers or orphanages; and a personalized, optimized interface or office/home management robot at home or in the office.
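The re-encounter behaviour built on the age DB (53) can be sketched as storing (feature points, inferred age label) pairs and checking each new observation against them. The Euclidean match radius and the returned representation strings are assumptions introduced only for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AgeDB:
    """Stand-in for the age DB (53): (feature points, inferred age label)."""
    records: List[Tuple[List[float], str]] = field(default_factory=list)
    match_radius: float = 0.5  # assumed tolerance for "same person"

    def find(self, features: List[float]) -> Optional[str]:
        # Return the stored age label of a previously seen person, if any.
        for stored, age_label in self.records:
            if math.dist(stored, features) <= self.match_radius:
                return age_label
        return None

    def observe(self, features: List[float], inferred_age: str) -> str:
        """Return a hypothetical representation for the drive module (60)."""
        known = self.find(features)
        if known is not None:
            return f"rejoice ({known})"  # person encountered again
        self.records.append((features, inferred_age))
        return "greet new person"
```

Only first encounters are appended, so repeated sightings of the same person reuse the stored age label instead of growing the DB.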
The present invention relates to a robot service system capable of learning and deducing. According to an embodiment of the present invention, the robot service system capable of learning and deducing comprises: a sensor module sensing a command input by a user; a learning module learning the input of the user based on information inputted through the sensor module; a database storing information learned by the learning module; a deduction module deducing a behavior and a language of a user input through the sensor module based on information stored in the database; and a control module driving an operation or sound set through a driving module in accordance with a deduction result of the deduction module. COPYRIGHT KIPO 2016
1. A robot service system capable of learning and reasoning, comprising: a sensor module that senses a command input by a user; a learning module that learns the user's input based on information transmitted from the sensor module; a database that stores data learned by the learning module; an inference module that infers the user's action and language transmitted from the sensor module, based on the information stored in the database; and a control module that drives a predetermined operation or sound through a drive module according to the inference result of the inference module.
2. The robot service system capable of learning and reasoning according to Claim 1, wherein the sensor module senses the user's voice, motion and image through a camera.
3. The robot service system capable of learning and reasoning according to Claim 2, wherein the learning module learns owner recognition, expression sensing, age information and emotion information from the user's voice, motion and image received from the sensor module, and stores them in the database.
4. The robot service system capable of learning and reasoning according to Claim 3, wherein the database includes: a human DB storing user information and the data for human recognition and owner information; an expression DB storing data on human facial expressions; an age DB storing data on the user's age and gender; and an emotion DB storing data on the user's emotions.
5. The robot service system capable of learning and reasoning according to Claim 4, wherein the inference module includes: a human classifier that receives learning data from the human DB and infers the user's characteristics to classify the user as the owner or another person; an expression classifier that receives learning data from the expression DB and senses the user's facial expression; an age classifier that receives learning data from the age DB and estimates the user's age and gender; and an emotion classifier that receives learning data from the emotion DB and estimates the user's feelings.
6. The robot service system capable of learning and reasoning according to Claim 5, wherein the human classifier and the emotion classifier infer the user's action and language using a moving image obtained through the sensor module.
7. The robot service system capable of learning and reasoning according to Claim 5, wherein the age classifier and the expression classifier infer the user's action and language from a photograph of a particular moment captured through the sensor module.
8. The robot service system capable of learning and reasoning according to Claim 5, wherein the human classifier calculates and assigns weights to the characteristic data of each user in consideration of the number of times of use stored in the human DB.
9. The robot service system capable of learning and reasoning according to Claim 1, wherein the drive module is driven to output music or sound effects, to represent a specific action or gesture, to travel, or to represent language.
10. The robot service system capable of learning and reasoning according to Claim 1, wherein the learning module [...] learns so that the robot performs a behaviour according to the user's behavioural and linguistic expressions read by the image sensor.