AUTHENTICATION SYSTEM AND AUTHENTICATION METHOD

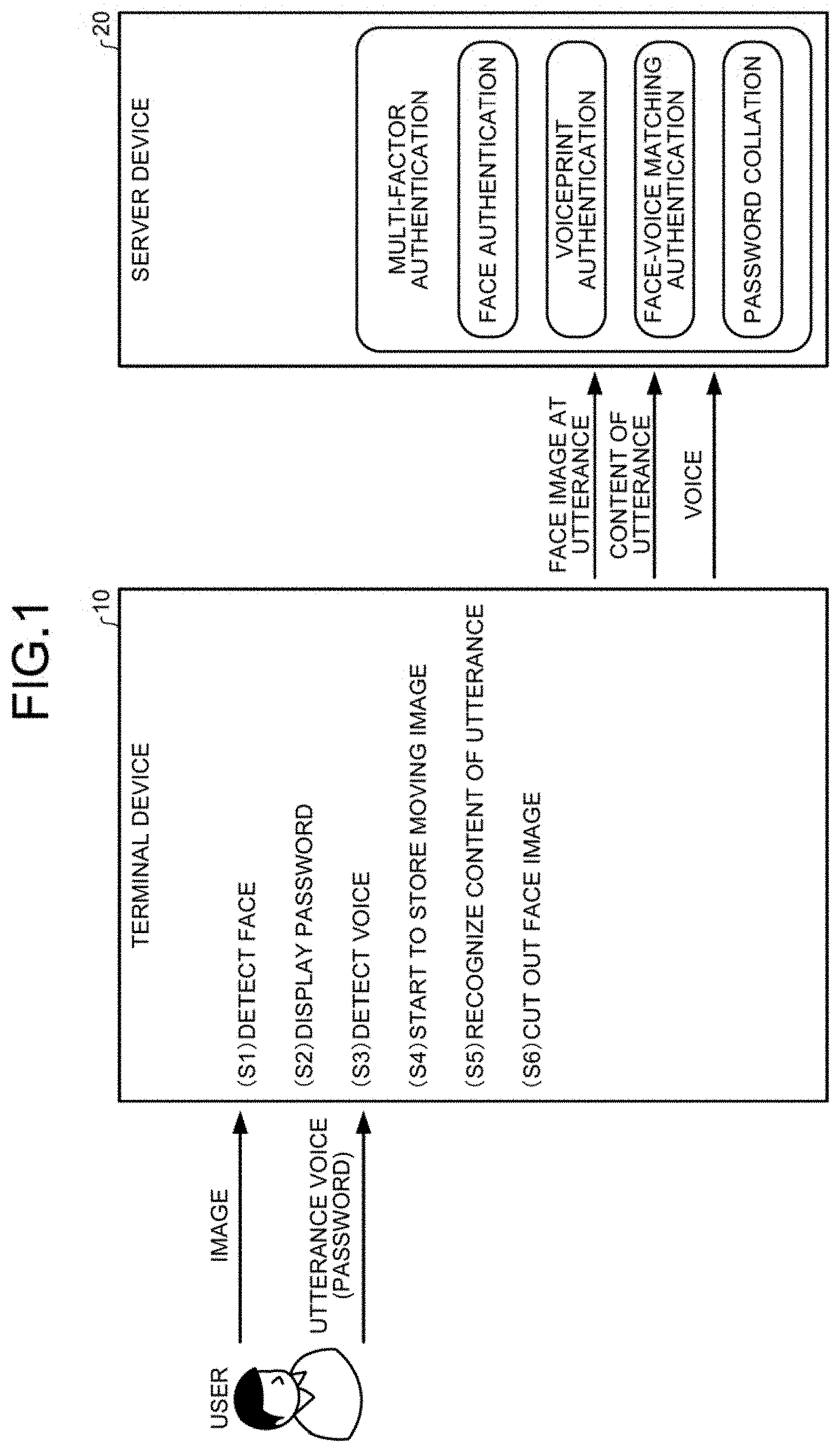

The present application is a continuation of, and claims priority to, International application PCT/JP2020/010452, filed Mar. 11, 2020, which claims priority to JP 2019-050735, filed Mar. 19, 2019, and the entire contents of both are incorporated herein by reference.

The present disclosure relates to an authentication system and an authentication method using a plurality of types of authentication.

Conventionally, authentication of a user is performed by registering security information such as a password, biometric information, or the like in advance, and then determining whether or not a password or biometric information received from the user matches the registered information. In order to ensure higher security and authentication accuracy, one-time password authentication using a single-use password and multi-factor authentication using a plurality of types of authentication have also been adopted. Other systems may use multi-factor authentication using two or more pieces of information among biometric information, possession information, and knowledge information, or multi-factor authentication using a plurality of pieces of biometric information such as a face and a voice.

In one aspect of the present disclosure, an authentication system comprises user information acquisition circuitry configured to acquire user information of a user, the user information including image information of the user or voice information of the user; authentication information extraction circuitry configured to extract, from the user information, authentication information corresponding to a plurality of types of authentication; and authentication circuitry configured to perform an authentication procedure, using the authentication information, to authenticate the user.

Conventionally, when multi-factor authentication is used, the user is burdened with extra steps and convenience for the user is reduced.
For example, when both a password and a face image are used for authentication, the user needs to input the password and take an image of his/her face. An operation by the user is required for each factor to be used for the authentication, and therefore the operation burden on the user increases with an increase in the number of factors for the authentication. Additionally, authentication data is acquired for each factor to be used for authentication, and therefore the data amount increases with an increase in the number of factors for the authentication. The increase in the data amount may cause a serious problem when a terminal device acquires the authentication data and transmits the acquired data to a server device, and the server device performs authentication.

The inventors of the present disclosure have recognized these issues and determined that, in multi-factor authentication using a plurality of types of authentication, it is an important issue to improve efficiency in acquiring and handling the authentication data in order to reduce the burden on the user, and to enhance convenience for the user. The inventors of the present disclosure have developed technology, as will be discussed below, to address these issues.

Hereinafter, exemplary embodiments of an authentication system and an authentication method according to the present invention will be described in detail with reference to the accompanying drawings.

<Authentication System>

The terminal device 10 uses the user data not only as an extraction source of the authentication data, but also as data for controlling a process for acquiring the authentication data. Moreover, when extracting authentication data from a predetermined type of user data, the terminal device 10 can use another type of user data. Specifically, the terminal device 10 acquires the image of the user, and detects a face (S1).
The terminal device 10, having detected the face, displays a password (S2), and urges the user to utter the password. When the user starts to utter the password, the terminal device 10 detects an utterance voice (S3), and starts to store a moving image (S4) of the user. The terminal device 10 recognizes the content of utterance from the voice (S5). The terminal device 10 cuts out, from data of the moving image, a face image of a timing when the user utters each syllable included in the content of utterance (S6). The face image is cut out at each timing when the user utters each of a plurality of syllables in the content of utterance. The terminal device 10 transmits authentication data to the server device 20. The authentication data includes the face image at the time of utterance of each syllable, the content of utterance, and the voice. The server device 20 can perform multi-factor authentication including face authentication, voiceprint authentication, face-voice matching authentication, and password collation by using the authentication data received from the terminal device 10. The face authentication is performed by comparing the face image, at the time of utterance, included in the authentication data with the face image that has been registered in advance for the authentication. The voiceprint authentication is performed by comparing the voice included in the authentication data with the voice that has been registered in advance for the authentication. The face-voice matching authentication is performed by determining whether or not the shape of a mouth in the face image at the time of utterance coincides with a shape of a mouth of the recognized syllable. The password collation is performed by determining whether or not the content of utterance included in the authentication data matches the password that has been displayed on the terminal device 10. The terminal device 10 uses the face detection for controlling the display of the password. 
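
As a rough illustration of the cut-out step (S6) described above, the following Python sketch picks one frame per uttered syllable from a stored moving image and bundles it with the content of utterance and the voice. It is only a sketch under stated assumptions: the `Syllable` structure, the function names, the dictionary layout, and the idea that the voice recognizer reports a timestamp per syllable are hypothetical and are not taken from the disclosure.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Syllable:
    """A recognized syllable and its utterance time (hypothetical structure).

    time_s is measured in seconds from the start of the stored moving image.
    """
    text: str
    time_s: float


def frame_indices_for_syllables(
    syllables: Sequence[Syllable], fps: float, frame_count: int
) -> List[int]:
    """Return one frame index per syllable, clamped to the recorded range."""
    if frame_count <= 0:
        raise ValueError("the moving image contains no frames")
    indices = []
    for syl in syllables:
        idx = round(syl.time_s * fps)                # nearest frame to the timing
        idx = max(0, min(frame_count - 1, idx))      # stay inside the recording
        indices.append(idx)
    return indices


def build_authentication_data(frames, fps, syllables, voice):
    """Keep only the frames at syllable timings instead of the whole video."""
    picked = frame_indices_for_syllables(syllables, fps, len(frames))
    return {
        "content": "".join(s.text for s in syllables),  # content of utterance
        "face_images": [frames[i] for i in picked],     # one image per syllable
        "voice": voice,                                 # used for voiceprint check
    }
```

Selecting a handful of frames rather than sending every frame is one way the transmitted authentication data could stay small; an actual implementation would also have to crop the face region from each selected frame.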
The terminal device 10 uses the voice detection for controlling the storage of the moving image. This enables the user to perform input of the face image, input of the voice, and input of the password simply by uttering the displayed password while looking at the terminal device 10.

The content of utterance is recognized from the voice, and the face image at the time of utterance is cut out from the moving image data based on the recognized content of the utterance. This makes it possible to reduce the data size of the authentication data to be transmitted to the server device 20.

<Configurations of System and Devices>

The server device 20 stores therein face images and voices of users previously prepared as registration data. Upon receiving authentication data from any of the terminal devices 10, the server device 20 performs authentication by comparing the authentication data with the registration data as needed.

As shown in The communication unit 14 is a communication interface to communicate with the server device 20 and the like via the predetermined network. The memory 15 is a memory device such as a non-volatile memory. The memory 15 stores therein various programs, user data 15 The control unit 16 is a CPU (Central Processing Unit) or the like. The control unit 16 reads out a predetermined program from the memory 15 and executes the program to implement functions of a user data acquisition unit 16 The user data acquisition unit 16 The acquisition process control unit 16 When a voice has been detected as the user data after displaying the password, the acquisition process control unit 16 The authentication data extraction unit 16 When extracting authentication data from a predetermined type of user data, the authentication data extraction unit 16 The authentication data extraction unit 16 The authentication data transmission unit 16

The display 21 is a display device such as a liquid crystal display.
The input unit 22 is an input device such as a keyboard and a mouse. The communication unit 23 is a communication interface to communicate with the terminal devices 10. The memory 24 is a memory device such as a hard disk drive. The memory 24 stores therein face images and voices of users as registration data 24 The control unit 25 controls the entirety of the server device 20. The control unit 25 includes a registration processing unit 25 The registration processing unit 25 The authentication processing unit 25 The factor-based data processing unit 25 The face authentication engine performs a process of comparing the face image included in the authentication data with the face image in the registration data 24

<Description of Processing>

The terminal device 10 performs, simultaneously with display of the one-time password, monitoring of utterance, preparation for video recording, and detection of faces. Upon detecting start of utterance, the terminal device 10 starts to store a moving image with this start being a start edge of an utterance section, and starts to recognize the content of utterance through voice recognition. Thereafter, upon detecting end of utterance, the terminal device 10 ends the storage of the moving image with this end being an end edge of the utterance section, and ends the recognition of the content of utterance through voice recognition.

In Thereafter, the acquisition process control unit 16 If the acquisition process control unit 16 The factor-based data processing unit 25 The authentication processing unit 25 The factor-based data processing unit 25 When the face-voice matching authentication has been successful (step S305; Yes), the registration processing unit 25

<Modifications>

In the above description, the terminal device 10 extracts the content of utterance, a face image, and a voice from moving image data, and transmits them as authentication data to the server device 20.
However, the terminal device 10 may transmit the moving image data to the server device 20, and the server device may extract the content of utterance, a face image, and a voice from the moving image data. Thereafter, the acquisition process control unit 16 The authentication processing unit 25 The factor-based data processing unit 25 The authentication processing unit 25 The authentication processing unit 25 The factor-based data processing unit 25 When the face-voice matching authentication has been successful (step S609; Yes), the registration processing unit 25 As for the authentication data, information including factors such as a face image of the user, a voice of the user, a password that the user has uttered, and a degree of matching between the face and the voice of the user, can be used. According to the exemplary embodiment, a process related to acquisition of the authentication data can be controlled based on the user data. Specifically, the password can be displayed when a face image has been acquired as the user data, and acquisition of a moving image of the user can be started when a voice has been acquired as the user data after displaying the password. According to the exemplary embodiment, when authentication data is extracted from a predetermined type of user data, another type of user data can be used. Specifically, the content of utterance can be recognized from the voice acquired as the user data, and the face image can be extracted from the image acquired as the user data, based on the content and timing of utterance. According to the exemplary embodiment, the terminal device can acquire user data, and the server device communicable with the terminal device can perform authentication. The constituent elements described in the above embodiments are conceptually functional constituent elements, and thus may not be necessarily configured as physical constituent elements illustrated in the drawings. 
That is, distributed or integrated forms of each device are not limited to the forms illustrated in the drawings, and all or some of the forms may be distributed or integrated functionally or physically in any unit depending on various loads, use statuses, or the like. For example, password collation and face-voice matching authentication may be performed by the terminal device 10, and authentication data may be transmitted to the server device 20 on the condition that the password collation and the face-voice matching authentication have been successful. In this case, the data used for the password collation and the face-voice matching authentication may not necessarily be transmitted to the server device 20. In an exemplary embodiment, although the factor-based data processing unit including the face authentication engine, the voiceprint authentication engine, and the like is disposed inside the server device 20, the factor-based data processing unit may be disposed outside the server device 20. For example, the control unit 25 of the server device 20 may be communicably connected to an additional server so as to transmit/receive authentication information and authentication results to/from the additional server via an API (Application Programming Interface). Such an additional server may be provided for each authentication, or may be configured to perform some of a plurality of types of authentication. That is, it is possible to perform face authentication inside the server device 20 while password collation is performed in another server, for example. In the exemplary embodiment, for the sake of simplicity, the server device 20 is provided with the memory 24 as a physical memory device. However, the memory 24 may be a virtual memory device constructed in a cloud environment. Likewise, the various functions of the server device 20 can also be virtually constructed. 
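
The flexible placement of the factor-based processing described above can be pictured as a set of interchangeable checks. The Python sketch below is illustrative only: the names `FactorCheck`, `collate_password`, `authenticate`, and `all_passed`, the `face_score` field, and the 0.8 threshold in the usage example are assumptions introduced here, not elements of the disclosure. Each check could equally be a local engine or a thin wrapper around a call to an additional server.

```python
from typing import Callable, Dict, Mapping

# A factor check receives the authentication data and returns True on success.
# It may run locally or forward the data to another server (hypothetical design).
FactorCheck = Callable[[Mapping[str, object]], bool]


def collate_password(displayed: str) -> FactorCheck:
    """Build a password-collation check against the displayed password."""
    def check(auth_data: Mapping[str, object]) -> bool:
        # Succeeds only when the recognized utterance equals the displayed password.
        return auth_data.get("content") == displayed
    return check


def authenticate(
    auth_data: Mapping[str, object], checks: Dict[str, FactorCheck]
) -> Dict[str, bool]:
    """Run every configured factor check and report the result per factor."""
    return {name: bool(check(auth_data)) for name, check in checks.items()}


def all_passed(results: Mapping[str, bool]) -> bool:
    """Treat authentication as successful only if at least one factor ran
    and every factor succeeded."""
    return bool(results) and all(results.values())
```

For instance, `authenticate({"content": "1234", "face_score": 0.9}, {"password": collate_password("1234"), "face": lambda d: d.get("face_score", 0.0) >= 0.8})` reports both factors as successful; swapping the `face` entry for a function that queries a remote engine would not change the calling code.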
The user data and the authentication data described in the above exemplary embodiments are merely examples, and any data can be appropriately used. For example, the terminal device 10 may recognize whether the user wears a mask or whether the user has a hoarse voice from a cold, and notify the server device 20 of this, and then the server device 20 may perform authentication while considering presence/absence of a mask or the hoarse voice.

Processing circuitry 700 is used to control any computer-based and cloud-based control processes. Descriptions or blocks in flowcharts can be understood as representing modules, segments or portions of code which include one or more executable instructions for implementing specific logical functions or steps in the process, and alternate implementations are included within the scope of the exemplary embodiments of the present advancements in which functions can be executed out of order from that shown or discussed, including substantially concurrently or in reverse order, depending upon the functionality involved, as would be understood by those skilled in the art.

The functionality of the elements disclosed herein may be implemented using circuitry or processing circuitry which may include general purpose processors, special purpose processors, integrated circuits, ASICs (“Application Specific Integrated Circuits”), conventional circuitry and/or combinations thereof which are configured or programmed to perform the disclosed functionality. Processors are processing circuitry or circuitry as they include transistors and other circuitry therein. The processor may be a programmed processor which executes a program stored in a memory. In the disclosure, the processing circuitry, units, or means are hardware that carries out or is programmed to perform the recited functionality. The hardware may be any hardware disclosed herein or otherwise known which is programmed or configured to carry out the recited functionality.
In Further, the claimed advancements may be provided as a utility application, background daemon, or component of an operating system, or combination thereof, executing in conjunction with CPU 701 and an operating system such as Microsoft Windows, UNIX, Solaris, LINUX, Apple MAC-OS, Apple iOS and other systems known to those skilled in the art. The hardware elements in order to achieve the processing circuitry 700 may be realized by various circuitry elements. Further, each of the functions of the above described embodiments may be implemented by circuitry, which includes one or more processing circuits. A processing circuit includes a particularly programmed processor, for example, processor (CPU) 701, as shown in In Alternatively, or additionally, the CPU 701 may be implemented on an FPGA, ASIC, PLD or using discrete logic circuits, as one of ordinary skill in the art would recognize. Further, CPU 701 may be implemented as multiple processors cooperatively working in parallel to perform the instructions of the inventive processes described above. The processing circuitry 700 in The processing circuitry 700 further includes a display controller 708, such as a graphics card or graphics adaptor for interfacing with display 709, such as a monitor. An I/O interface 712 interfaces with a keyboard and/or mouse 714 as well as a touch screen panel 716 on or separate from display 709. I/O interface 712 also connects to a variety of peripherals 718. The storage controller 724 connects the storage medium disk 704 with communication bus 726, which may be an ISA, EISA, VESA, PCI, or similar, for interconnecting all of the components of the processing circuitry 700. A description of the general features and functionality of the display 709, keyboard and/or mouse 714, as well as the display controller 708, storage controller 724, network controller 706, and I/O interface 712 is omitted herein for brevity as these features are known. 
The exemplary circuit elements described in the context of the present disclosure may be replaced with other elements and structured differently than the examples provided herein. Moreover, circuitry configured to perform features described herein may be implemented in multiple circuit units (e.g., chips), or the features may be combined in circuitry on a single chipset. The functions and features described herein may also be executed by various distributed components of a system. For example, one or more processors may execute these system functions, wherein the processors are distributed across multiple components communicating in a network. The distributed components may include one or more client and server machines, which may share processing, in addition to various human interface and communication devices (e.g., display monitors, smart phones, tablets, personal digital assistants (PDAs)). The network may be a private network, such as a LAN or WAN, or may be a public network, such as the Internet. Input to the system may be received via direct user input and received remotely either in real-time or as a batch process. Additionally, some implementations may be performed on modules or hardware not identical to those described. Accordingly, other implementations are within the scope that may be claimed. In one aspect of the present disclosure, an authentication system using a plurality of types of authentication includes: a user information acquisition unit configured to acquire image information and/or sound information as user information; an authentication information extraction unit configured to extract, from the user information, authentication information corresponding to the plurality of types of authentication; and an authentication unit configured to perform authentication of a user by using the authentication information. 
In the above configuration, the authentication information extraction unit extracts, as the authentication information, information including at least one of factors that are a face image of the user, a voice of the user, a password that the user has uttered, and a degree of matching between the face and the voice of the user. In the above configuration, the authentication system further includes a process control unit configured to control a process related to acquisition of the authentication information, based on the user information. In the above configuration, when a face image has been acquired as the user information, the process control unit displays a password. In the above configuration, when a voice has been acquired as the user information after displaying the password, the process control unit starts to acquire a moving image of the user. In the above configuration, when extracting authentication information from a predetermined type of user information, the authentication information extraction unit uses another type of user information. In the above configuration, the authentication information extraction unit recognizes a content of utterance from a voice acquired as the user information, and extracts a face image from an image acquired as the user information, based on the content and timing of utterance. In the above configuration, the user information acquisition unit is included in a terminal device, and the authentication unit is included in a server device capable of communicating with the terminal device. In one aspect of the present disclosure, an authentication method using a plurality of types of authentication includes: acquiring an image and/or a voice of a user as user information; extracting, from the user information, authentication information corresponding to the plurality of types of authentication; and performing authentication of a user by using the authentication information. 
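
The process control summarized above (display a password once a face image is acquired, then start acquiring a moving image once a voice is acquired) can be sketched as a small state machine. This is a minimal sketch under assumptions: the class name, state names, event strings, and action strings are hypothetical, and the final transition on end of utterance follows the processing description given earlier rather than the configuration statements themselves.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_FACE = auto()    # no face detected yet
    WAIT_VOICE = auto()   # password is displayed, waiting for utterance
    RECORDING = auto()    # moving image is being stored
    DONE = auto()         # utterance section has ended


class AcquisitionController:
    """Hypothetical controller that reacts to detections in the user data."""

    def __init__(self) -> None:
        self.state = State.WAIT_FACE
        self.actions = []  # log of actions issued, in order

    def on_event(self, event: str) -> State:
        """Advance the state machine; events that do not fit the state are ignored."""
        if self.state is State.WAIT_FACE and event == "face_detected":
            self.actions.append("display_password")
            self.state = State.WAIT_VOICE
        elif self.state is State.WAIT_VOICE and event == "voice_started":
            self.actions.append("start_recording")
            self.state = State.RECORDING
        elif self.state is State.RECORDING and event == "voice_ended":
            self.actions.append("stop_recording")
            self.state = State.DONE
        return self.state
```

Ignoring out-of-order events (for example, a voice detected before any face) keeps the password from being recorded before it has been displayed; a real terminal would likely add timeouts and a reset path, which are omitted here.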
According to the present disclosure, it is possible to enhance convenience in an authentication system using a plurality of types of authentication.

An authentication system includes user information acquisition circuitry configured to acquire user information of a user, the user information including image information of the user or voice information of the user; authentication information extraction circuitry configured to extract, from the user information, authentication information corresponding to a plurality of types of authentication; and authentication circuitry configured to perform an authentication procedure, using the authentication information, to authenticate the user.

1. An authentication system, comprising:

user information acquisition circuitry configured to acquire user information of a user, the user information including image information of the user or voice information of the user;

authentication information extraction circuitry configured to extract, from the user information, authentication information corresponding to a plurality of types of authentication; and

authentication circuitry configured to perform an authentication procedure, using the authentication information, to authenticate the user.

2. The authentication system according to

3. The authentication system according to

4. The authentication system according to

5. The authentication system according to

6. The authentication system according to

7. The authentication system according to

8. The authentication system according to the user information acquisition circuitry is included in a terminal device, and the authentication circuitry is included in a server device capable of communicating with the terminal device.

9. An authentication method, comprising:

acquiring user information of a user, the user information including image information of the user or voice information of the user;

extracting, by processing circuitry from the user information, authentication information corresponding to a plurality of types of authentication; and

performing an authentication procedure, using the authentication information, to authenticate the user.

10. The authentication method according to

11. The authentication method according to

12. The authentication method according to

13. The authentication method according to

14. The authentication method according to

15. The authentication method according to recognizing a content of utterance from a voice acquired as the user information; and extracting a face image from an image acquired as the user information, based on the content and timing of the utterance.

16. A terminal device, comprising:

processing circuitry configured to:

acquire user information of a user, the user information including image information of the user or voice information of the user;

extract, from the user information as authentication information, information including a face image of the user, a voice of the user, a password that the user has uttered, and/or a degree of matching between the face and the voice of the user; and

transmit the authentication information to an authentication server.

17. The terminal device according to a display, wherein the processing circuitry is further configured to control the display to display a password in a case that a face image is acquired as the user information.

18. The terminal device according to

19. The terminal device according to

20. The terminal device according to recognize a content of utterance from a voice acquired as the user information; and extract a face image from an image acquired as the user information, based on the content and timing of the utterance.
