Data request servicing using multiple paths of smart network interface cards

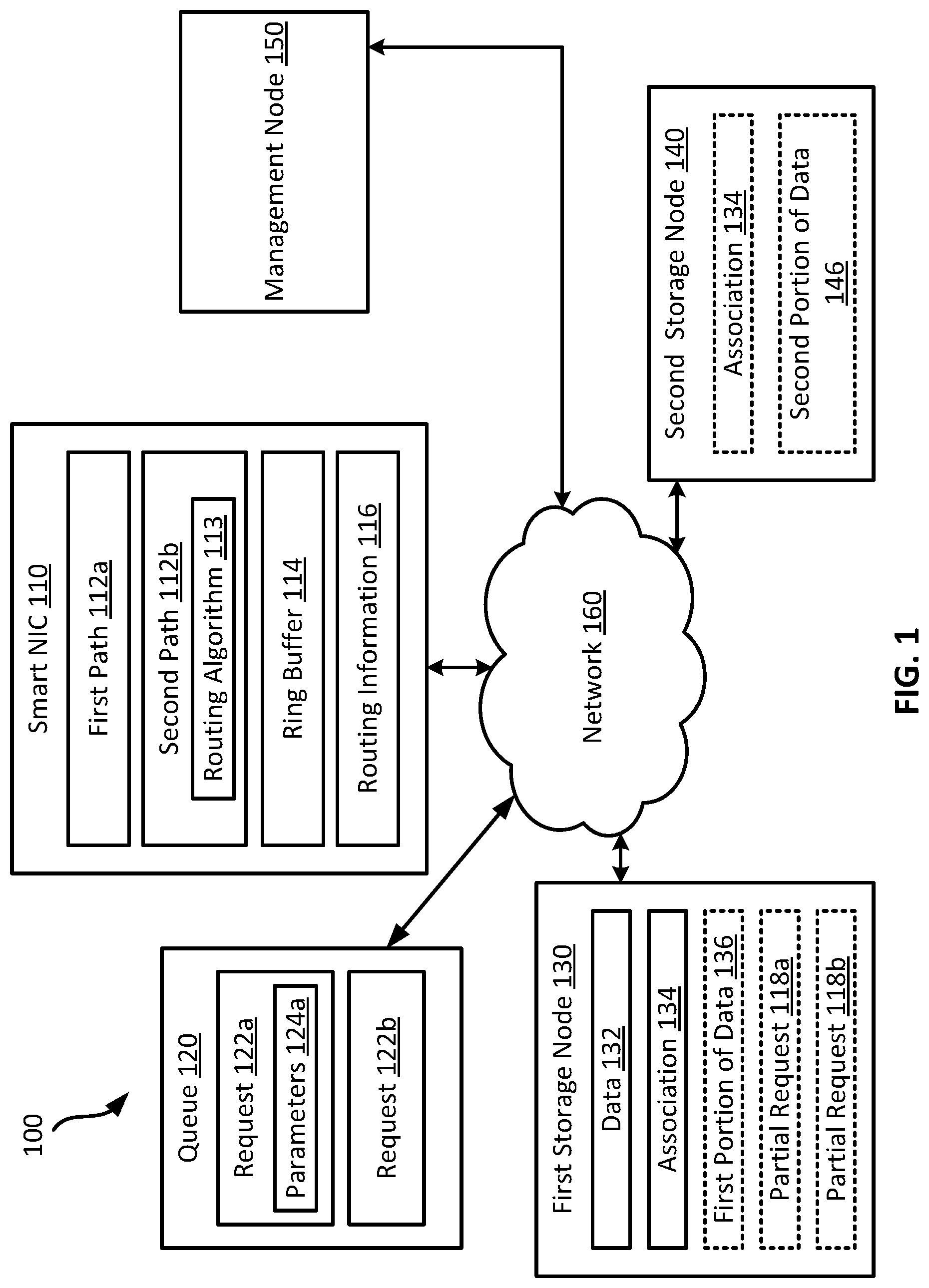

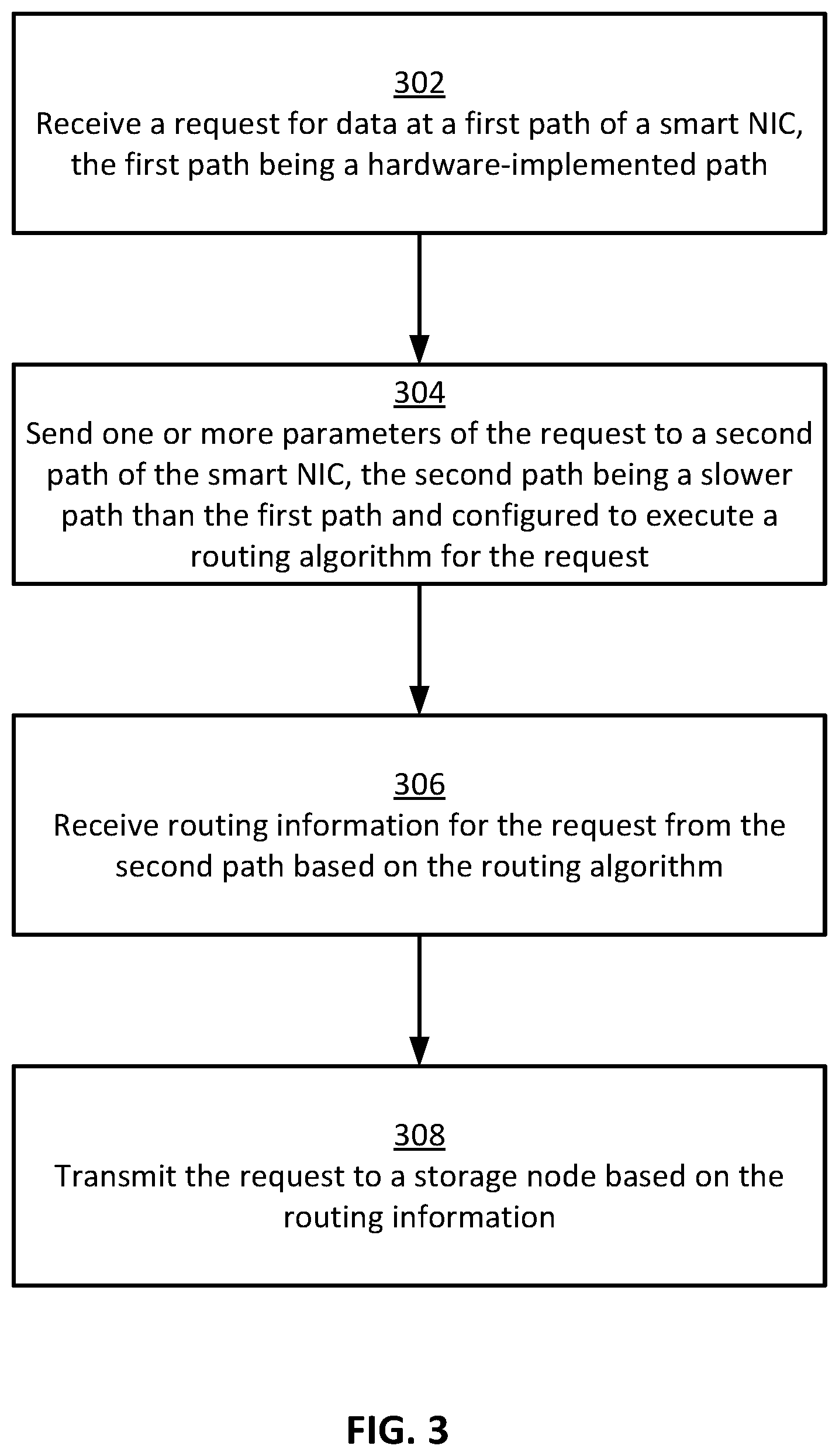

The present disclosure relates generally to smart network interface cards (NICs). More specifically, but not by way of limitation, this disclosure relates to data request servicing using multiple paths of smart NICs.

Smart network interface cards (NICs) are devices capable of offloading processes from a host processor in a computing environment. For example, a smart NIC can perform network traffic processing that is typically performed by a central processing unit (CPU). Other processes the smart NIC can perform can include load balancing, encryption, network management, and network function virtualization. Offloading functionality to a smart NIC can allow the CPU to spend more time performing application processing operations, thereby improving performance of the computing environment.

Smart network interface cards (NICs) can offload processes from a processor, such as transmitting data requests to storage nodes. A smart NIC may refer to a network interface card that is programmable. For example, a smart NIC can initially include unused computational resources that can be programmed for additional functionality. Smart NICs can include multiple paths for performing operations. One path of the smart NIC can be considered a fast path and can be a hardware-implemented path. The hardware may be a field-programmable gate array (FPGA) that can run dedicated simplified code that does not involve significant interaction with software. As a result, the hardware-implemented path may service input/output (I/O) requests quickly. Another path can be considered a slow path that runs code on an embedded processor, such as an advanced reduced instruction set computer (RISC) machine (ARM). The second path can execute any type of code, but may be an order of magnitude slower than the hardware-implemented path.
Smart NICs may use the second path, as opposed to the fast, hardware-implemented path, to service data requests since the slow path can interact with software to run more complex code. For instance, data may be sharded and distributed across multiple storage nodes of a system. Sharding code is often implemented at the application level, so the smart NIC has to interact with the applications to determine where to transmit the data requests. Since the fast path includes limited software interaction capabilities, sharding code may be too complex for the fast path, so the slow path may be used to service data requests. Virtualization code may also be too complex for the fast path. As a result, using a smart NIC to transmit data requests to storage nodes may be inefficient and time consuming.

Some examples of the present disclosure can overcome one or more of the abovementioned problems by providing a system that can use both paths of a smart NIC to improve data request servicing. For example, the system can receive a request for data at the first path of the smart NIC. The first path can be a hardware-implemented path. The system can send one or more parameters of the request to a second path of the smart NIC that is a slower path than the first path. The second path can execute a routing algorithm for the request. The routing algorithm can determine routing information for the request. The system can receive the routing information for the request at the first path from the second path based on the routing algorithm. The system can then transmit the request to a storage node based on the routing information. Since the second path executes the routing algorithm, the determination of the routing information can be accurate regardless of movement or other changes for the data because the second path can be aware of the changes. So, using both the first path and the second path to service data requests can be efficient and accurate.
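The division of work between the two paths described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the disclosed implementation: the class names (`FastPath`, `SlowPath`, `Request`, `RoutingInfo`), the fixed shard size, and the round-robin striping used as the "routing algorithm" are all hypothetical, and both paths are modeled as ordinary software objects rather than an FPGA and an embedded processor.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    client_id: str
    offset: int
    length: int
    op: str  # "read" or "write"


@dataclass(frozen=True)
class RoutingInfo:
    node: str         # storage node that should receive the request
    node_offset: int  # location of the data within that node


class SlowPath:
    """Stand-in for the slower path that runs the routing algorithm."""

    def __init__(self, shard_size, nodes):
        self.shard_size = shard_size
        self.nodes = nodes

    def route(self, client_id, offset, length):
        # Hypothetical routing algorithm: fixed-size shards striped
        # round-robin across the storage nodes.
        shard = offset // self.shard_size
        node = self.nodes[shard % len(self.nodes)]
        stripe = shard // len(self.nodes)
        node_offset = stripe * self.shard_size + offset % self.shard_size
        return RoutingInfo(node, node_offset)


class FastPath:
    """Stand-in for the hardware-implemented path: it only moves data."""

    def __init__(self, slow_path, transmit):
        self.queue = deque()      # queue of pending requests
        self.slow_path = slow_path
        self.transmit = transmit  # callable(node, request)

    def service_next(self):
        if not self.queue:
            return None
        request = self.queue.popleft()
        # Only the parameters go to the slow path, not the payload.
        info = self.slow_path.route(
            request.client_id, request.offset, request.length
        )
        # The fast path transmits the full request using the routing info.
        self.transmit(info.node, request)
        return info


sent = []
fast = FastPath(
    SlowPath(shard_size=100, nodes=["node-a", "node-b"]),
    transmit=lambda node, request: sent.append((node, request)),
)
fast.queue.append(Request("client-1", offset=250, length=10, op="read"))
print(fast.service_next())
```

In this sketch the slow path never touches the request itself, which mirrors the idea that the slower path is used only to determine routing information while the fast path handles receipt and transmission.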
In addition, the system can include a queue of requests that may allow the smart NIC to service requests at wire speed with minimal latency. For data that is sharded and distributed across multiple storage nodes of the system, the smart NIC may transmit the request to a storage node that includes a portion of the data. The smart NIC may not differentiate sharded data from data that is not sharded. Typically, requests do not cross node boundaries, so the smart NIC can transmit the full request to one storage node without splitting the request into partial requests and transmitting the partial requests to multiple storage nodes. The storage node can generate partial requests for the other portions of the data on other storage nodes and transmit the partial requests to the other storage nodes. Having the storage node handle the partial requests may minimize decision making in the hardware-implemented path and result in efficient servicing of data requests in a reduced amount of time, ultimately resulting in reduced usage of computing resources.

One particular example can involve a system with a smart NIC and a storage node. The smart NIC can receive a write request for data at a hardware-implemented path. The hardware-implemented path can poll a queue and receive the write request from the queue. The hardware-implemented path can then determine parameters associated with the write request, such as a client device identifier, an offset, and a length. The hardware-implemented path can send the parameters to a slower path that executes a routing algorithm for the parameters to determine updated parameters for the data. The updated parameters can indicate a location in the storage node that is associated with the data. The slower path can send routing information with the updated parameters for the write request to the hardware-implemented path. The hardware-implemented path can then transmit the write request to the storage node based on the routing information.
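The storage-node handling of sharded data described above, where the smart NIC forwards the full request and the receiving storage node generates partial requests for portions held elsewhere, can be sketched as follows. This is a hedged illustration only: the function names (`split_request`, `handle_at_storage_node`), the `PartialRequest` type, the fixed shard size, and the round-robin placement of shards on nodes are assumptions made for the example and are not taken from the disclosure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartialRequest:
    node: str    # storage node holding this portion of the data
    offset: int  # starting offset of the portion
    length: int  # number of bytes in the portion


def split_request(offset, length, shard_size, nodes):
    """Split [offset, offset + length) at shard boundaries.

    Assumes fixed-size shards placed round-robin on `nodes`
    (a hypothetical layout chosen for illustration).
    """
    parts = []
    end = offset + length
    while offset < end:
        shard = offset // shard_size
        shard_end = (shard + 1) * shard_size
        chunk = min(end, shard_end) - offset
        parts.append(PartialRequest(nodes[shard % len(nodes)], offset, chunk))
        offset += chunk
    return parts


def handle_at_storage_node(local_node, offset, length, shard_size, nodes):
    """Return (portions served locally, partial requests to forward)."""
    parts = split_request(offset, length, shard_size, nodes)
    local = [p for p in parts if p.node == local_node]
    forwarded = [p for p in parts if p.node != local_node]
    return local, forwarded


nodes = ["node-a", "node-b"]
local, forwarded = handle_at_storage_node("node-a", 90, 30, 100, nodes)
print(local)      # portion held by node-a
print(forwarded)  # partial request that node-a sends on to node-b
```

A request that stays inside one shard produces a single portion and nothing to forward, which matches the common case noted above in which requests do not cross node boundaries.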
By using the slow path only to determine the routing information, time for retrieving data and servicing requests using smart NICs can be reduced.

These illustrative examples are given to introduce the reader to the general subject matter discussed here and are not intended to limit the scope of the disclosed concepts. The following sections describe various additional features and examples with reference to the drawings in which like numerals indicate like elements but, like the illustrative examples, should not be used to limit the present disclosure.

In some examples, the smart NIC 110 can include a first path 112

The smart NIC 110 can receive requests 122 from the management node 150. The requests 122 can be requests for data stored in one or more storage nodes. For example, the requests 122 may be read or write requests that the management node 150 receives from a client device (not shown). The management node 150 can transmit the requests 122 to the first path 112

The first path 112

The second path 112

The second path 112

In some examples, data objects may be sharded across multiple storage nodes of the system 100. In other words, a data object may be fragmented into smaller pieces, and each piece can be stored on a different storage node. The data object may be fragmented across any number of storage nodes. In such examples, requests 122 for data may also be fragmented, and the fragmentation may happen at the smart NIC 110 or at a storage node. To illustrate, the management node 150 can transmit the request 122

Each storage node in the system 100 can maintain an association 134 between each storage node and data stored on each storage node. The management node 150 can automatically update the association 134 in real time when data is relocated or added to the system 100. So, upon the first storage node 130 receiving the request 122

The first path 112

In this example, the processing device 202 is communicatively coupled with the memory device 204.
The processing device 202 can include one processor or multiple processors. Non-limiting examples of the processing device 202 include a Field-Programmable Gate Array (FPGA), an application-specific integrated circuit (ASIC), a microprocessor, etc. The processing device 202 can execute instructions 206 stored in the memory device 204 to perform operations. The instructions 206 can include processor-specific instructions generated by a compiler or an interpreter from code written in any suitable computer-programming language, such as C, C++, C#, etc.

The memory device 204 can include one memory or multiple memories. Non-limiting examples of the memory device 204 can include electrically erasable and programmable read-only memory (EEPROM), flash memory, or any other type of non-volatile memory. At least some of the memory device 204 includes a non-transitory computer-readable medium from which the processing device 202 can read the instructions 206. The non-transitory computer-readable medium can include electronic, optical, magnetic, or other storage devices capable of providing the processing device 202 with computer-readable instructions or other program code. Examples of the non-transitory computer-readable medium can include magnetic disks, memory chips, ROM, random-access memory (RAM), an ASIC, optical storage, or any other medium from which a computer processor can read the instructions 206.

Although not shown in

The processing device 202 can execute the instructions 206 to perform operations. For example, the processing device 202 can receive a request 222 for data 232 at the first path 212 of the smart NIC 210.
The first path 212

In block 302, the processing device 202 can receive a request 222 for data 232 at a first path 212

In block 304, the processing device 202 can send one or more parameters 224 of the request 222 to a second path 212

In block 306, the processing device 202 can receive the routing information 216 for the request 222 from the second path 212

In block 308, the processing device 202 can transmit the request 222 to the storage node 230 based on the routing information 216. If the routing information 216 indicates that the storage node 230 includes a portion of the data 232 and that another storage node includes another portion of the data 232, the first path 212

The foregoing description of certain examples, including illustrated examples, has been presented only for the purpose of illustration and description and is not intended to be exhaustive or to limit the disclosure to the precise forms disclosed. Numerous modifications, adaptations, and uses thereof will be apparent to those skilled in the art without departing from the scope of the disclosure. For instance, any examples described herein can be combined with any other examples to yield further examples.

Data requests can be serviced by multiple paths of smart network interface cards (NICs). For example, a system can receive a request for data at a first path of a smart NIC. The first path can be a hardware-implemented path. The system can send one or more parameters of the request to a second path of the smart NIC. The second path can be a slower path than the first path and configured to execute a routing algorithm for the request. The system can receive routing information for the request from the second path based on the routing algorithm and transmit the request to a storage node based on the routing information.

1. A method comprising:

receiving a request for data at a first path of a smart network interface card (NIC), the first path being a hardware-implemented path; sending one or more parameters of the request to a second path of the smart NIC, the second path being a slower path than the first path and configured to execute a routing algorithm for the request; receiving routing information for the request from the second path based on the routing algorithm; and transmitting the request to a storage node based on the routing information.

2. The method of determining, based on the routing information, the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; and transmitting the request to the first storage node and the second storage node.

3. The method of determining the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; generating, from the request, a first partial request for the first portion of the data and a second partial request for the second portion of the data; and transmitting the second partial request to the second storage node.

4. The method of subsequent to transmitting the request to the storage node, determining the storage node includes the data of the request; and retrieving the data in the storage node.

5. The method of receiving the request from a queue of a plurality of requests for data operations; and sending the one or more parameters of the request to a ring buffer accessible by the second path of the smart NIC.

6. The method of performing, by the first path, a first operation associated with the first request substantially contemporaneously with a second operation for a second request, wherein the first operation comprises:

receiving the first request from the queue; and sending the first request to the second path, and wherein the second operation comprises:

receiving second routing information for the second request from the second path; removing the second request from the queue; and executing the second request based on the second routing information.

7. The method of

8. A non-transitory computer-readable medium comprising program code that is executable by a processor for causing the processor to:

receive a request for data at a first path of a smart network interface card (NIC), the first path being a hardware-implemented path; send one or more parameters of the request to a second path of the smart NIC, the second path being a slower path than the first path and configured to execute a routing algorithm for the request; receive routing information for the request from the second path based on the routing algorithm; and transmit the request to a storage node based on the routing information.

9. The non-transitory computer-readable medium of determine, based on the routing information, the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; and transmit the request to the first storage node and the second storage node.

10. The non-transitory computer-readable medium of determining the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; generating, from the request, a first partial request for the first portion of the data and a second partial request for the second portion of the data; and transmitting the second partial request to the second storage node.

11. The non-transitory computer-readable medium of subsequent to transmitting the request to the storage node, determining the storage node includes the data of the request; and retrieving the data in the storage node.

12. The non-transitory computer-readable medium of receive the request from a queue of a plurality of requests for data operations; and send the one or more parameters of the request to a ring buffer accessible by the second path of the smart NIC.

13. The non-transitory computer-readable medium of perform, by the first path, a first operation associated with the first request substantially contemporaneously with a second operation for a second request, wherein the first operation comprises:

receiving the first request from the queue; and sending the first request to the second path, and wherein the second operation comprises:

receiving second routing information for the second request from the second path; removing the second request from the queue; and executing the second request based on the second routing information.

14. The non-transitory computer-readable medium of

15. A system comprising:

a processing device; and a memory device including instructions executable by the processing device for causing the processing device to:

receive a request for data at a first path of a smart network interface card (NIC), the first path being a hardware-implemented path; send one or more parameters of the request to a second path of the smart NIC, the second path being a slower path than the first path and configured to execute a routing algorithm for the request; receive routing information for the request from the second path based on the routing algorithm; and transmit the request to a storage node based on the routing information.

16. The system of determine, based on the routing information, the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; and transmit the request to the first storage node and the second storage node.

17. The system of determining the first storage node includes a first portion of the data and a second storage node includes a second portion of the data; generating, from the request, a first partial request for the first portion of the data and a second partial request for the second portion of the data; and transmitting the second partial request to the second storage node.

18. The system of subsequent to transmitting the request to the storage node, determining the storage node includes the data of the request; and retrieving the data in the storage node.

19. The system of receive the request from a queue of a plurality of requests for data operations; and send the one or more parameters of the request to a ring buffer accessible by the second path of the smart NIC.

20. The system of perform, by the first path, a first operation associated with the first request simultaneously with a second operation for a second request, wherein the first operation comprises:

receiving the first request from the queue; and sending the first request to the second path, and wherein the second operation comprises:

receiving second routing information for the second request from the second path; removing the second request from the queue; and executing the second request based on the second routing information.


CPC classification

H04L45/123, H04L45/24, H04L49/901, H04L67/1097

IPC classification

H04L45/12, H04L45/24, H04L49/901, H04L67/1097

Non-patent literature citations

711/219

Huang et al., “AccelSDP: A Reconfigurable Accelerator for Software Data Plane Based on FPGA SmartNIC,” Electronics, vol. 10(16), Aug. 11, 2021: pp. 1-26, <https://doi.org/10.3390/electronics10161927>.

Liu et al., “Offloading Distributed Applications onto Smart NICs using iPipe,” SIGCOMM '19, Aug. 19-23, 2019: pp. 1-16.

Van Tu et al., “Accelerating Virtual Network Functions with Fast-Slow Path Architecture using eXpress Data Path,” IEEE Transactions on Network and Service Management, vol. 17(3), Jun. 5, 2020: pp. 1474-1486.