METHOD AND APPARATUS FOR DYNAMIC MEDIA STREAMING

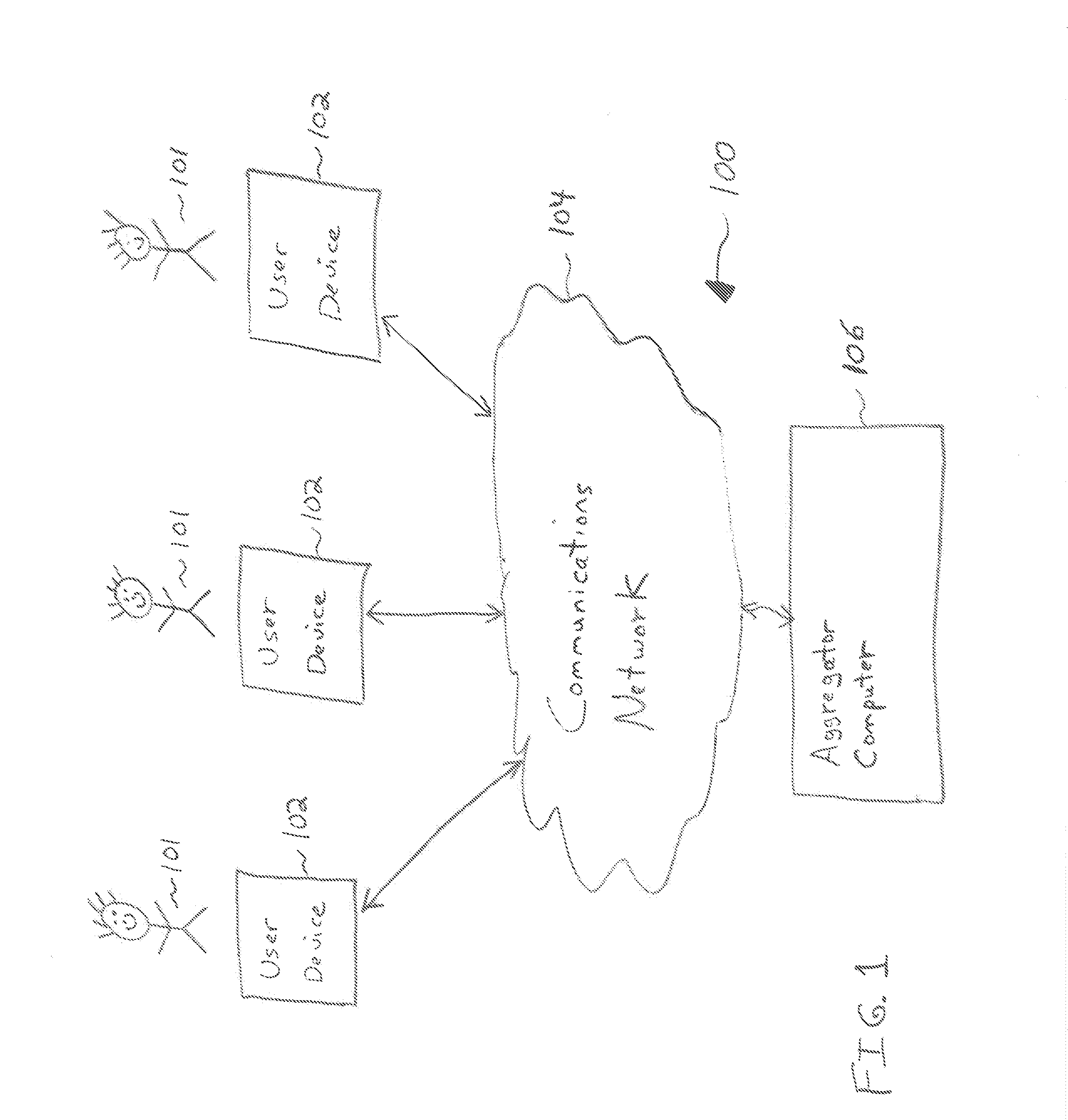

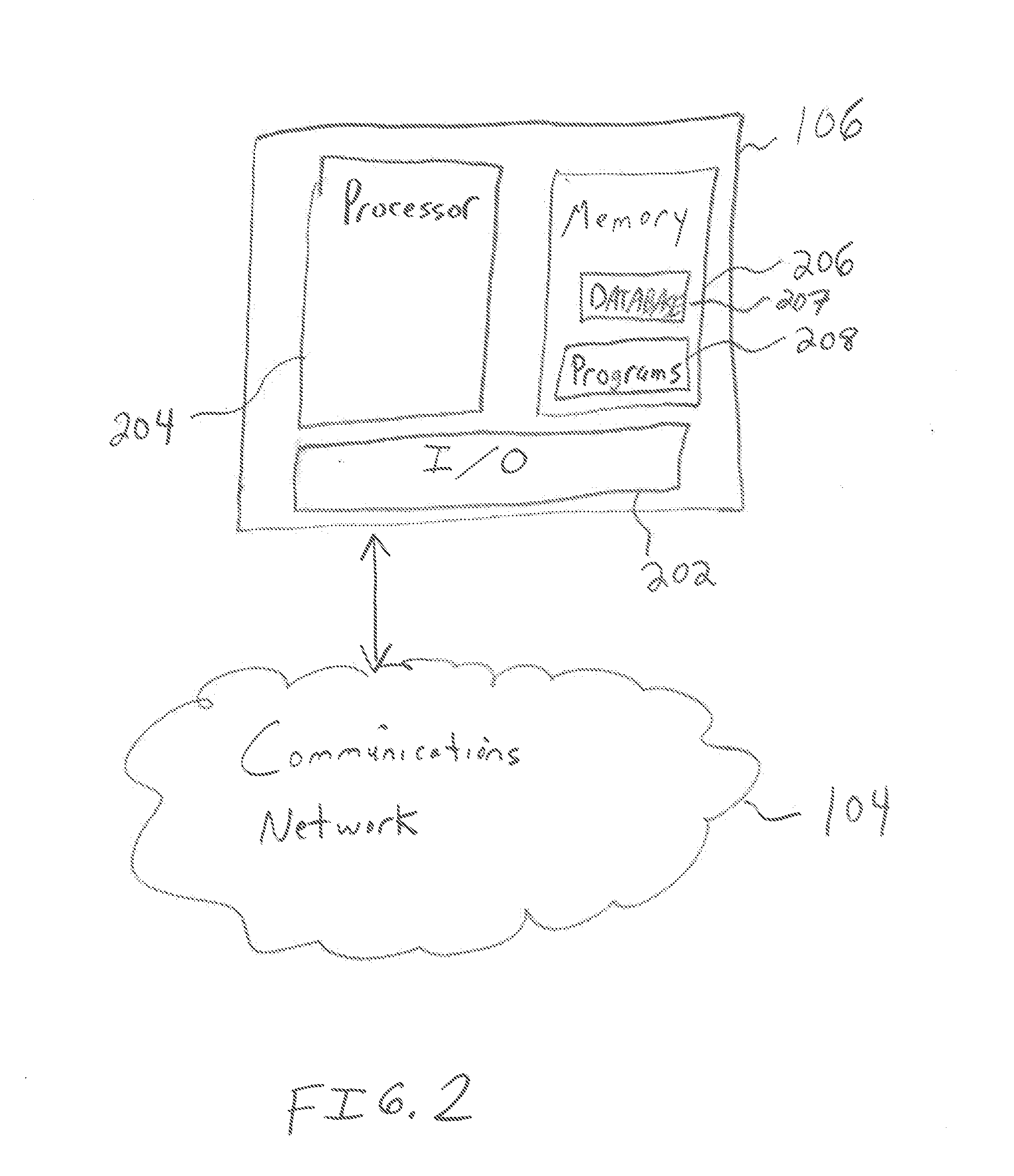

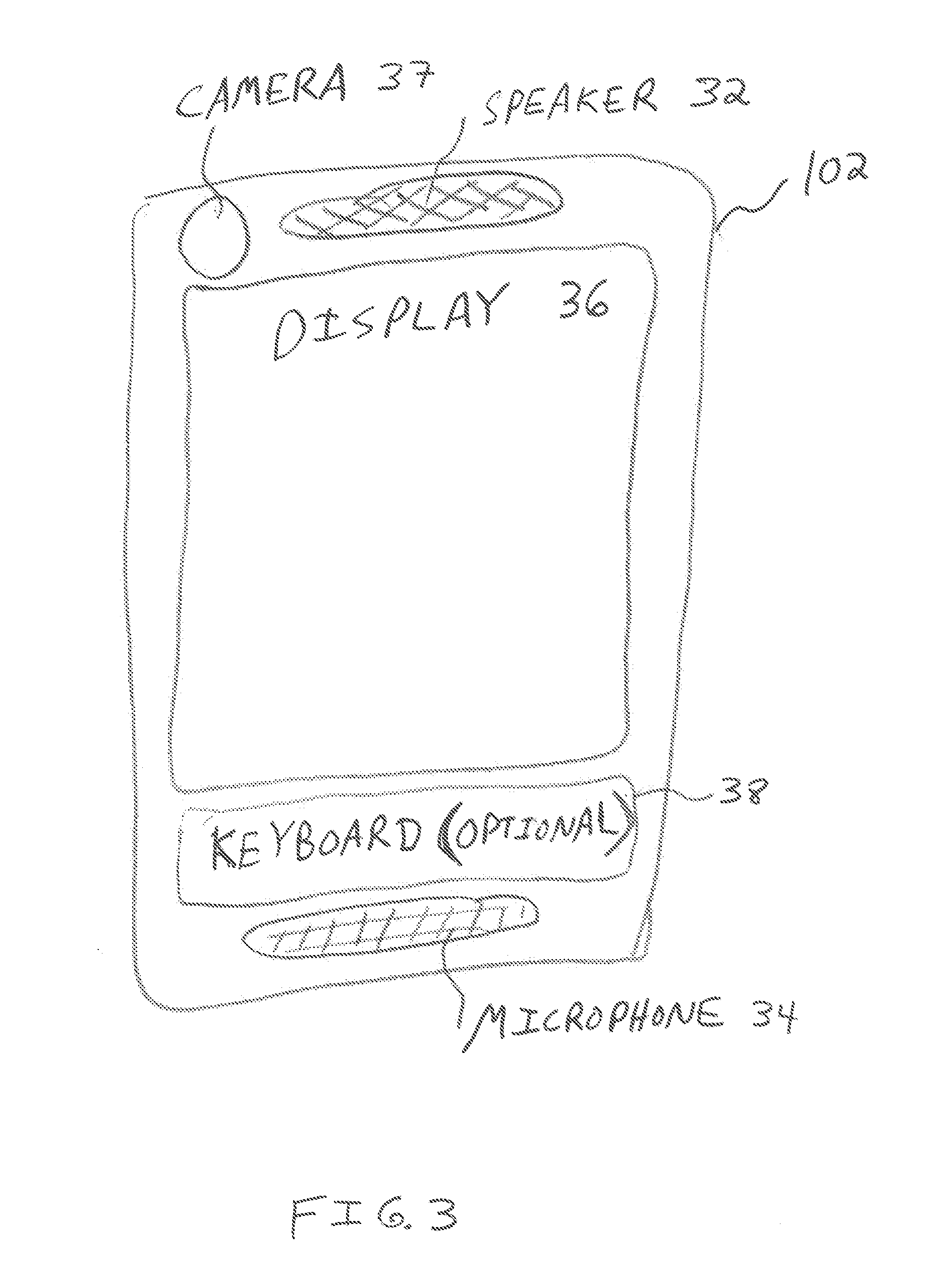

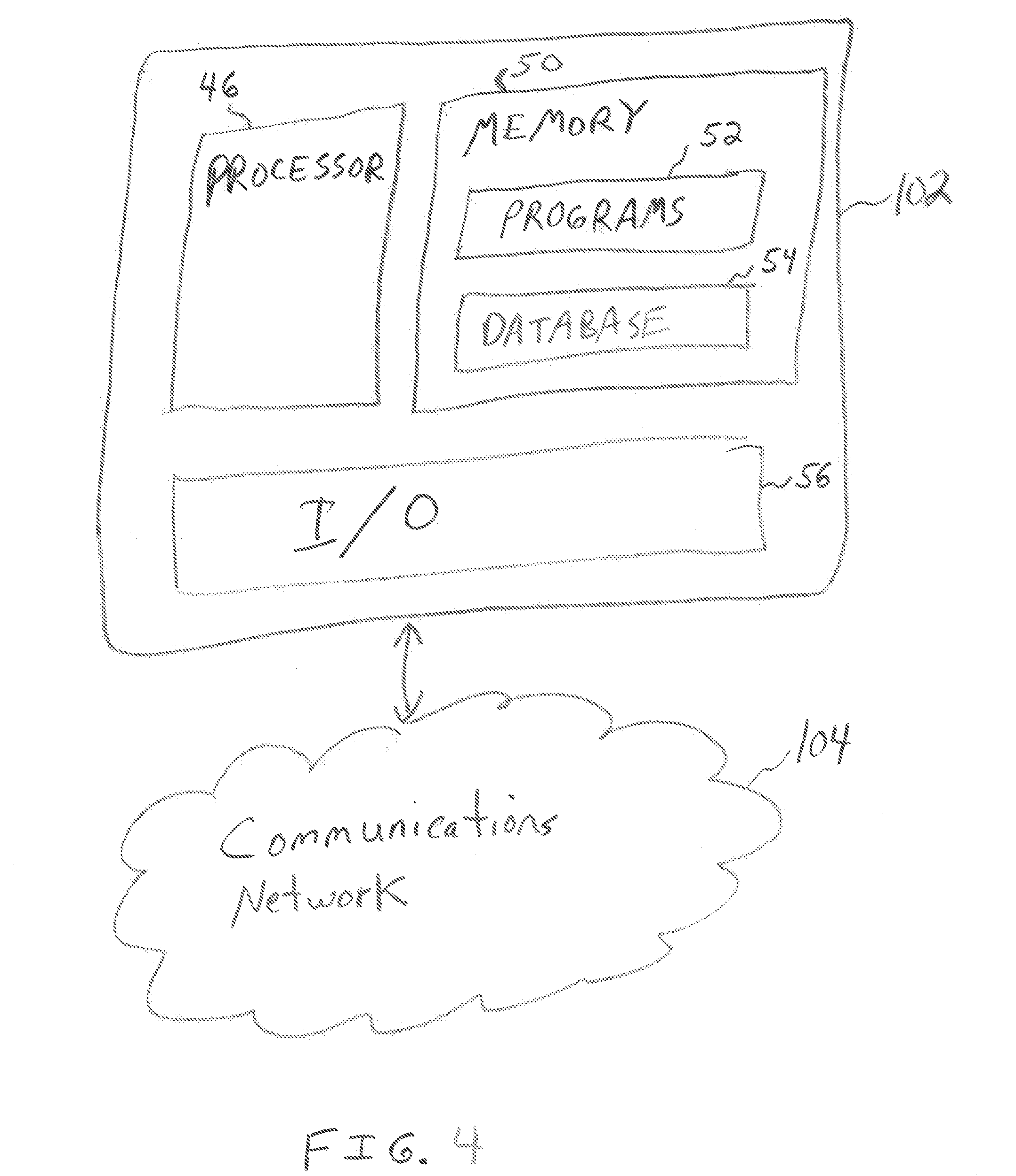

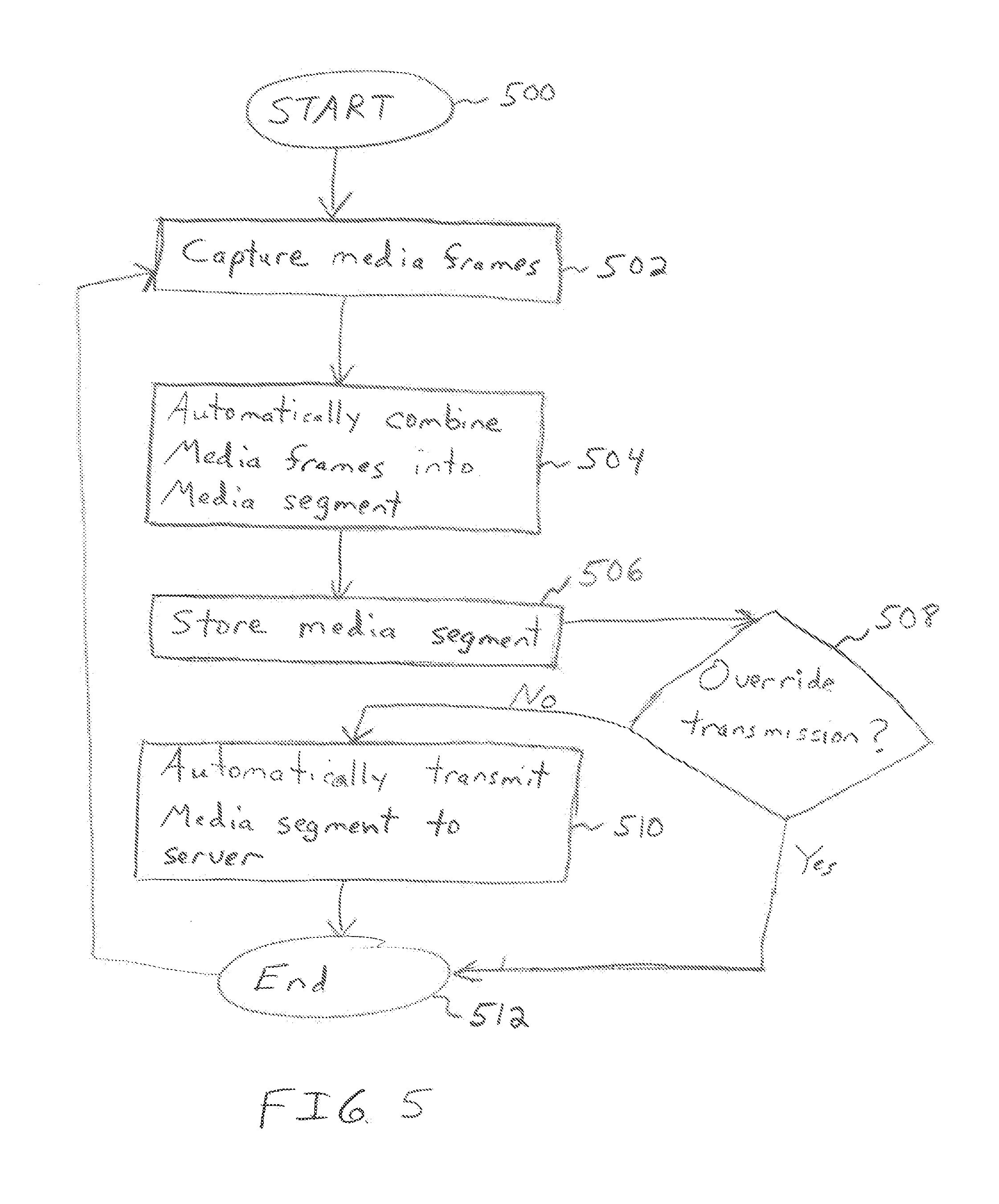

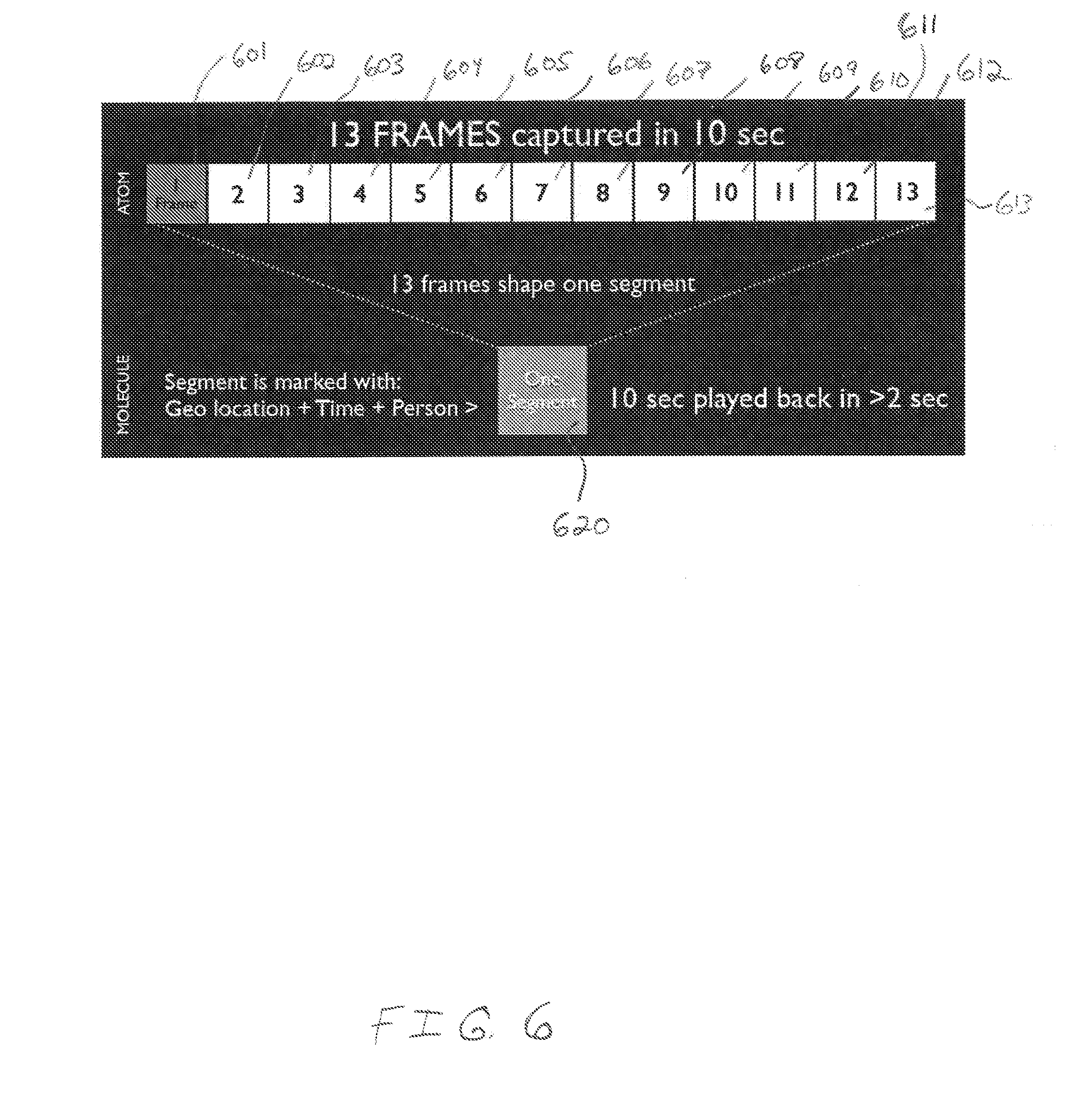

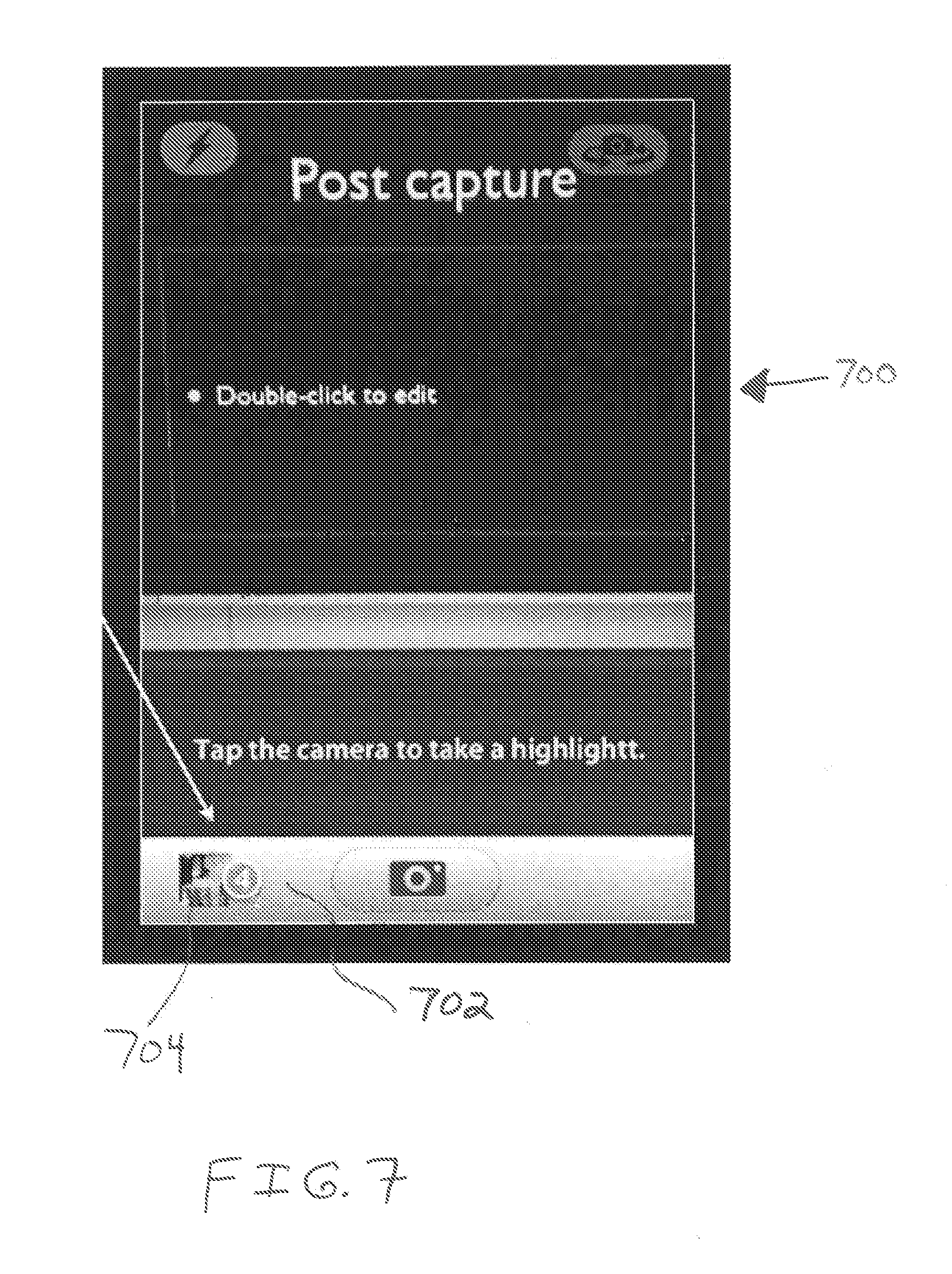

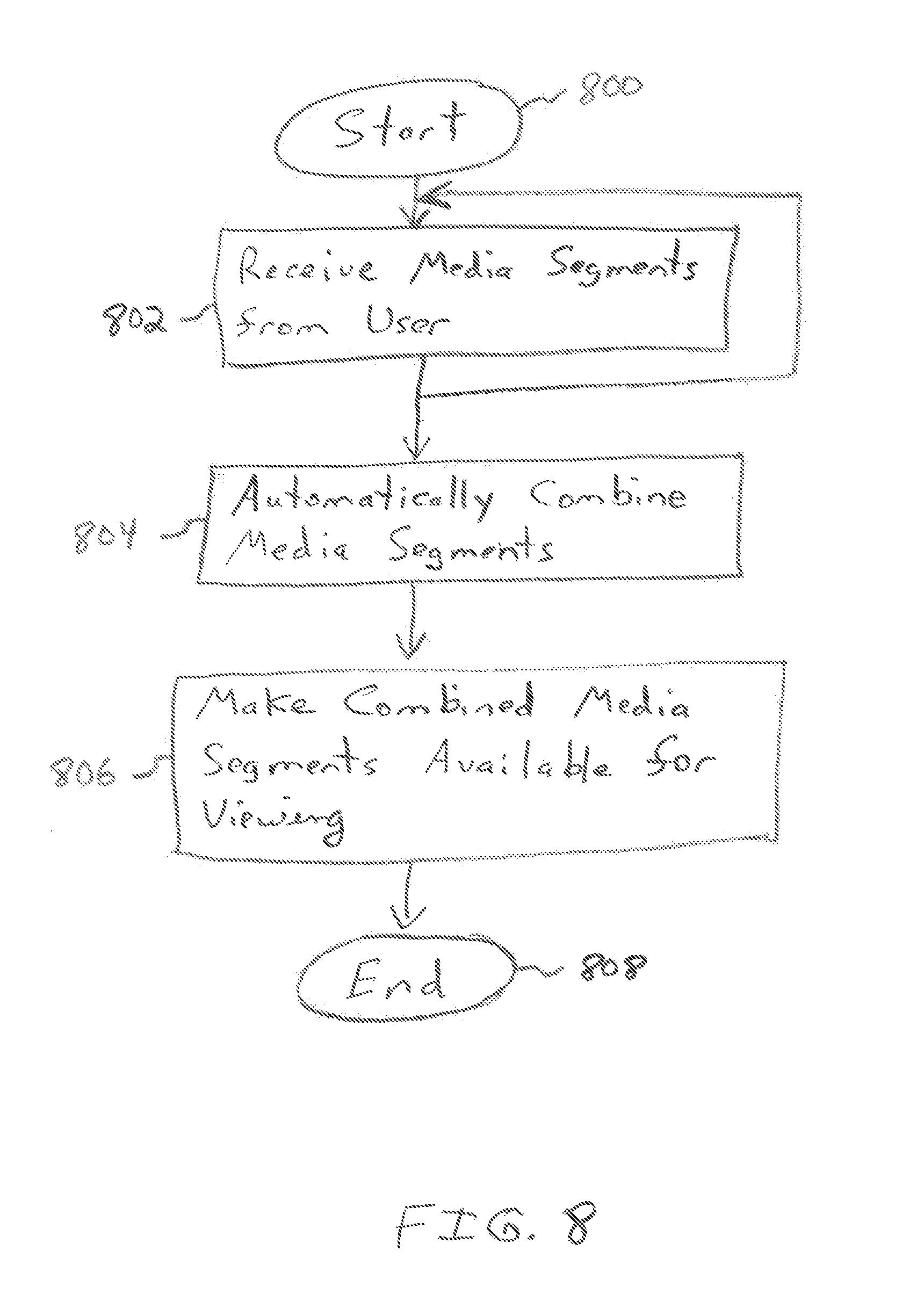

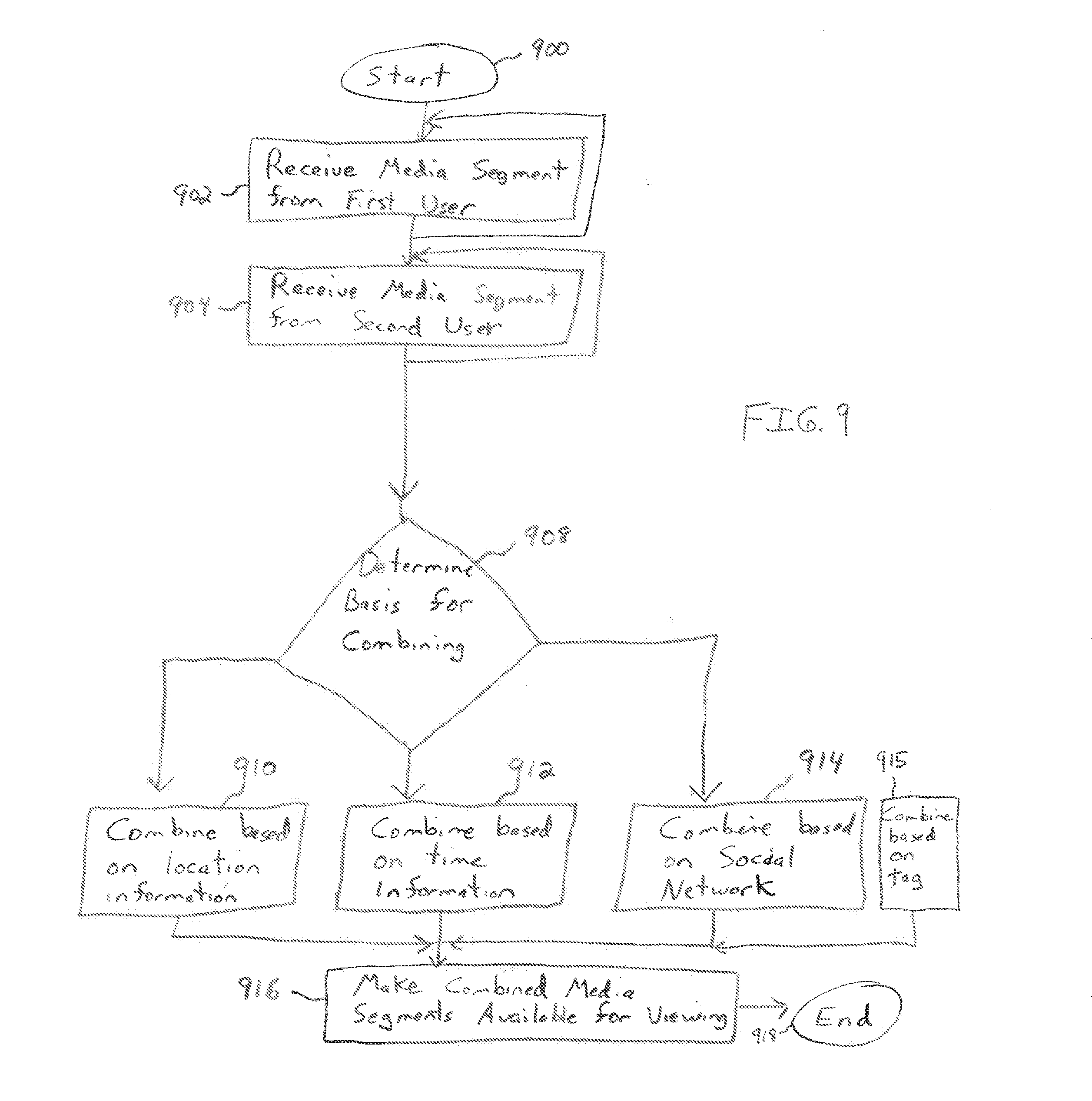

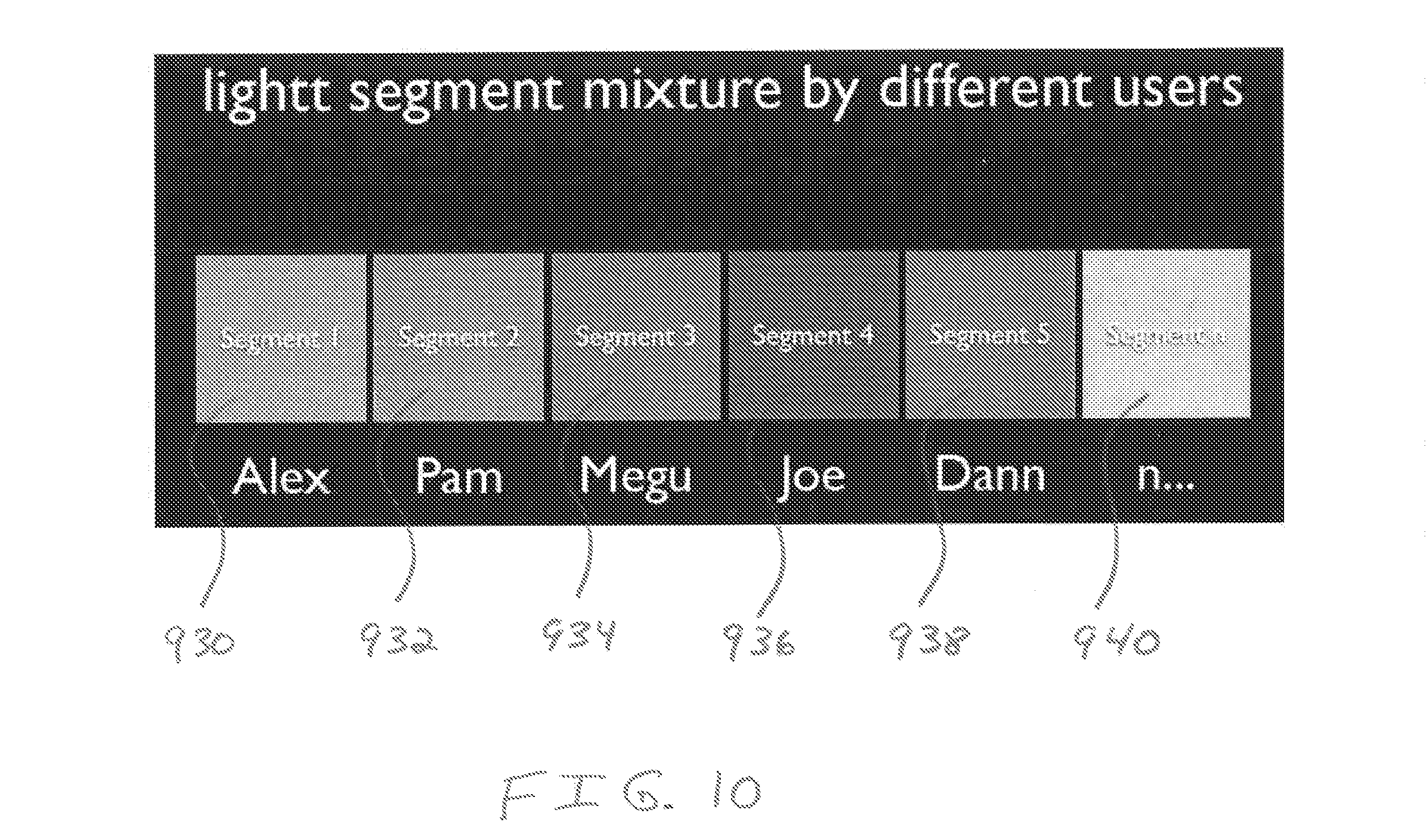

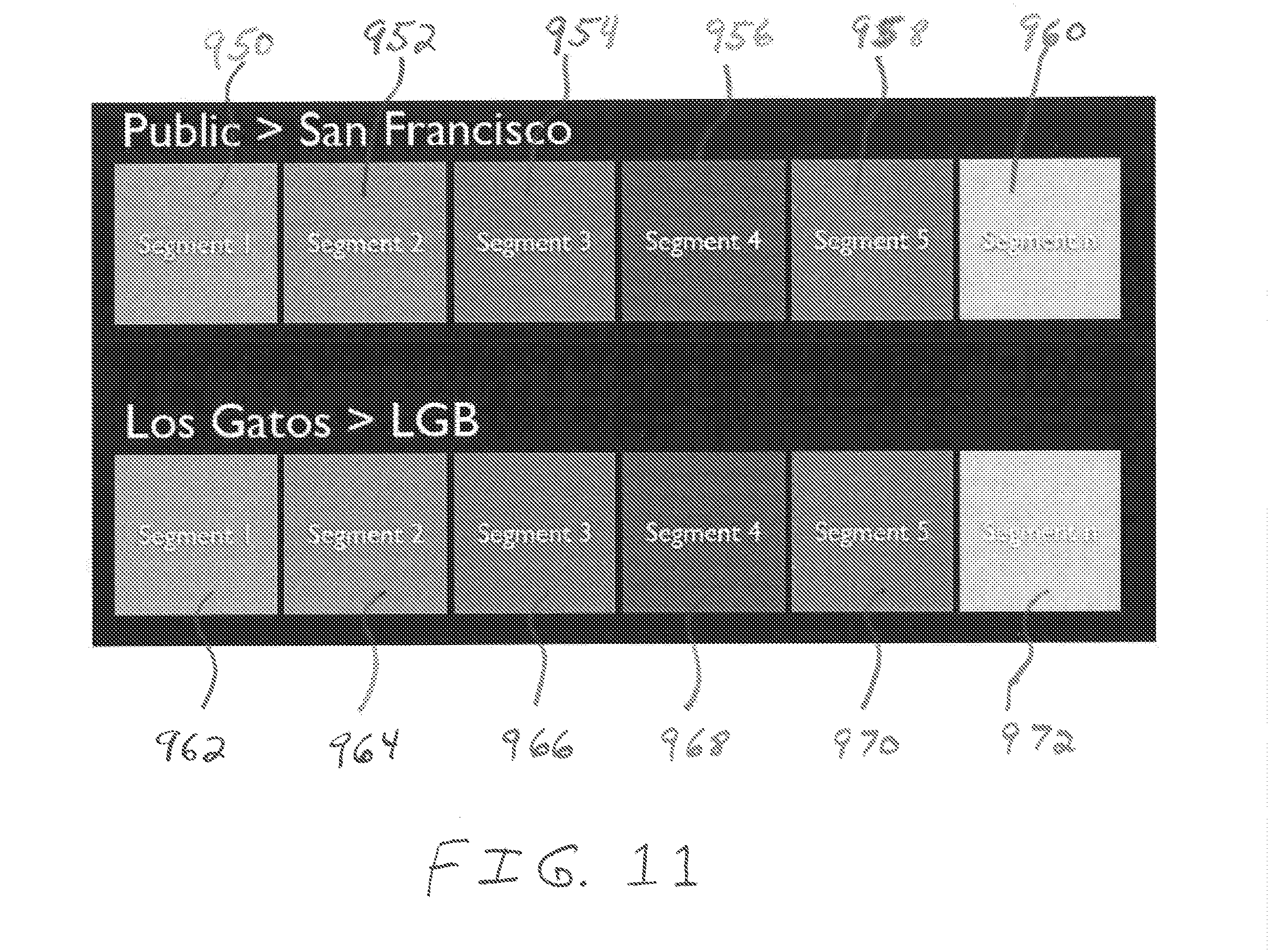

This invention relates generally to the field of computerized user groups, social networks and dynamic media streaming. There are a variety of types of online user groups and social networks in existence today. Users are able to follow members of their social networks and can view pictures, videos, and share information. Typically, users can share pictures or videos by capturing the pictures/videos and then uploading them manually to the online social network. Members of the social network can then view the pictures/videos. Social networks typically do not include any automated ways of uploading pictures/videos. In addition, once pictures/videos are uploaded, there is no way of automatically combining these pictures/videos. A need, therefore, exists for a more flexible method and apparatus for media sharing. According to one embodiment, the invention is a computerized method for creating a composite media program. The method can include receiving from a first user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames. The method can also include receiving from a second user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames. Finally, the method includes automatically combining at least the first media segment and the second media segment into the composite media program including a series of media segments, wherein the composite media program is available for viewing by at least a set of members of a user group. The method can be performed in a server that is connected through a network to a plurality of user devices. The media segment can be, for example, a video clip that includes a series of images (which can be media frames). The media segment could also include sound. This method allows the members of the social network to follow the users in an automated manner. 
The user group can be any group of users that have common interests, common demographics, a common location, or other common characteristics. The user group can also be a social network. Such a social network can have privacy protections to allow users to keep information, including media segments, restricted to only certain users. In other embodiments, the invention can be a computer readable medium that contains instructions that, when executed, perform the steps set forth above. Another embodiment of the invention is also a computerized method for creating a composite media program. In this embodiment, the invention includes receiving from a user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames. The invention also includes receiving from the user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames. The first media segment and the second media segment are automatically combined into the composite media program, wherein the composite media program includes a series of media segments. The method also includes making the composite media program available for viewing only by a set of members of a user group that includes the user. In other embodiments, the invention can be a computer readable medium that contains instructions that, when executed, perform the steps set forth above. Yet another embodiment is a computerized method for creating a media segment in a mobile device. This method includes capturing a plurality of media frames with the mobile device and automatically combining the plurality of media frames into the media segment, where each segment includes a plurality of frames. The media segment is then stored in storage located on the mobile device. The media segment can then be automatically transmitted to a network server, wherein a user of the mobile device can override the transmission if not desired. 
The user can, for example, override the transmission by deleting the media segment prior to transmission. In other embodiments, the invention can be a computer readable medium that contains instructions that, when executed, perform the steps set forth above. The invention is illustrated in the figures of the accompanying drawings which are meant to be exemplary and not limiting, in which like references are intended to refer to like or corresponding parts, and in which: To address the need set forth above, according to one aspect, the invention includes a communications network interface, such as a web server, for interacting with a plurality of users for implementing the functionality of some embodiments of the invention. More specifically, as can be seen in As previously mentioned, the user computers 102 are connected to the aggregator computer 106 via communications network 104, which may be a single communications network or comprised of several different communications networks. As such, communications network 104 can be a public or private network, which can be any combination of the internet and intranet systems, that allows a plurality of system users to access the computer 106. For example, communications network 104 can connect all of the system components using the internet, a local area network (“LAN”), e.g., Ethernet or Wi-Fi, or wide area network (“WAN”), e.g., LAN to LAN via internet tunneling, or a combination thereof, and using electrical cable, e.g., HomePNA or power line communication, optical fiber, and radio waves, e.g., wireless LAN, to transmit data. As one skilled in the art will appreciate, in some embodiments, user computers 102 may be networked together using a LAN for a university, home, apartment building, etc., but may be connected to the aggregator computer 106 via internet tunneling to implement a WAN. In other instances, all of the user computers 102 and the aggregator computer 106 may connect using the internet. 
Still in other implementations, a user may connect to the aggregator using, e.g., wireless LAN and the internet. Moreover, the term “communications network” is not limited to a single communications network system, but may also refer to separate, individual communications networks used to connect the user computers 102 to aggregator computer 106. Accordingly, though each of the user computers 102 and aggregator computer 106 are depicted as connected to a single communications network, such as the internet, an implementation of the communications network 104 using a combination of communications networks is within the scope of the invention. As one skilled in the art will appreciate, the communications network may interface with the aggregator computer 106 to provide a secure access point for users 101 and to prevent users 101 from accessing the various protected databases in the system. In some embodiments, a firewall may be used, and it may be a network layer firewall, i.e., packet filters, application level firewalls, or proxy servers. In other words, in some embodiments, a packet filter firewall can be used to block traffic from particular source IP addresses, source ports, destination IP addresses or ports, or destination services like www or FTP, though a packet filter in this instance would most likely block certain source IP addresses. In other embodiments, an application layer firewall may be used to intercept all packets traveling to or from the system, and may be used to prevent certain users, i.e., users restricted or blocked from system access, from accessing the system. Still, in other embodiments, a proxy server may act as a firewall by responding to some input packets and blocking other packets. An aggregator computer 106 will now be described with reference to As can be seen, the I/O device 202 is connected to the processor 204. 
Processor 204 is the “brains” of the aggregator computer 106, and as such executes program product 208 and works in conjunction with the I/O device 202 to direct data to memory 206 and to send data from memory 206 to the various file servers and communications network, including the database 207. Processor 204 can be, e.g., any commercially available processor, or plurality of processors, adapted for use in an aggregator computer 106, e.g., Intel® Xeon® multicore processors, Intel® micro-architecture Nehalem, AMD Opteron™ multicore processors, etc. As one skilled in the art will appreciate, processor 204 may also include components that allow the aggregator computer 106 to be connected to a display [not shown] and keyboard that would allow, for example, an administrative user direct access to the processor 204 and memory 206. Memory 206 may be any computer readable medium that can store the algorithms forming the computer instructions of the instant invention and data, and such memory 206 may consist of both non-volatile memory, e.g., hard disks, flash memory, optical disks, and the like, and volatile memory, e.g., SRAM, DRAM, SDRAM, etc., as required by embodiments of the instant invention. As one skilled in the art will appreciate, though memory 206 is depicted on, e.g., the motherboard, of the aggregator computer 106, memory 206 may also be a separate component or device, e.g., FLASH memory or other storage, connected to the aggregator computer 106. The database 207 can operate on the memory to store media segments and combined media segments in the manner described herein. Referring again to The memory 50 can be any standard memory device (a computer readable medium), such as NAND or NOR flash memory or any other type of memory device known in the art. The memory 50 stores instruction programs 52. These instruction programs 52 can be the code that performs the functions described above and below for the user device 102. 
During operation, the instructions can be executed by the processor 46 in order to perform the functions described herein. The database 54 can be used to store media frames and media segments captured on the device 102, as described in greater detail below. The I/O device 56 can be used to input or output data to the device 102, such as by wirelessly transmitting data (either by cellular or by Wi-Fi or other methods) to the network 104. In operation, the code for performing the functions of the device 102 can be loaded onto the device 102. The code to perform these functions can be stored, either before or after being loaded on device 102, on a computer readable medium. When loaded onto a device 102 and executed, the code can perform the logic described above and in the sections below. The media segments can be bundled as compressed packets for efficient transport to the server 106, particularly where the user device is a wireless device that transmits data wirelessly to the network. This allows for efficient transmission over a range of connectivity (e.g., from 3G to Wi-Fi). In addition, in some embodiments, the user device 102 will use power saving methods to conserve power for the transmission of one or more media segments to the server 106. If, for instance, a wireless quality level is low for a wireless network, continually trying to transmit the one or more media segments to the network can wear down the battery of the user's device. The user's device can, therefore, be programmed to determine a quality level of a connection of the mobile device to a network, and then perform the step of automatically transmitting only when the quality level is above a predetermined threshold. As an example, the user can capture a series of visual images/media frames with the camera of the user device 102. In this example, each visual image, or photograph, can be considered a media frame. The user device 102 can combine these media frames into one media segment. 
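The device-side behavior described above (combining captured frames into a segment, and transmitting only when connection quality exceeds a threshold) can be illustrated with a minimal sketch. The names `MediaFrame`, `MediaSegment`, `combine_frames`, `should_transmit`, and `try_upload`, and the numeric quality scale, are assumptions made for illustration only; the specification does not define them.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class MediaFrame:
    """One captured image (hypothetical type for this sketch)."""
    data: bytes


@dataclass
class MediaSegment:
    """A media segment: an ordered series of media frames."""
    frames: List[MediaFrame] = field(default_factory=list)


def combine_frames(frames: List[MediaFrame]) -> MediaSegment:
    """Combine captured frames, in capture order, into one segment."""
    return MediaSegment(frames=list(frames))


def should_transmit(quality: float, threshold: float) -> bool:
    """Allow transmission only when quality is above the threshold."""
    return quality > threshold


def try_upload(
    segment: MediaSegment,
    quality: float,
    threshold: float,
    send: Callable[[MediaSegment], None],
) -> bool:
    """Send the segment if the connection is good enough.

    Returns True when the segment was handed to `send`, and False when
    the attempt was skipped to avoid draining the battery on a poor link.
    """
    if not should_transmit(quality, threshold):
        return False
    send(segment)
    return True
```

In this sketch a skipped upload simply returns `False`; a caller could retry later when the measured quality improves.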
In During the capture process, time information and/or location information and/or person information and/or subject matter information (i.e., tag information) can be captured and associated with one or more media segments. For instance, each segment can be marked with the time at which the images were captured. This allows each media segment 620 to have a time associated with it, and after transmission to the server, this time information can be used to assemble media segments captured within the same general time period. Further, the location information that can be appended to each segment 620 can be, for example, geo location information, such as GPS information or other information about the location of the user device 102 at the time of capture. The person information can be the identity of the user of the user device 102. The location information and the person information, like the time information, can be used by the server to assemble media segments for playback. For instance, the server can assemble the media segments with the same location information for playback, and the server can also assemble media segments from the same user for playback. Finally, the subject matter information can include a descriptor from the user relating to the media segment, such as food, poker, fishing, a child, etc. This can allow the server to assemble media segments that have the same subject matter into a composite media program. In addition, a graphical user interface can be presented that allows the user to postpone transmission to the server for some period of time, such as 1, 10, or 24 hours. In typical operation, the user device 102 will transmit a media segment after some set period of time, such as 15 minutes. In other words, the user device 102 will be programmed to automatically upload the media segments to the server as a background service that is invisible (entirely or largely) to the user. 
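One way to picture the delayed background upload is a small queue that records when each segment becomes due, and lets the user postpone or delete it beforehand. This is a sketch under stated assumptions: the class `UploadQueue`, its method names, and the use of segment identifier strings are hypothetical, and the 15-minute default is taken from the example in the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List

# Example delay from the text ("such as 15 minutes").
DEFAULT_DELAY = timedelta(minutes=15)


@dataclass
class PendingUpload:
    segment_id: str
    due: datetime  # time at which the segment may be transmitted


class UploadQueue:
    """Tracks segments waiting for automatic transmission."""

    def __init__(self) -> None:
        self._pending: Dict[str, PendingUpload] = {}

    def schedule(self, segment_id: str, captured_at: datetime) -> None:
        """Queue a segment for upload after the default delay."""
        due = captured_at + DEFAULT_DELAY
        self._pending[segment_id] = PendingUpload(segment_id, due)

    def postpone(self, segment_id: str, hours: int) -> None:
        """Push the transmission time back (e.g., 1, 10, or 24 hours)."""
        self._pending[segment_id].due += timedelta(hours=hours)

    def delete(self, segment_id: str) -> None:
        """Override the transmission entirely by removing the segment."""
        self._pending.pop(segment_id, None)

    def due_now(self, now: datetime) -> List[str]:
        """Return the ids of segments whose transmission time has arrived."""
        return sorted(
            sid for sid, item in self._pending.items() if item.due <= now
        )
```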
During this time window, the user can override this transmission by selecting the segment and either deleting it or changing its time for transmission (changing a transmission time can give the user additional time to decide, for example, to delete the media segment). In still other embodiments, a user can set editing rules. These editing rules can allow for the user device to automatically combine the media frames using the predetermined editing rules. For example, the user can set the editing rules so that no more than 5 or 10 frames are assembled into a media segment, so that no sound is assembled into the media segment, or so that all media segments are assembled in black and white only. In another embodiment of In another embodiment of Next, in block 806, the aggregator computer 106 makes the combined media segments available for viewing. For example, in one embodiment, the aggregator computer 106 can make the combined media segments—also called composite media programs—available for viewing by any user. In other embodiments, the aggregator computer 106 can make the composite media programs available for viewing only by members of a particular user group. Such a user group can be, for example, a particular social network. The phrase social network is used in much of the description below, but this description also applies to a user group, where a user group can have broader applicability than a social network. This can keep the user's media segments somewhat private in that the public at large will not be able to view the composite media programs in this embodiment. The process ends at block 808. Within the embodiments described above, media segments can be combined at the server level for easy viewing by users. This combining of media segments can be done on-the-fly or in advance. For instance, on-the-fly combinations can be performed when a user wishes to view media segments through the system. 
If the user selects a topic, location, time, or user group, the server can combine the media segments on-the-fly and present them to the user. In addition, the combination of media segments can be performed in advance of a request for viewing by a particular user. In some embodiments, the server can combine media segments along with other types of media segments that are not captured by users of the system. For instance, the media segments captured by users can be combined with media from sources such as the Internet. As one specific example, if users tag media segments as relating to a home run by the San Francisco Giants, these tagged media segments can be combined along with other media relating to home runs by the San Francisco Giants that is available on the Internet. First, as shown in block 910, media segments can be combined into composite media programs based on location information. For example, if the media segment(s) for the first user and the media segment(s) for the second user (and for additional users if more are present) were captured in the same location, the media segments can be combined based on location to create a composite media program that includes the media segments from the same location. In this example, for instance, media segments from the first and second users can be combined only if they were tagged as being captured in the same location. Second, as shown in block 912 of Third, as shown in block 914 of Fourth, as shown in block 915 of The examples set forth above combine media segments only if certain criteria are met (i.e., time, location, social network, or tag information). In some embodiments, a subset or all of these criteria can be used to combine media segments. For instance, media segments can be combined based on location information and tag information, such that media segments from the same location and having the same tag information will be combined. 
In addition, time and/or social network can be factored into the decision as to whether to combine media segments. Any combination of the criteria (i.e., time, location, social network, or tag information) can be used to combine media segments. More than one of the bases for forming combinations described above in connection with steps 910, 912, and 914 of After media segments are combined in the manner set forth above, the aggregator computer 106 can make the composite media program available for viewing. This is shown in block 916 of Several additional features can be used in the methods and system described above. For example, the aggregator computer 106 can unpack and display media segments based on user selected visibility/privacy settings. For example, if the user who captured a media segment desires that the media segment only be available to a small or selected set of other users, the user can select those users prior to upload to the server 106. For example, a graphical user interface can be displayed to the user to allow the user to select other users who can see the media segment. In addition, if no such selections are made by the user, the media segment can, in one embodiment, be viewed by any user or the public at large after upload. In still other embodiments, after upload to the server 106, users are always able to go back and remove individual media segments so that they will no longer be visible to others. For example, if a first user captured a media segment and uploaded it to the server, that user would be able to go back later and delete the media segment so that other users could not view it. In some embodiments of the invention set forth above, upon making the composite media programs available for viewing, the composite media programs can be distributed from the server 106 to other user devices via the Web. 
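The server-side combination by any subset of criteria, together with the visibility settings described above, can be sketched as a grouping step followed by a per-viewer filter. The `Segment` fields, the hourly time bucket, and the functions `combine` and `visible_to` are assumptions introduced for illustration; social-network membership is omitted from this sketch for brevity.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Segment:
    segment_id: str
    owner: str
    location: str
    hour: int  # coarse capture-time bucket (an assumption of this sketch)
    tag: str
    # None means no viewers were selected, so the segment is public.
    allowed_viewers: Optional[FrozenSet[str]] = None


def combine(
    segments: Iterable[Segment], criteria: Tuple[str, ...]
) -> Dict[tuple, List[str]]:
    """Group segment ids into composite programs.

    Segments land in the same program only if they agree on every
    attribute named in `criteria` (e.g., ("location", "tag")).
    """
    programs: Dict[tuple, List[str]] = defaultdict(list)
    for seg in segments:
        key = tuple(getattr(seg, name) for name in criteria)
        programs[key].append(seg.segment_id)
    return dict(programs)


def visible_to(segments: Iterable[Segment], viewer: str) -> List[Segment]:
    """Keep only the segments the viewer is permitted to see."""
    return [
        s
        for s in segments
        if s.allowed_viewers is None
        or viewer == s.owner
        or viewer in s.allowed_viewers
    ]
```

Filtering with `visible_to` before calling `combine` would yield composite programs that contain only segments the requesting viewer may see.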
In some embodiments, as described above in connection with As described above, users can decide to mark each media segment as either private or public (via, for example, a graphical user interface at the user device). If marked as public, any user can view that media segment when compiled into a composite media program and made available for viewing at the server 106. If marked as private, in one embodiment, no other user aside from the user who captured the media segment can view it during playback from the server 106. In still other embodiments, when marked as private, only members of a selected social network or networks that include the user who captured it can view the media segment. In some embodiments, public sharing of media segments (i.e., sharing via the server 106 of media segments that are marked for public sharing) can take place in a number of ways. First, if a user decides to follow a person, the user can see media segments from that person. For example, the server 106 can assemble all media segments from that person into a composite media program for viewing by the user. This allows the user to follow the selected person, and if media streams are uploaded in real-time, a user can follow the person in real-time. Second, public media segments can be tagged with a special category indicator that can later be used for forming combinations of media segments at the server 106. For instance, all media segments relating to a specific type of food can be marked with a special tag and then combined at the server, or all media segments related to poker can be tagged and combined. This allows users to follow media segments that relate (i.e., are tagged) to particular topics (food, poker, fishing, a child, etc.). Third, media segments can be assembled as public streams based on random sampling. 
In this embodiment, the server 106 can automatically combine media segments based on subject matter information associated with each media segment, where the media segments are combined only if the subject matter information associated with each is the same. The method and system described above can also allow for comment posting by users. For example, if the viewer of a composite media program likes or is interested in certain media segments, the viewer can post comments about those media segments. This allows other viewers of the same media segments to see those comments. In addition, the capturing process can be set up so that each media segment captured by a given user within a predetermined time period (e.g., within the same 10 minutes or the same hour) can be tagged as being associated with one another. When a viewer adds a comment for one of these media segments, each associated media segment can have the same comment added. As set forth above, the invention can be a system or platform for capturing and combining media segments for viewing by users. The platform can include a series of services, modules, and application programming interfaces (APIs) that shape methods and elements for viewing. The media segments can be captured on mobile devices and shared via the Web to members of various social networks. This can allow users to dynamically capture and share media segments so that each segment is created and then aggregated so that it can be presented in an integrated manner. Media segments can be uploaded in real-time after being captured and then sorted by various variables (such as time, location, social networks, or tags) for assembly for viewing. This allows, for example, a user to view public media segments for persons visiting a specific restaurant (where the restaurant is identified by either geo location or by a tag). 
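The comment propagation described above (segments captured by the same user within a predetermined period are associated, and a comment on one is added to all) can be sketched as follows. The function names `associate` and `add_comment`, the 10-minute window measured from the first capture in each group, and the tuple layout of `captures` are assumptions for illustration; the text does not specify how the time period is measured.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import DefaultDict, Dict, List, Optional, Tuple

# Example window from the text ("the same 10 minutes").
WINDOW = timedelta(minutes=10)


def associate(captures: List[Tuple[str, str, datetime]]) -> Dict[str, int]:
    """Map each segment id to an association group number.

    `captures` holds (segment_id, user, capture_time) tuples. Segments from
    the same user that fall within WINDOW of the first capture in a group
    share that group.
    """
    groups: Dict[str, int] = {}
    group_id = -1
    current_user: Optional[str] = None
    group_start: Optional[datetime] = None
    for seg_id, user, when in sorted(captures, key=lambda c: (c[1], c[2])):
        # Start a new group on a new user or when the window has elapsed.
        if user != current_user or when - group_start > WINDOW:
            group_id += 1
            current_user, group_start = user, when
        groups[seg_id] = group_id
    return groups


def add_comment(
    comments: DefaultDict[str, List[str]],
    groups: Dict[str, int],
    seg_id: str,
    text: str,
) -> None:
    """Attach a comment to a segment and to every associated segment."""
    target = groups[seg_id]
    for other, gid in groups.items():
        if gid == target:
            comments[other].append(text)
```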
In addition, all media segments relating to home runs at a particular baseball stadium can be tagged so that the server 106 can compile a composite media segment relating to the home runs. The embodiments can allow for the creation of multiple channels for media curation. Although the invention has been described and illustrated in the foregoing illustrative embodiments, it is understood that the present disclosure has been made only by way of example, and that numerous changes in the details of implementation of the invention can be made without departing from the spirit and scope of the invention. Features of the disclosed embodiments can be combined and rearranged in various ways. We claim: The invention can be a computerized method for creating a composite media program. The method can include receiving from a first user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames. The method can also include receiving from a second user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames. Finally, the method includes automatically combining at least the first media segment and the second media segment into the composite media program including a series of media segments, wherein the composite media program is available for viewing by at least a set of members of a social network. 1. A computerized method for creating a composite media program comprising:

(a) receiving from a first user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames; (b) receiving from a second user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames; and (c) automatically combining at least the first media segment and the second media segment into the composite media program including a series of media segments, wherein the composite media program is combined in an integrated way, wherein the composite media program is available for viewing by at least a set of members of a user group. 2. The computerized method of 3. The computerized method of 4. The computerized method of 5. The computerized method of 6. The computerized method of 7. The computerized method of 8. The computerized method of 9. The computerized method of 10. A computer readable medium containing instructions that, when executed by a processor, performs the following steps to create a composite media program:

(a) receiving from a first user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames; (b) receiving from a second user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames; and (c) automatically combining at least the first media segment and the second media segment into the composite media program including a series of media segments, wherein the composite media program is combined in an integrated way, wherein the composite media program is available for viewing by at least a set of members of a user group. 11. The computer readable medium of 12. A computerized method for creating a composite media program comprising:

(a) receiving from a user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames; (b) receiving from the user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames; (c) automatically combining at least the first media segment and the second media segment into the composite media program, wherein the composite media program includes a series of media segments; and (d) making the composite media program available for viewing only by a set of members of a user group that includes the user. 13. The computerized method of 14. The computerized method of 15. A computer readable medium containing instructions that, when executed by a processor, performs the following steps to create a composite media program:

(a) receiving from a user over a network at least a first media segment, wherein the first media segment includes a first plurality of media frames; (b) receiving from the user over the network at least a second media segment, wherein the second media segment includes a second plurality of media frames; and (c) automatically combining at least the first media segment and the second media segment into the composite media program, wherein the composite media program includes a series of media segments, and wherein the composite media program is available for viewing by at least a set of members of a user group. 16. A computerized method for creating a media segment in a mobile device, comprising:

(a) capturing a plurality of media frames with the mobile device; (b) automatically combining the plurality of media frames into the media segment, wherein each segment includes a plurality of frames; (c) storing the media segment in storage located on the mobile device; and (d) automatically transmitting the media segment to a network server, wherein a user of the mobile device can override the transmitting if not desired. 17. The computerized method of 18. The computerized method of 19. The computerized method of 20. The computerized method of 21. The computerized method of 22. The computerized method of 23. The computerized method of 24. The computerized method of FIELD OF THE INVENTION

BACKGROUND

SUMMARY OF INVENTION

BRIEF DESCRIPTION OF THE DRAWINGS

DETAILED DESCRIPTION