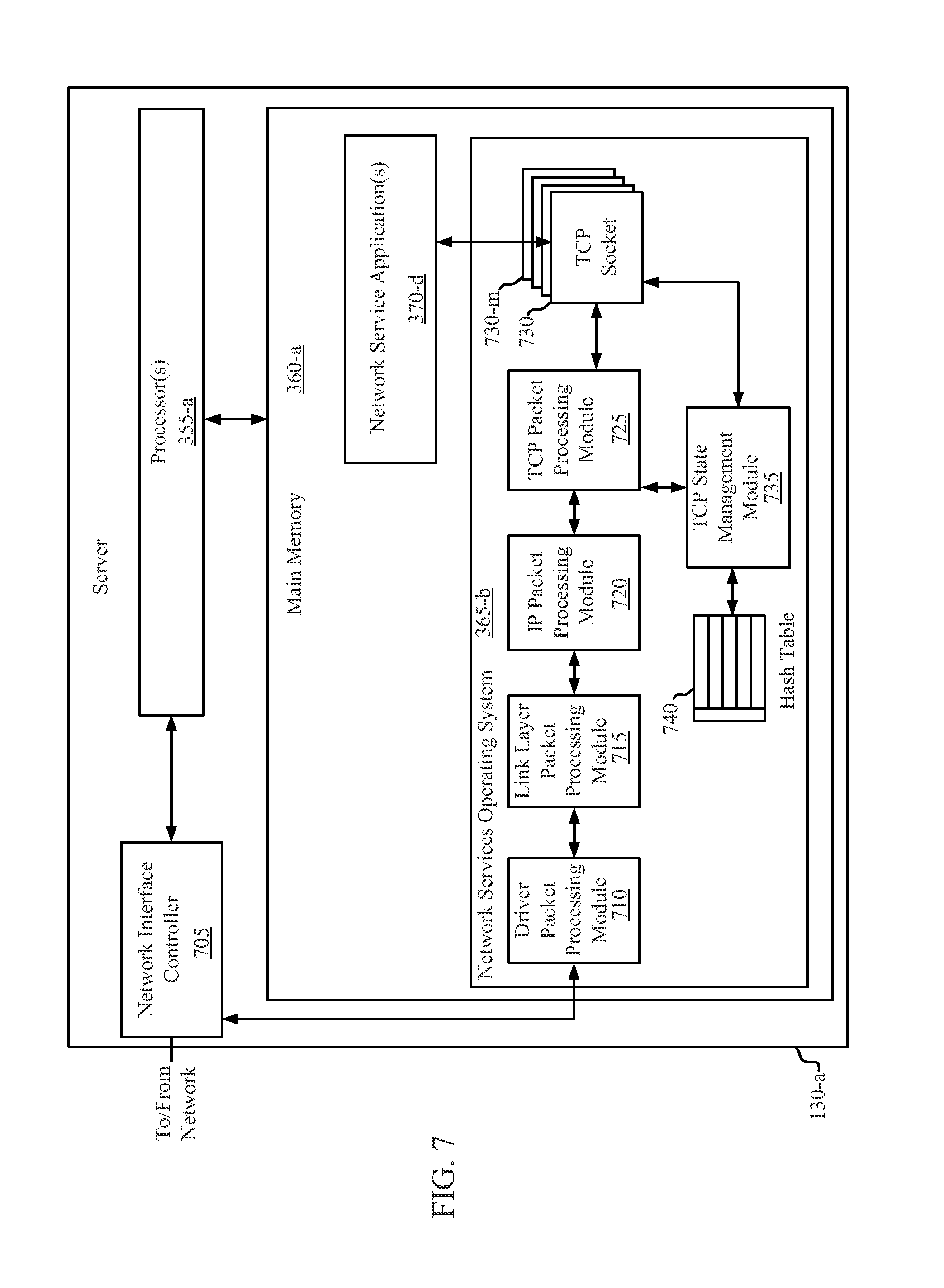

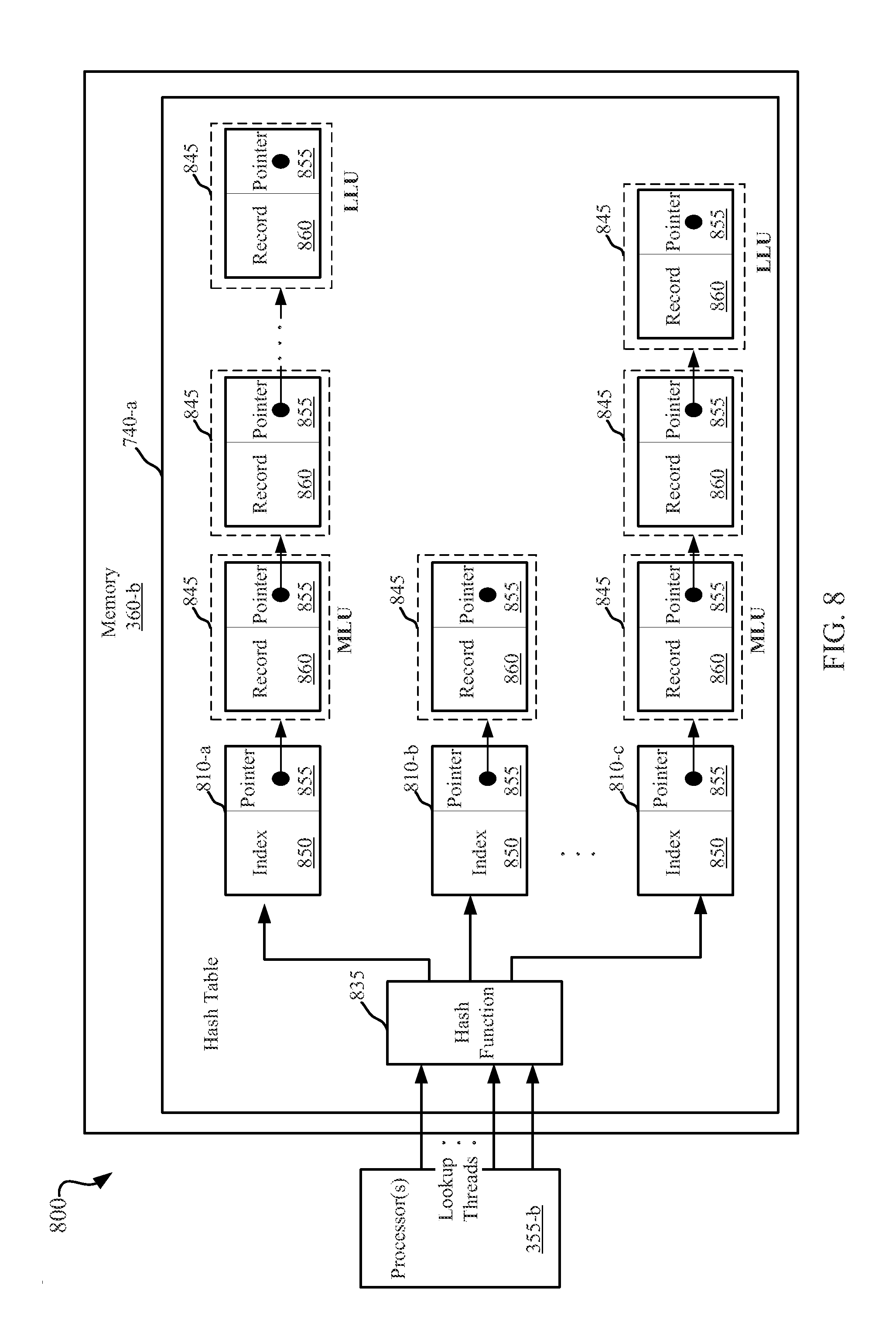

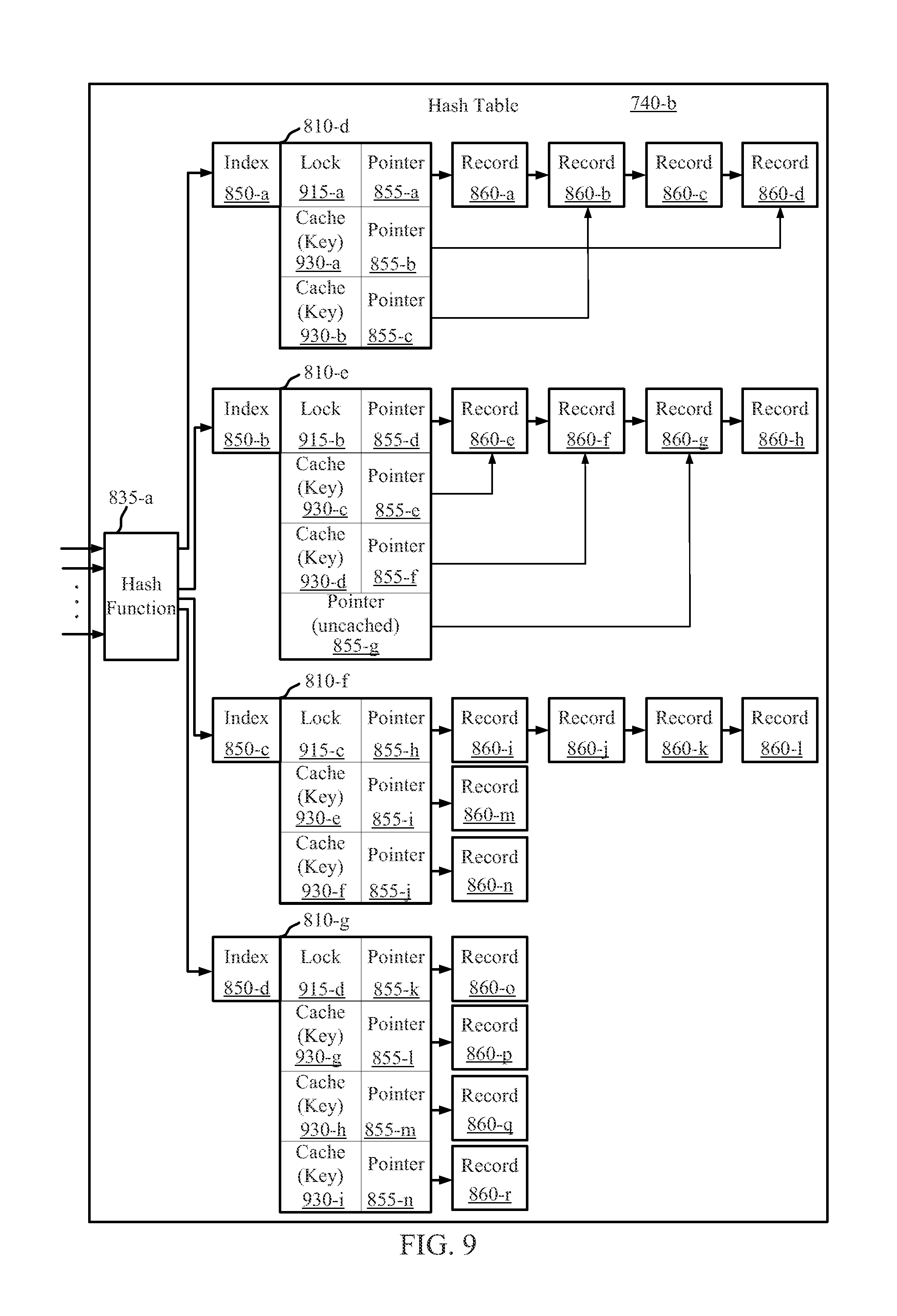

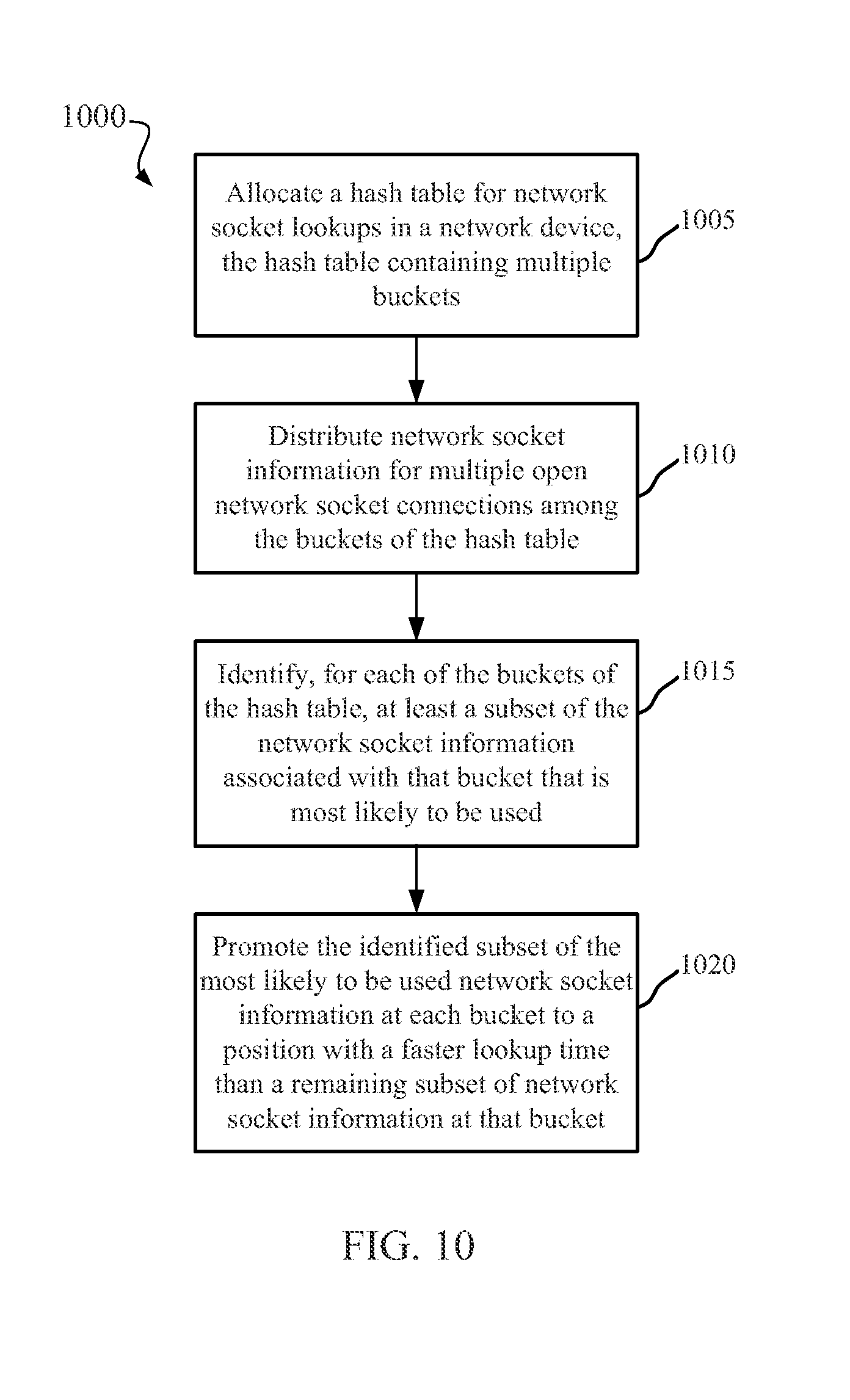

CACHED HASH TABLE FOR NETWORKING

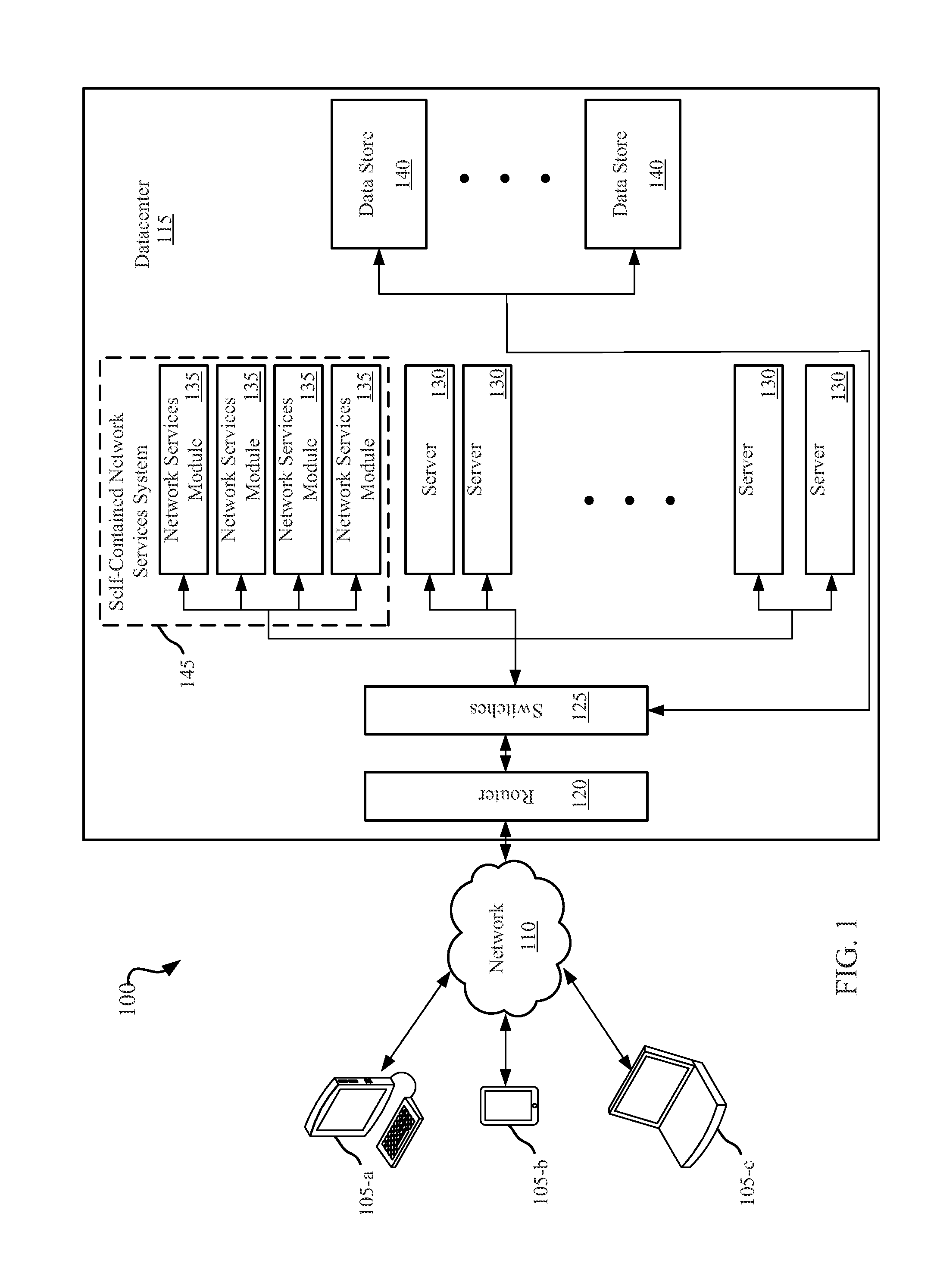

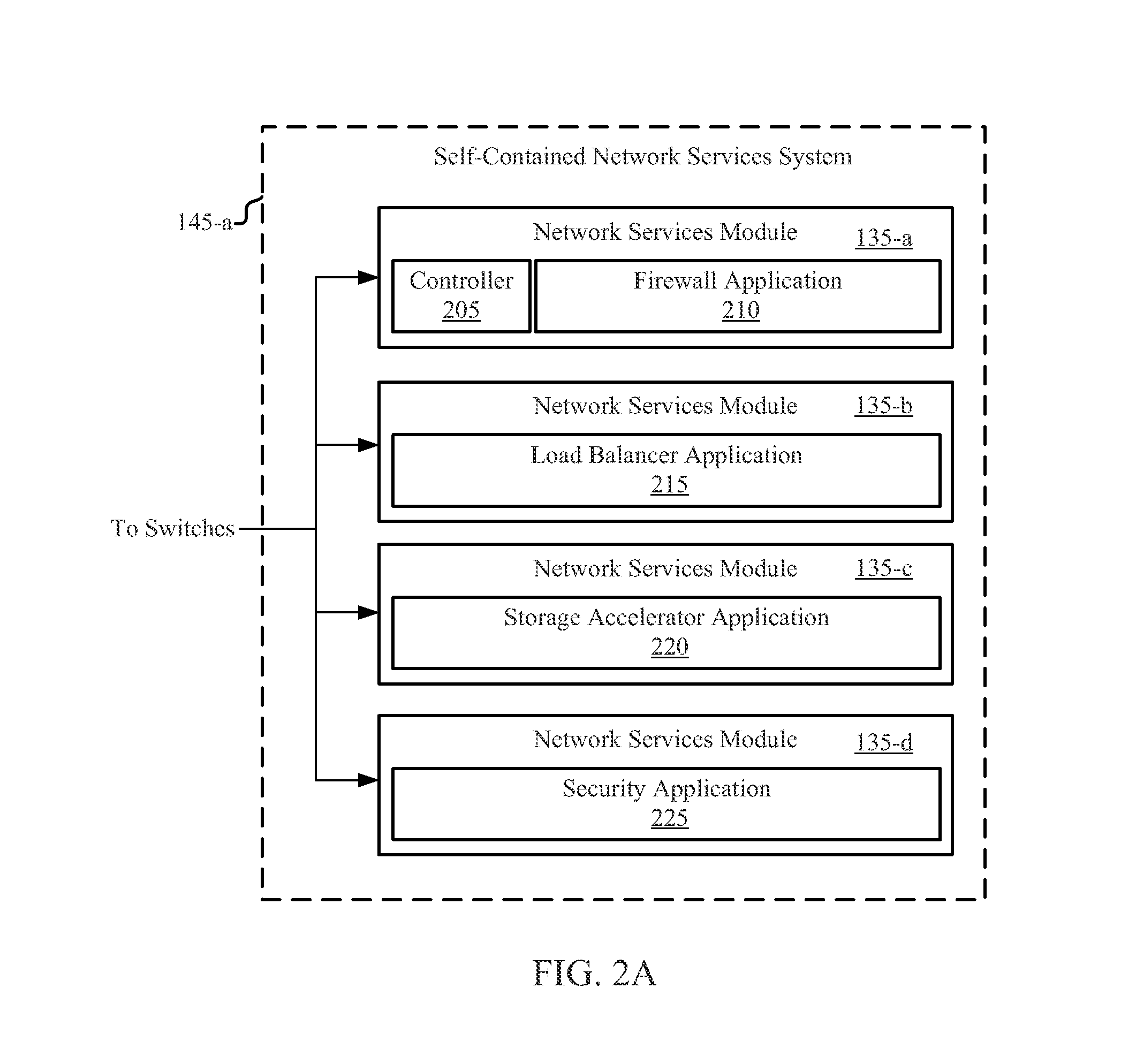

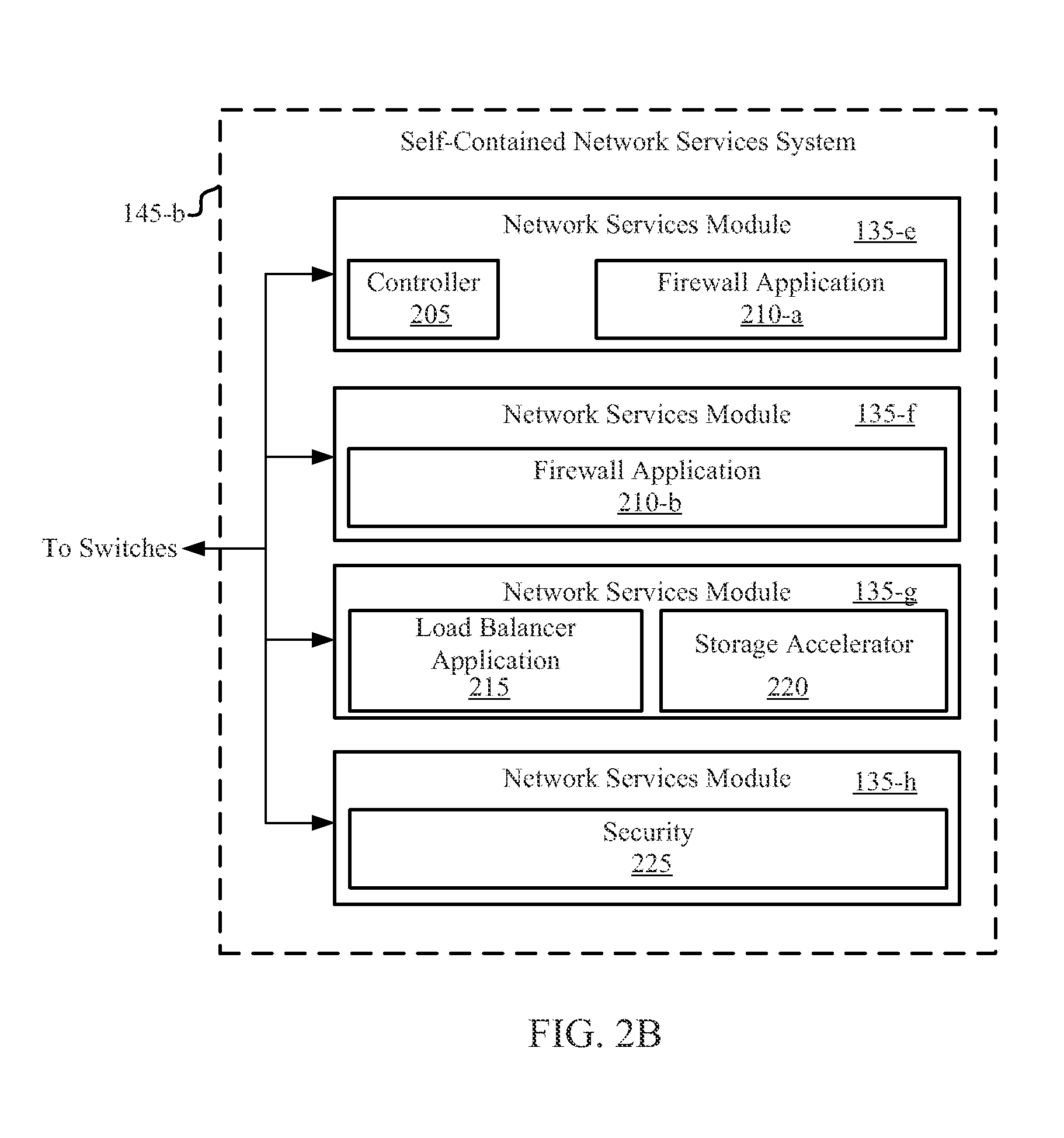

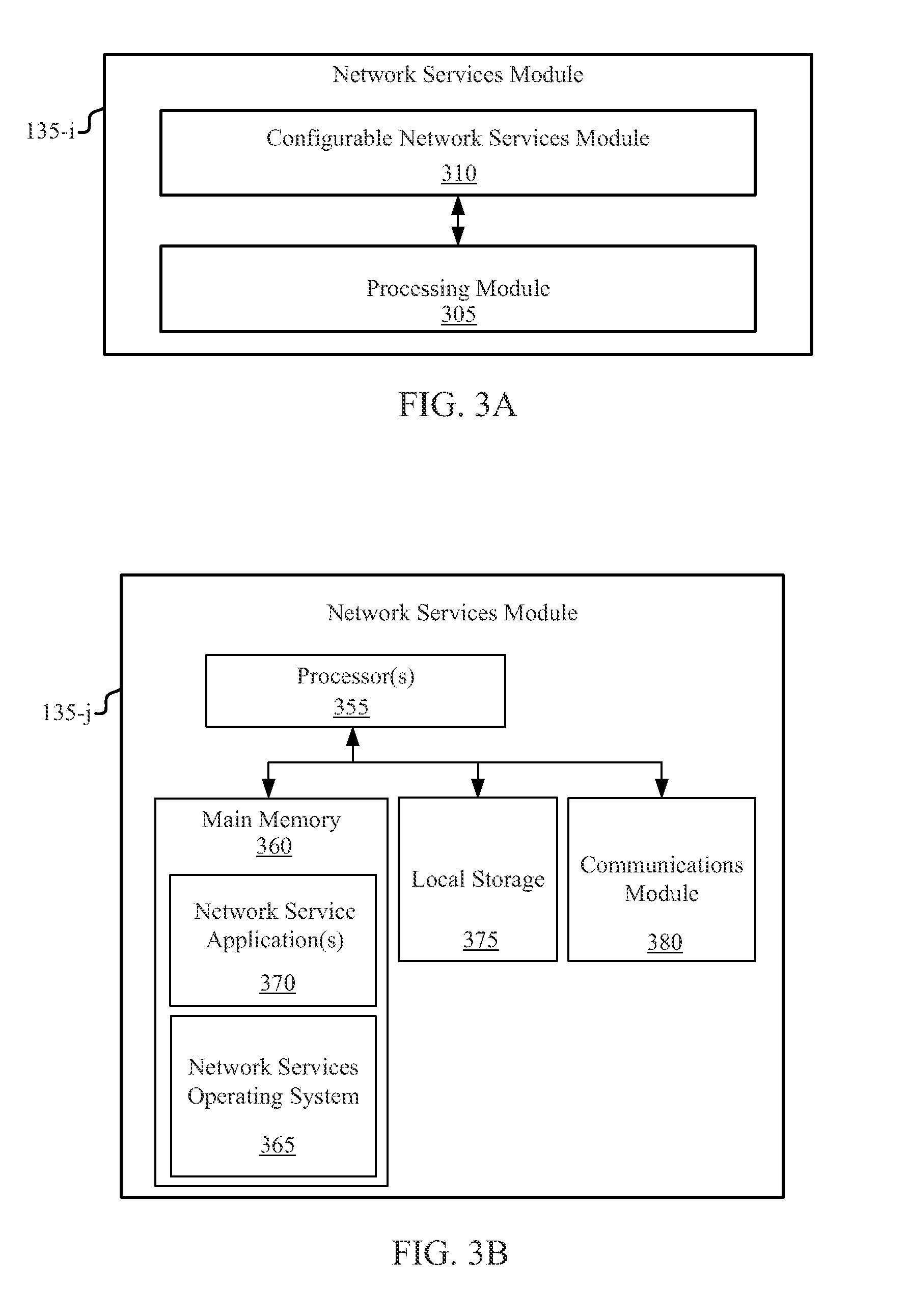

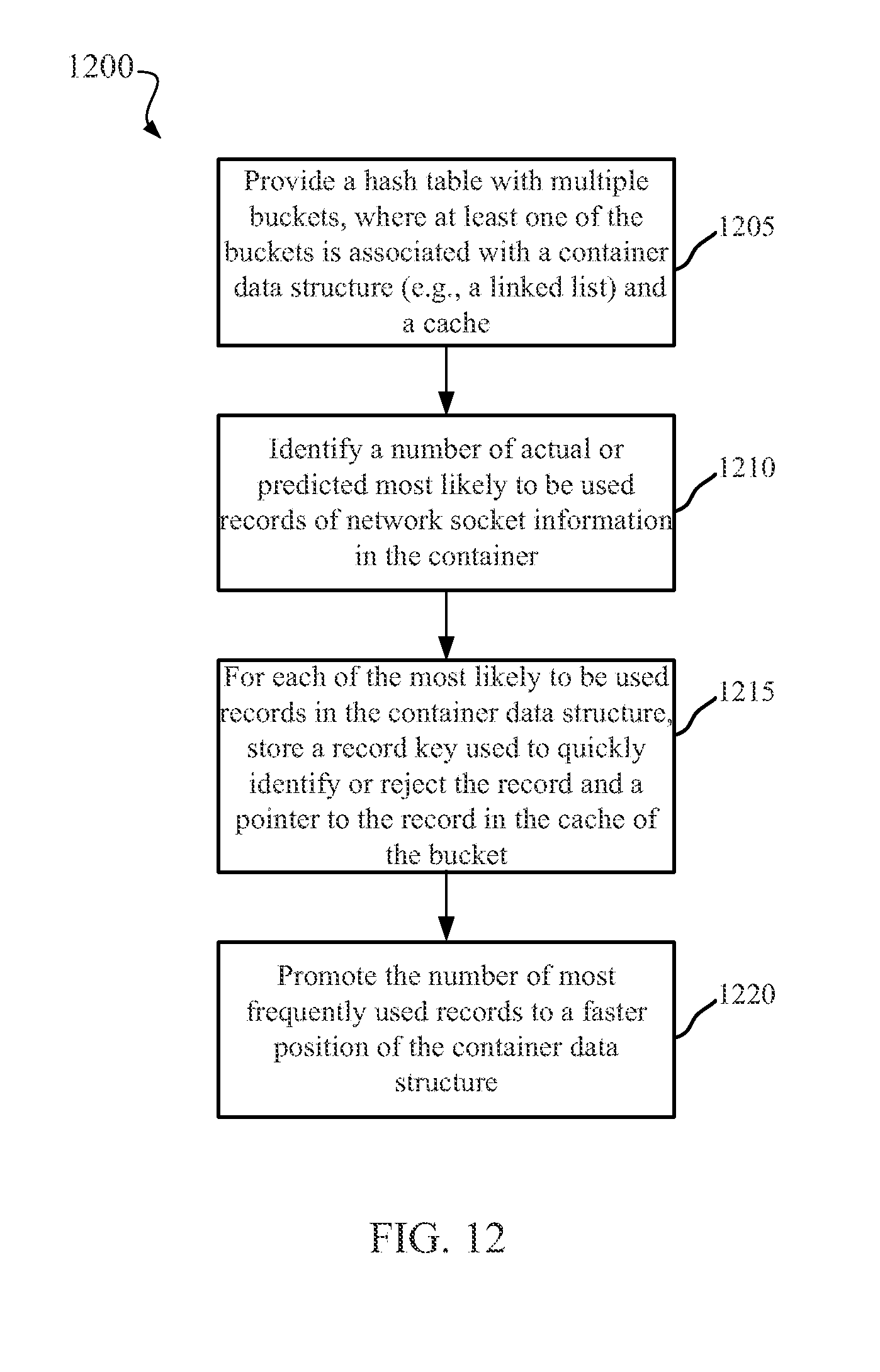

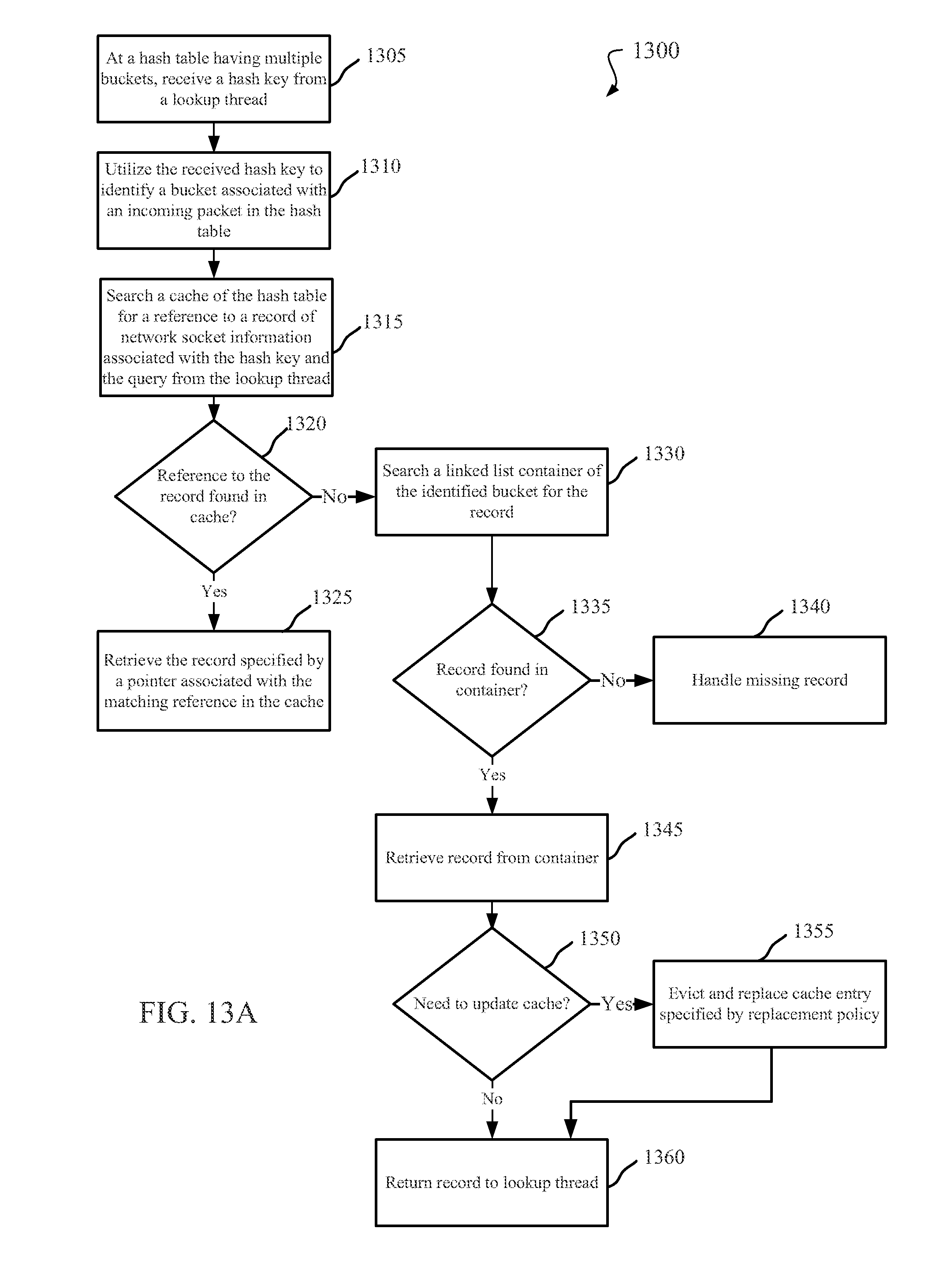

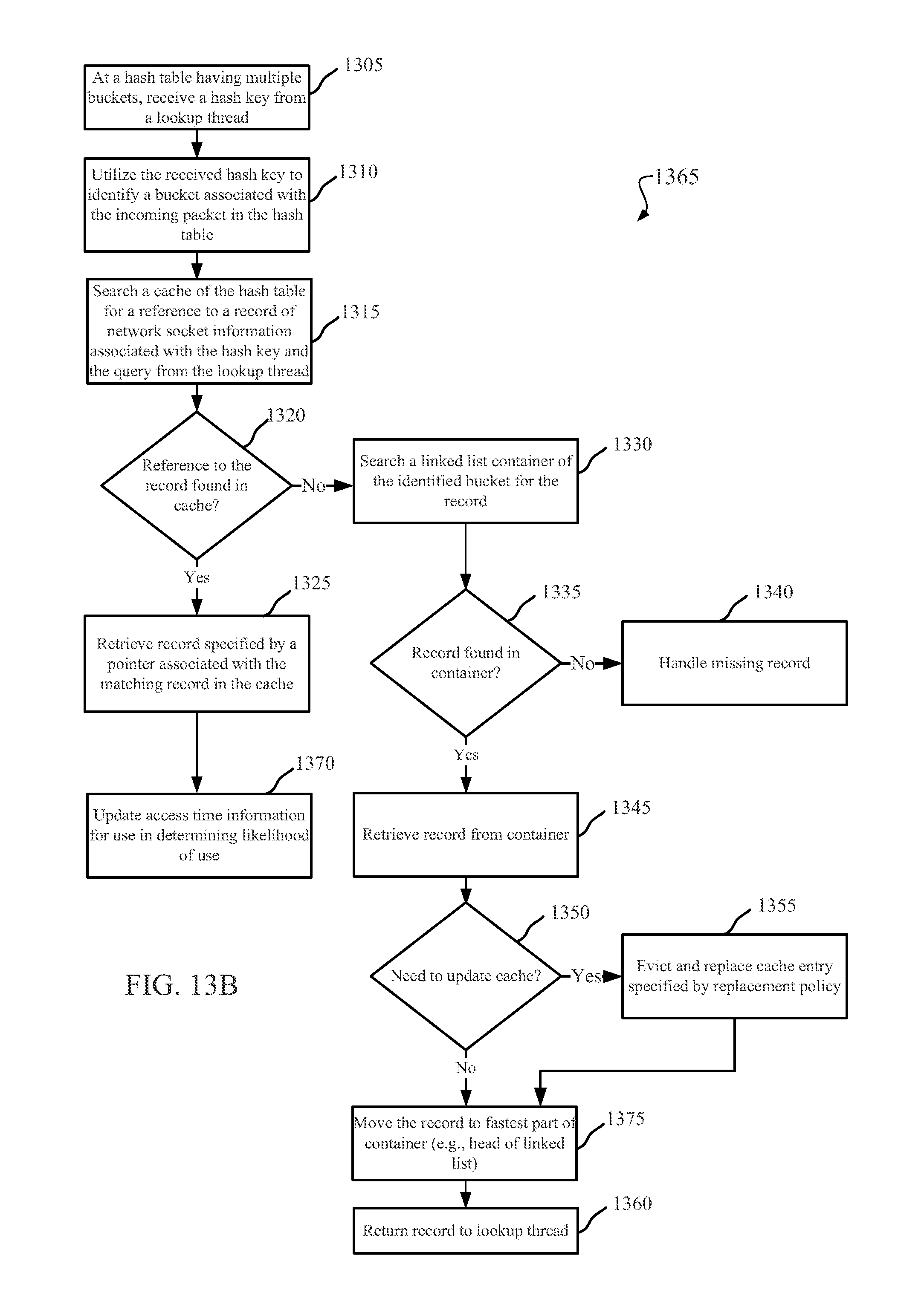

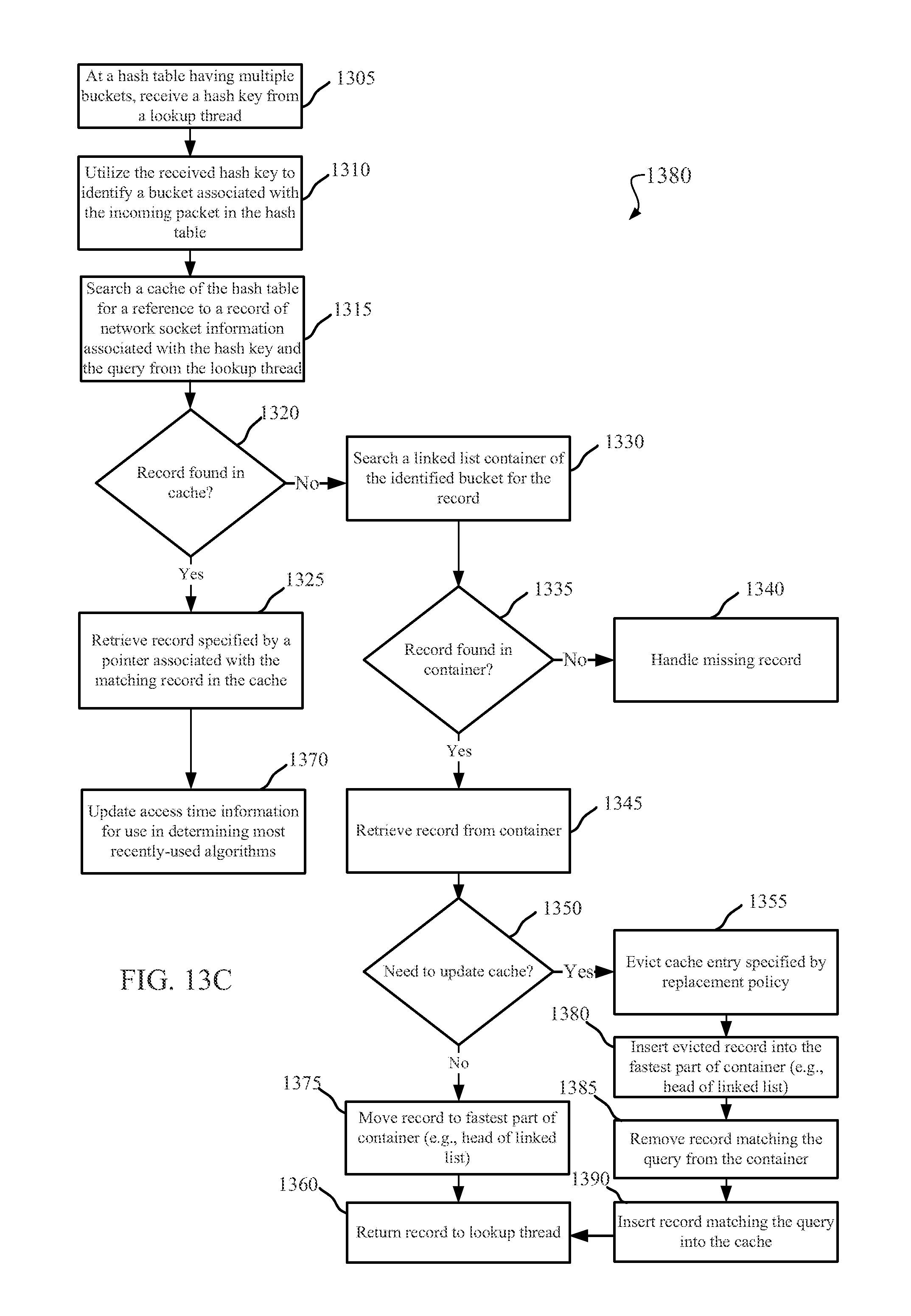

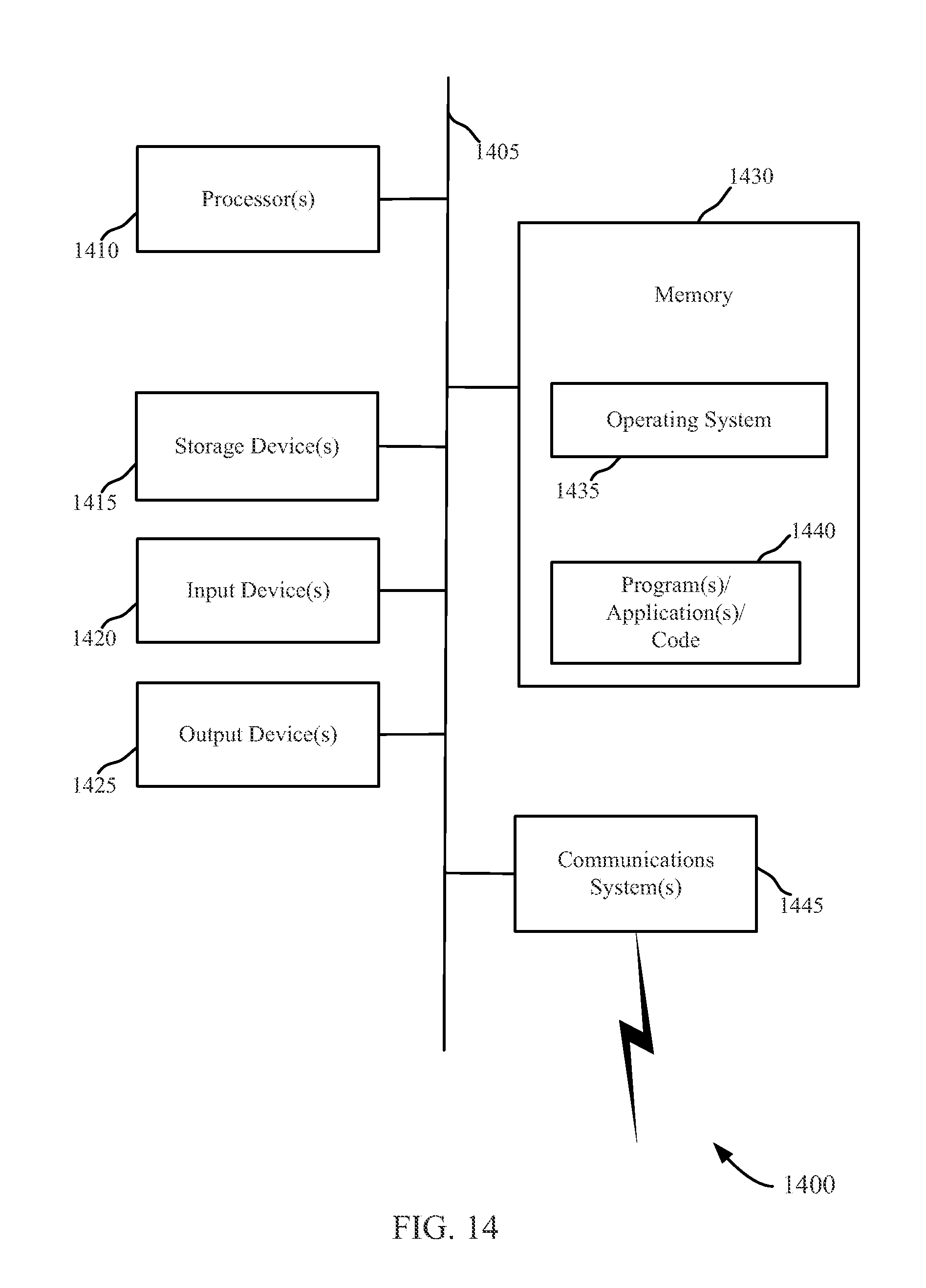

The present application claims priority under 35 U.S.C. § 119 to U.S. Provisional Patent Application Ser. No. 61/587,886, entitled “CACHED HASH TABLE FOR NETWORKING,” which was filed on Jan. 18, 2012, the entirety of which is incorporated by reference herein for all purposes. Aspects of the invention relate to computer networks, and more particularly, providing dynamically configurable high-speed network services for a network of computing devices. Organizations often use multiple computing devices. These computing devices may communicate with each other over a network, such as a local area network or the Internet. In such networks, it may be desirable to provide various types of network services. Examples of such network services include, among others, firewalls, load balancers, storage accelerators, and encryption services. These services may help ensure the integrity of data provided over the network, optimize connection speeds and resource utilization, and generally make the network more reliable and secure. For example, a firewall typically creates a logical barrier to prevent unauthorized traffic from entering or leaving the network, and an encryption service may protect private data from unauthorized recipients. A load balancer may distribute a workload across multiple redundant computers in the network, and a storage accelerator may increase the efficiency of data retrieval and storage. These network services can be complicated to implement, particularly in networks that handle a large amount of network traffic. Often such networks rely on special-purpose hardware appliances to provide network services. However, special-purpose hardware appliances can be costly and difficult to maintain. Moreover, special-purpose hardware appliances may be inflexible with regard to the typical ebb and flow of demand for specific network services. Thus, there may be a need in the art for novel system architectures to address one or more of these issues. Methods, systems, and devices are described for managing network socket information in hash tables. In a first set of embodiments, a method of managing network socket lookups may include allocating a hash table for network socket lookups in a network device, the hash table comprising a plurality of buckets; distributing network socket information for a plurality of open network socket connections among the buckets of the hash table; identifying, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and promoting the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time than a remaining subset of network socket information associated with that bucket. In a second set of embodiments, a network device for managing network socket information may include a memory configured to store a hash table allocated to network socket lookups, the hash table comprising a plurality of buckets; and at least one processor communicatively coupled with the memory. The processor may be configured to: distribute network socket information for a plurality of open network socket connections among the buckets of the hash table; identify, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and promote the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time than a remaining subset of network socket information associated with that bucket. In a third set of embodiments, a computer program product for managing network socket information may include a tangible computer readable storage device having a plurality of computer readable instructions stored thereon. The computer-readable instructions may include computer-readable instructions configured to cause at least one processor to allocate a hash table for network socket lookups in a network device, the hash table comprising a plurality of buckets; computer-readable instructions configured to cause at least one processor to distribute network socket information for a plurality of open network socket connections among the buckets of the hash table; computer-readable instructions configured to cause at least one processor to identify, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and computer-readable instructions configured to cause at least one processor to promote the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time at that bucket than a remaining subset of network socket information associated with that bucket. A further understanding of the nature and advantages of the present invention may be realized by reference to the following drawings. In the appended figures, similar components or features may have the same reference label. Further, various components of the same type may be distinguished by following the reference label by a dash and a second label that distinguishes among the similar components. If only the first reference label is used in the specification, the description is applicable to any one of the similar components having the same first reference label irrespective of the second reference label. Systems, methods, and devices are provided for managing hash table lookups. In certain hash tables, one or more buckets may be associated with a container containing a collection of records containing data. A number of most likely to be used (e.g., based on recency of use, frequency of use, or combinations thereof) records in the container may be identified, and these records may be positioned in the container (e.g. at the head of a linked list) such that the lookup efficiency for the most likely to be used records is greater than that of the remaining records in the container. In certain hash tables, each bucket may include a cache for storing information about a number of identified records in the container associated with the bucket. The cache may store information about the most recently or frequently used nodes in the container, and may include pointers to those nodes in the container. Because cache lookup operations are typically faster than performing lookup operations in a container, lookup operations for the most active nodes in the container may be faster, leading to an overall improvement in hash table efficiency. This description provides examples, and is not intended to limit the scope, applicability or configuration of the invention. Rather, the ensuing description will provide those skilled in the art with an enabling description for implementing embodiments of the invention. Various changes may be made in the function and arrangement of elements. Thus, various embodiments may omit, substitute, or add various procedures or components as appropriate. For instance, it should be appreciated that the methods may be performed in an order different than that described, and that various steps may be added, omitted or combined. Also, aspects and elements described with respect to certain embodiments may be combined in various other embodiments. It should also be appreciated that the following systems, methods, devices, and software may individually or collectively be components of a larger system, wherein other procedures may take precedence over or otherwise modify their application. As used in the present specification and in the appended claims, the term “network socket” or “socket” refers to an endpoint of an inter-process communication flow across a computer network. Network sockets may rely on a transport-layer protocol (e.g., Transmission Control Protocol (TCP), User Datagram Protocol (UDP), etc.) to transport packets of a network layer protocol (e.g., Internet Protocol (IP), etc.) between two applications. Systems, devices, methods, and software are described for providing dynamically configurable network services at high-speeds using commodity hardware. In one set of embodiments, shown in The datacenter 115 may include a router 120, one or more switches 125, a number of servers 130, and a number of data stores 140. For the purposes of the present disclosure, the term “server” may be used to refer to hardware servers and virtual servers. Additionally, the term “switch” may be used to refer to hardware switches, virtual switches implemented by software, and virtual switches implemented at the network interface level. In certain examples, the data stores 140 may include arrays of machine-readable physical data storage. For example, data stores 140 may include one or more arrays of magnetic or solid-state hard drives, such as one or more Redundant Array of Independent Disk (RAID) arrays. The datacenter 115 may be configured to receive and respond to requests from the client devices 105 over the network 110. The network 110 may include a Wide Area Network (WAN), such as the Internet, a Local Area Network (LAN), or any combination of WANs and LANs. Each request from a client device 105 for data from the datacenter 115 may be transmitted as one or more packets directed to a network address (e.g., an Internet Protocol (IP) address) associated with the datacenter 115. Using the network address, the request may be routed over the network 110 to the datacenter 115, where the request may be received by router 120. Each request received by router 120 may be directed over the switches 125 to one of the servers 130 in the server bank for processing. Processing the request may include interpreting and servicing the request. For example, if the request from the client device 105 is for certain data stored in the data stores 140, interpreting the request may include one of the servers 130 identifying the data requested by the client device 105, and servicing the request may include the server 130 formulating an instruction for retrieving the requested data from the data stores 140. This instruction may be directed over one or more of the switches 125 to a data store 140, which may retrieve the requested data. In certain examples, the request may be routed to a specific data store 140 based on the data requested. Additionally or alternatively, the data stores 140 may store data redundantly, and the request may be routed to a specific data store 140 based on a load balancing or other functionality. Once the data store 140 retrieves the requested data, the switches 125 may direct the requested data retrieved by the data store 140 back to one of the servers 130, which may assemble the requested data into one or more packets addressed to the requesting client device 105. The packet(s) may then be directed over the first set of switches 125 to router 120, which transmits the packet(s) to the requesting client device 105 over the network 110. In certain examples, the datacenter 115 may implement the back end of a web site. In these examples, the data stores 140 may store Hypertext Transfer Markup Language (HTML) documents related to various component web pages of the web site, in addition to data (e.g., images, metadata, media files, style sheets, plug-in data, and the like) embedded in or otherwise associated with the web pages. When a user of one of the client devices 105 attempts to visit a web page of the website, the client device 105 may contact a Domain Name Server (DNS) to look up the IP address associated with a domain name of the website. The IP address may be the IP address of the datacenter 115. The client device 105 may then transmit a request for the web page to the datacenter 115 and receive the web page in the aforementioned manner. Datacenters 115 and other network systems may be equipped to handle large quantities of network traffic. To effectively service this traffic, it may be desirable to provide certain network services, such as firewall services, security services, load balancing services, and storage accelerator services. Firewall services provide logical barriers to certain types of unauthorized network traffic according to a set of rules. Security services may implement encryption, decryption, signature, and/or certificate functions to prevent unauthorized entities from viewing network traffic. Load balancing services may distribute incoming network traffic among the servers 130 to maximize the productivity and efficiency. Storage accelerator services distribute requests for data among data stores 140 and cache recently or frequently requested data for prompt retrieval. In some datacenters, these network services may be provided using special purpose hardware appliances. For example, in some datacenters similar in scope to datacenter 115, a special-purpose firewall appliance and a special-purpose security appliance may be placed in-line between the router and the first set of switches. Additionally, a special-purpose load balancing appliance may be placed between the first set of switches and the servers, and a special-purpose storage accelerator appliance may be placed between the second set of switches and the data stores. However, the use of special-purpose hardware appliances for network services may be undesirable for a number of reasons. Some special-purpose hardware appliances may be expensive, and can costing orders of magnitude more than commodity servers. Special purpose hardware appliances may also be difficult to manage, and may be unable to dynamically adapt to changing network environments. Moreover, special-purpose hardware appliances often may be unable to leverage the continuously emerging optimizations for commodity server architectures. The datacenter 115 of Use of commodity servers 130 in the datacenter 115 may allow for elastic scalability of network services. Network services may be dynamically added, removed, or modified in the datacenter 115 by reprogramming one or more of the network services modules 135 in the self-contained network services system 145 with different configurations of special-purpose code according to the changing needs of the datacenter 115. Furthermore, because the network services are provided by programming commodity servers with special-purpose code, some of the servers 130 in the server bank of the datacenter 115 may be allocated to the self-contained network services system 145 and configured to function as virtual network services modules 135. Thus, in certain examples, the number of servers 130 allocated to the self-contained network services system 145 may grow as the datacenter 115 experiences increased demand for network services. Conversely, as demand for network services wanes, the number of servers 130 allocated to the self-contained network services system 145 may shrink to more efficiently use the processing resources of the datacenter 115. The self-contained network services system 145 may be dynamically configurable. In some embodiments, the type and scope of network services provided by the network services system 145 may be modified on-demand by a datacenter administrator or other authorized individual. This reconfiguration may be accomplished by interacting with a network services controller application using a Graphical User Interface (GUI) or Command Line Interface (CLI) over the network (110) or by logging into one of the network services modules 135 locally. The configuration of the network services system 145 may be quite adaptable. As described above, network services applications may be dynamically loaded and removed from individual network services modules 135 to add or remove different types of network services functionality. Beyond the selection of which network services applications to execute, other aspects of the network services system 145 operations may be customized to suit a particular set of network services needs. One such customizable aspect is the computing environment (e.g., dedicated hardware, virtual machine within a hypervisor, virtual machine within an operating system) in which a particular network services application is executed. Other customizable aspects of the network services system 145 may include the number of network services applications executed by each instance of an operating system, the number of virtual machines (if any) implemented by the network services modules 135, the total number of instances of each network services application to be executed concurrently, and the like. In certain examples, one or more of these aspects may be statically defined for the network services system 145. Additionally or alternatively, one or more of these aspects may be dynamically adjusted (e.g., using a rules engine and/or in response to dynamic input from an administrator) in real-time to adapt to changing demand for network services. Each of the servers 130 implementing a network services module 135 may function as a virtual network appliance in the self-contained network services system 145 and interact with other components of the datacenter 115 over the one or more switches 125. For example, one or more network services modules 135 may function as a firewall by receiving all packets arriving at the router 120 over the one or more switches 125, applying one or more packet filtering rules to the incoming packets, and directing approved packets to a handling server 130 over the one or more switches 125. Similarly, one or more network services modules 135 may function as a storage accelerator by receiving data storage commands over the one or more switches 125. Thus, because the network services can be performed directly from the server bank through the use of switches 125 there is no need to physically reconfigure the datacenter 115 when network services are added, modified, or removed. The network services implemented by each network services module 135 are determined by special-purpose applications executed by the network services modules 135. In the present example, network services module 135- Additionally, network services module 135- The processing module 305 may be configured to execute code to execute the one or more network service applications 370 (e.g., applications 205, 210, 215, 220, 225 of In certain examples, the processing module 305 may include a dedicated hardware processor. Additionally or alternatively, the processing module 305 may include a virtual machine implemented by a physical machine through a hypervisor or an operating system. In still other examples, the processing module 305 may include dedicated access to shared physical resources and/or dedicated processor threads. The processing module 305 may be configured to interact with the network service applications 370 to implement one or more network services. The network service applications 370 may include elements of software and/or hardware that enable the processing module 305 to perform the functionality associated with at least one selected network service. In certain examples, the processing module 305 may include an x86 processor and one or more memory modules storing the one or more network service applications 370 executed by the processor to implement the at least one selected network service. In these examples, the network services implemented by the network services module 135- In additional or alternate examples, the processing module 305 may include an FPGA and the network service applications 370 may include code that can be executed by the FPGA to configure logic gates within the FPGA, where the configuration of the logic gates determines the type of network service(s), if any, implemented by the FPGA. In these examples, the network services implemented by the network services module 135- The processor 355 may include a dedicated hardware processor, a virtual machine executed by a hypervisor, a virtual machine executed within an operating system environment, and/or shared access to one or more hardware processors. In certain examples, the processor 355 may include multiple processing cores. The processor 355 may be configured to execute machine-readable code that includes a series of instructions to perform certain tasks. The machine-readable code may be modularized into different programs. In the present example, these programs include a network services operating system 365 and a set of one or more network service applications 370. The operating system 365 may coordinate access to and communication between the physical resources of the network services module 135- The operating system 365 may further coordinate communications for applications 370 executed by the processor 355. For example, the operating system 365 may implement internal application-layer communications, such as communication between two network service applications 370 executed in the same environment, and external application-layer communications, such as communication between a network service applications 370 executed within the operating system 365 and a network service applications 370 executed in a different environment using network protocols. As described in more detail below, in certain examples the operating system 365 may be a custom operating system with optimizations and features that allow the processor 355 to perform network processing services at speeds matching or exceeding that of special-purpose hardware appliances designed to provide equivalent network services. Each network service application 370 executed from main memory 360 by the processor may cause the processor 355 to implement a specific type of network service functionality. As described above, network service applications 370 may exist to implement firewall functionality, load balancing functionality, storage acceleration functionality, security functionality, and/or any other network service that may suit a particular application of the principles of this disclosure. Thus, the network services module 135- The local storage 375 of the network services module 135- The communications module 380 of the network services module 135- As described above, each network services module 135 in a self-contained network services system 145 may be configured to execute one or more instances of a custom operating system with optimizations and features that allow the processor 355 to perform network processing services at speeds matching or exceeding that of special-purpose hardware appliances designed to provide equivalent network services. The operating system 365- The accelerated kernel 405 may support the inter-process communication and system calls of a traditional Unix, Unix-like (e.g., Linux, OS/X), Windows, or other operating system kernel. However, the accelerated kernel 405 may include additional functionality and implementation differences over traditional operating system kernels. For example, the additional functionality and implementation differences may substantially increase the speed and efficiency of access to the network stack, thereby making the performance of real-time network services possible within the operating system 365- The accelerated kernel 405 may dynamically manage network stack resources in the accelerated kernel 405 to ensure efficient and fast access to network data during the performance of network services. For example, the accelerated kernel 405 may optimize parallel processing of network flows by performing load balancing operations across network stack resources. In certain embodiments, the accelerated kernel 405 may dynamically increase or decrease the number of application layer threads or driver/network layer threads accessing the network stack to balance work loads and optimize throughput by minimizing blocking conditions. The network services controller 410 may implement a database that stores configuration data for the accelerated kernel 405 and other modules in the network services operating system 365- The management API may communicate with the network services controller 410 and provide access to the network services controller 410 for the health monitor 430, the HA monitor 435, the command line interface 440, the graphical user interface 445, the HTTPS/REST interface 450, and the SNMP interface 455. The health monitor 430 and the high availability monitor 435 may monitor conditions in the network services operating system 365- In additional or alternative examples, the management API 425 may also receive instructions to dynamically load or remove one or more network services applications 370- The management API 425 may communicate with an administrator or managing process by way of the command line interface 440, the graphical user interface 445, the HTTPS/REST interface 450, or the SNMP interface 455. Additionally, the network services operating system 365- By way of the cluster manager 460, the network services operating system 365- The network services operating system 365- The system libraries 420 may include various libraries specific to a particular operating system class implemented by the network services operating system 365- In the present example, a network stack 515 includes data related to network communications made at the Internet Protocol (IP) level, data related to network communications made at the Transmission Control Protocol (TCP) level (e.g., TCP state information), and data related to TCP sockets. Incoming network flows that arrive at one or more input threads 510 network ports may be added to the network stack 515 and dynamically mapped to one or more application threads 525. The application threads 525 may be mapped to one or more stages of running applications 370. The mapping of incoming network flows to application threads 525 may be done in a way that balances the work load among the various application threads 525. For example, if one of the application threads 525 becomes overloaded, new incoming network flows may not be mapped to that application thread 525 until the load on that application thread is reduced. For example, consider the case where the operating system executes network services applications 370 for a web site and a command is received (e.g., at management API 425 of Additionally, the network stack 515 of the present example may be configured to allow for concurrent access by multiple processor threads 510. In previous solutions, each time a thread accesses a network resource (e.g., TCP state information in the network stack 515), other threads are locked out of accessing that collection of network resource (typically the entire set). As the number of network connections increases, contention for the shared network resource may increase resulting in head of line blocking and thereby effectively serializing network connection processes that are intended to occur in parallel. By including the use of a large hash table with fine-grained locking, the probability of contention for shared network resources approaches zero. Further, by dynamically balancing the processing load between application threads 525, the operating system of the present example may evenly distribute the demand for network stack resources across the total number of threads 510, thereby improving data flow These types of optimizations to the network stack 515 of the present example may be implemented without altering the socket interfaces of the operating system. Thus, where the network operating system is running on a standard general-purpose processor architecture (e.g., the x86 architecture), any network application designed for that architecture may receive the benefits of increased throughput and resource efficiency in this environment without need of altering the network application. As further shown in In one example, application threads 525 may all equally process and handle new incoming network flows. By contrast, in another example, application threads 525- In additional or alternative examples, it may be desirable to increase or decrease the number of application threads 525. Such an increase or decrease may occur dynamically in response to changing demand for network services. For example, an application thread 525 may be added by allocating processing resources to the new application thread 525, associating the new application thread 525 with an appropriate application stage 605, and updating the distribution function 660 such that incoming network flows are distributed to the new application thread 525. Conversely, an application thread 525 may be dynamically removed to free up processing resources for another process by allowing the application thread 525 to finish any pending processing tasks assigned to the application thread, updating the distribution function 660, and reallocating the resources of the application thread 525 somewhere else. This dynamic increase or decrease of application threads 525 may occur without need of rebooting or terminating network services. As further shown in It is worth noting that while an entire system for providing network services using commodity servers has been described as a whole for the sake of context, the present specification is directed to methods, systems, and apparatus that may be used with, but are not tied to the system of Referring next to The network services operating system 365- The hash table 740- As shown in In the present example, each of the buckets 810 may include a pointer 855 associated with the index 850 for that bucket 810. The pointer 855 associated with the index 850 may point to a memory location of a container (e.g., linked list, binary tree, other data structure, etc.) of records 860 associated with the bucket 810. In examples, where more than one record is stored in a bucket 810, the pointer 855 associated with the index 850 may point to a record 860 that is associated with a first node 845 in a linked list. The linked list may include a number of nodes 845, where each node contains at least a record 860 and a pointer 855 to a next node 845 in the linked list. The pointer 855 of the last node 845 in the linked list of a bucket may point to a null value to indicate the end of the linked list. Thus, in the example of In certain examples, much of the data stored in the hash table 740- It can be computationally expensive to search containers in hash table buckets 810 that contain large numbers of records 860. Thus, large containers associated with hash table buckets 810 may result in delays during lookup operations, and increase the probability of delays. In light of these considerations, the hash table 740- As shown in In certain examples, the ordering of the linked list nodes 845 for each bucket 810 may be updated each time a lookup thread accesses the bucket 810 to retrieve data from a node 845. Thus, where likelihood of use is determined or predicted by how recently each node is used, the link list may be updated to position a node 845 at the beginning of the linked list when the node 845 is accessed by a lookup thread. Alternatively, the ordering of the nodes 845 in the linked list for the bucket 810 may be updated periodically, for example, at the expiration of a set time period. It should be noted that while certain principles of the present disclosure are described in Referring next to As shown in In the hash table 740- The cached entries 830 and their associated pointers 855 may increase the speed of some lookup operations in the hash table 740- Iterating through a number of cached entries 830 at each bucket to identify an record 860 applicable to a query of a lookup thread can be computationally less expensive than traversing the linked list to identify the applicable record 860. Consequently, lookup operations performed on records 860 having associated cache entries 830 and pointers 855 in the bucket 810 can be measurably faster than lookup operations performed on other records 860 that are only found by navigating the linked list. Thus, by caching information associated with the most active records 860 in the linked list at a bucket 810, the average lookup time for records 860 associated with that bucket 810 may be reduced. The buckets 810 shown in In the first use case, demonstrated at the first bucket 810- In the second use case, demonstrated at the second bucket 810- In the third use case, demonstrated at the third bucket 810- In the fourth use case, demonstrated at the fourth bucket 810- In certain examples, the cache entries 830, pointers 855 associated with the cache entries 830, and/or the ordering of the records 860 in the linked lists may be dynamically updated as records 860 are accessed by lookup threads. Additionally or alternatively, the cache entries 830, the pointers 855 associated with the cache entries 830, and/or the ordering of the records 860 in the linked lists may be updated periodically at the expiration of a predetermined amount of time. Referring next to At block 1005, a hash table may be allocated for network socket lookups in a network device, the hash table containing multiple buckets. At least one of the buckets may be associated with a container configured to store multiple records, such as a linked list, binary tree, or other container. At block 1010, network socket information for multiple open network socket connections may be distributed among the buckets of the hash table. At block 1015, at least a subset of the network socket information stored at each bucket may be identified as most likely to be used. At block 1020, the identified subset of the most likely to be used network socket information may be promoted to a position with a faster lookup time than a remaining subset of network socket information at that bucket. In certain examples, the network socket information associated with each bucket may be stored in a linked list associated with that bucket. In such examples, the identified subset for at least one of the buckets may be promoted by reordering the linked list of that bucket such that the identified subset of most likely to be used network socket information is stored at an earlier position in the linked list than the remaining subset of network socket information at that bucket. In certain examples, the identified subset of most likely to be used network socket information may be stored in a cache separate from the linked list or other container associated with that bucket. In such examples, the identified subset of most likely to be used network socket information may be removed from the linked list of that bucket in response to the storage of the identified subset of most likely to be used network socket information in the cache. Alternatively, the identified subset of network socket information may reside in both the cache and the linked list. In certain examples, the remaining noncached subset of network socket information stored in the linked list or other container of at least one of the buckets based on likelihood of use. In certain examples, multiple packets may be received at a network device related to multiple different network sockets. Multiple processor threads may concurrently access the network socket information stored in the hash table for each of the different network sockets in parallel. The packet data from the packets may then be passed on to a next layer of packet processing based on the network socket information stored in the hash table. Referring next to At block 1105, a hash key may be received from a lookup thread at a hash table having multiple buckets. At block 1110, the received hash key may be hashed to identify a bucket associated with a network socket connection from a lookup thread. At block 1115, a linked list associated with the identified bucket may be traversed to identify a record of socket information in the linked list associated with the hash key and/or other selection criteria of the lookup thread. At block 1120, the identified record is promoted to the beginning of the linked list associated with the identified bucket. Referring next to At block 1205, a hash table may be provided. The hash table has multiple buckets, and at least one of the buckets may be associated with a container data structure (e.g., a linked list) and a cache. At block 1210, a number of actual or predicted most likely to be used nodes in the container data structure may be identified. At block 1215, for each of the most likely to be used records in the container data structure, a record key and a pointer to the record may be stored in the cache of the bucket. The record key may be used by a lookup thread to quickly identify or reject the record based. At block 1220, the number of most likely to be used records may be positioned at a slower portion of the container data structure (e.g., at the end of the linked list). In one example, the cached most likely to be used records may be removed from the container data structure or positioned at the slower portion of the container data structure. In another example, a number of least likely to be used nodes in the linked list may be identified and positioned at the faster portion of container data structure, and information and pointers for the remaining nodes may be stored in the cache. The remaining nodes may also be removed from the container data structure. In still another example, the number of most likely to be used records may be positioned at one portion of the container data structure, and the cache may store a pointer to the remaining portion of the container data structure to provide access to the uncached records. Referring next to At block 1305, a hash key may be received from a lookup thread at a hash table having multiple buckets. At block 1310, the received hash key may be hashed to identify a bucket associated with an incoming packet in the hash table. The bucket may be associated with a record container data structure (e.g., a linked list) and a cache. At block 1315, the cache may be searched for reference to a record of network socket information associated with the hash key and the query from the lookup thread. If the reference to the record is found in the cache (block 1320, YES), the record specified by a pointer associated with the reference to the record in the cache may be retrieved at block 1325. If reference to the record is not found in the cache (block 1320, NO) the linked list container may be searched for a record associated with the query from the lookup thread. If no matching record is found in the container (block 1335, NO), a process for handling a missing record may be invoked at block 1340. If the record is found in the container (block 1335, YES), the record may be retrieved from the container at block 1345. A determination may be made at block 1350 as to whether the cache needs updating. If so (block 1350, YES), one or more cache entries specified by a replacement policy may be evicted and replaced at block 1355. If no updating is needed (block 1350, NO), the record may be returned to the lookup thread at block 1360. Referring next to At block 1305, a hash key may be received from a lookup thread at a hash table having multiple buckets. At block 1310, the received hash key may be hashed to identify a bucket associated with an incoming packet in the hash table. The bucket may be associated with a record container data structure (e.g., a linked list) and a cache. At block 1315, the cache may be searched for reference to a record of network socket information associated with the hash key and the query from the lookup thread. If the reference to the record is found in the cache (block 1320, YES), the record specified by a pointer associated with the reference to the record in the cache may be retrieved at block 1325, and access time information may be updated for use in determining the likelihood of use for that record, as described above. If reference to the record is not found in the cache (block 1320, NO) the linked list container may be searched for a record associated with the query from the lookup thread. If no matching record is found in the container (block 1335, NO), a process for handling a missing record may be invoked at block 1340. If the record is found in the container (block 1335, YES), the record may be retrieved from the container at block 1345. A determination may be made at block 1350 as to whether the cache needs updating. If so (block 1350, YES), one or more cache entries specified by a replacement policy may be evicted and replaced at block 1355. If no updating is needed (block 1350, NO), or following enforcement of the replacement policy, the record may be moved to the fastest part of the container (e.g., the head of a linked list) at block 1375, and the record may be returned to the lookup thread at block 1360. Referring next to At block 1305, a hash key may be received from a lookup thread at a hash table having multiple buckets. At block 1310, the received hash key may be hashed to identify a bucket associated with an incoming packet in the hash table. The bucket may be associated with a record container data structure (e.g., a linked list) and a cache. At block 1315, the cache may be searched for reference to a record of network socket information associated with the hash key and the query from the lookup thread. If the reference to the record is found in the cache (block 1320, YES), the record specified by a pointer associated with the reference to the record in the cache may be retrieved at block 1325, and access time information may be updated for use in determining the likelihood of use for that record, as described above. If reference to the record is not found in the cache (block 1320, NO) the linked list container may be searched for a record associated with the query from the lookup thread. If no matching record is found in the container (block 1335, NO), a process for handling a missing record may be invoked at block 1340. If the record is found in the container (block 1335, YES), the record may be retrieved from the container at block 1345. A determination may be made at block 1350 as to whether the cache needs updating. If so (block 1350, YES), one or more cache entries specified by a replacement policy may be evicted at block 1355, the evicted record may be inserted into the fastest part of the container (e.g., the head of a linked list) at block 1380, and the record matching the query may be removed from the container at block 1385. The record matching the query may then be inserted into the cache at block 1390, and the record may be returned to the lookup thread at block 1360. If no updating is needed (block 1350, NO), the record may be moved to the fastest part of the container (e.g., the head of a linked list) at block 1375, and the record is returned to the lookup thread at block 1360. A device structure 1400 that may be used for one or more components of server 130 of This drawing broadly illustrates how individual system elements of each of the aforementioned devices may be implemented, whether in a separated or more integrated manner. Thus, any or all of the various components of one of the aforementioned devices may be combined in a single unit or separately maintained and can further be distributed in multiple groupings or physical units or across multiple locations. The example structure shown is made up of hardware elements that are electrically coupled via bus 1405, including processor(s) 1410 (which may further comprise a digital signal processor (DSP) or special-purpose processor), storage device(s) 1415, input device(s) 1420, and output device(s) 1425. The storage device(s) 1415 may be a machine-readable storage media reader connected to any machine-readable storage medium, the combination comprehensively representing remote, local, fixed, or removable storage devices or storage media for temporarily or more permanently containing computer-readable information. The communications system(s) interface 1445 may interface to a wired, wireless, or other type of interfacing connection that permits data to be exchanged with other devices. The communications system(s) interface 1445 may permit data to be exchanged with a network. In certain examples, the communications system(s) interface 1445 may include a switch application-specific integrated circuit (ASIC) for a network switch or router. In additional or alternative examples, the communication systems interface 1445 may include network interface cards and other circuitry or physical media configured to interface with a network. The structure 1400 may also include additional software elements, shown as being currently located within working memory 1430, including an operating system 1435 and other code 1440, such as programs or applications designed to implement methods of the invention. It will be apparent to those skilled in the art that substantial variations may be used in accordance with specific requirements. For example, customized hardware might also be used, or particular elements might be implemented in hardware, software (including portable software, such as applets), or both. It should be noted that the methods, systems and devices discussed above are intended merely to be examples. It must be stressed that various embodiments may omit, substitute, or add various procedures or components as appropriate. For instance, it should be appreciated that, in alternative embodiments, the methods may be performed in an order different from that described, and that various steps may be added, omitted or combined. Also, features described with respect to certain embodiments may be combined in various other embodiments. Different aspects and elements of the embodiments may be combined in a similar manner. Also, it should be emphasized that technology evolves and, thus, many of the elements are exemplary in nature and should not be interpreted to limit the scope of the invention. Specific details are given in the description to provide a thorough understanding of the embodiments. However, it will be understood by one of ordinary skill in the art that the embodiments may be practiced without these specific details. For example, well-known circuits, processes, algorithms, structures, and techniques have been shown without unnecessary detail in order to avoid obscuring the embodiments. Also, it is noted that the embodiments may be described as a process which is depicted as a flow diagram or block diagram. Although each may describe the operations as a sequential process, many of the operations can be performed in parallel or concurrently. In addition, the order of the operations may be rearranged. A process may have additional steps not included in the figure. Moreover, as disclosed herein, the term “memory” or “memory unit” may represent one or more devices for storing data, including read-only memory (ROM), random access memory (RAM), magnetic RAM, core memory, magnetic disk storage mediums, optical storage mediums, flash memory devices or other computer-readable mediums for storing information. The term “computer-readable medium” includes, but is not limited to, portable or fixed storage devices, optical storage devices, wireless channels, a SIM card, other smart cards, and various other mediums capable of storing, containing or carrying instructions or data. Furthermore, embodiments may be implemented by hardware, software, firmware, middleware, microcode, hardware description languages, or any combination thereof When implemented in software, firmware, middleware or microcode, the program code or code segments to perform the necessary tasks may be stored in a computer-readable medium such as a storage medium. Processors may perform the necessary tasks. Having described several embodiments, it will be recognized by those of skill in the art that various modifications, alternative constructions, and equivalents may be used without departing from the spirit of the invention. For example, the above elements may merely be a component of a larger system, wherein other rules may take precedence over or otherwise modify the application of the invention. Also, a number of steps may be undertaken before, during, or after the above elements are considered. Accordingly, the above description should not be taken as limiting the scope of the invention. Systems, methods, and devices are provided for managing hash table lookups. In certain network devices, a hash table having multiple buckets may be allocated for network socket lookups. Network socket information for multiple open network socket connections may be distributed among the buckets of the hash table. For each of the buckets of the hash table, at least a subset of the network socket information that is most likely to be used may be identified, and the identified subset of most likely to be used network socket information may be promoted at each bucket to a position having a faster lookup time than a remaining subset of the network socket information at that bucket. 1. A method of managing network socket lookups, comprising:

allocating a hash table for network socket lookups in a network device, the hash table comprising a plurality of buckets; distributing network socket information for a plurality of open network socket connections among the buckets of the hash table; identifying, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and promoting the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time than a remaining subset of network socket information associated with that bucket. 2. The method of storing at least a portion of the network socket information associated with each bucket in a linked list associated with that bucket. 3. The method of reordering the linked list such that the identified subset of most likely to be used network socket information is stored at an earlier position in the linked list than the remaining subset of network socket information associated with the at least one of the buckets. 4. The method of storing the identified subset of most likely to be used network socket information in a cache separate from the linked list associated with that bucket. 5. The method of removing the identified subset of most likely to be used network socket information from the linked list of the at least one of the buckets in response to storing the identified subset of most likely to be used network socket information in the cache. 6. The method of reordering the subset of remaining network socket information in the linked list of at least one of the buckets based on a likelihood of use of the remaining network socket information. 7. The method of 8. The method of 9. The method of receiving a plurality of packets related to a plurality of different network sockets; and concurrently accessing the network socket information stored in the hash table for each of the different network sockets in parallel using the multiple processor threads. 10. The method of passing packet data from the plurality of packets on to a next layer of packet processing based on the network socket information stored in the hash table. 11. A network device for managing network socket information, comprising:

a memory configured to store a hash table allocated to network socket lookups, the hash table comprising a plurality of buckets; and at least one processor communicatively coupled with the memory, the processor configured to:

distribute network socket information for a plurality of open network socket connections among the buckets of the hash table; identify, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and promote the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time than a remaining subset of network socket information associated with that bucket. 12. The network device of store at least a portion of the network socket information associated with each bucket in a linked list associated with that bucket. 13. The network device of reordering the linked list such that the identified subset of most likely to be used network socket information is stored at an earlier position in the linked list than the remaining subset of network socket information associated with the at least one of the buckets. 14. The network device of storing the identified subset of most likely to be used network socket information in a cache separate from the linked list associated with that bucket. 15. The network device of remove the identified subset of most likely to be used network socket information from the linked list of the at least one of the buckets in response to storing the identified subset of most likely to be used network socket information in the cache. 16. The network device of reorder the subset of remaining network socket information in the linked list of at least one of the buckets based on a likelihood of use of the remaining network socket information. 17. The network device of 18. The network device of 19. The network device of receive a plurality of packets related to a plurality of different network sockets; concurrently access the network socket information stored in the hash table for each of the different network sockets in parallel using the multiple processor threads; and pass packet data from the plurality of packets on to a next layer of packet processing based on the network socket information stored in the hash table. 20. A computer program product for managing network socket information, comprising:

a tangible computer readable storage device comprising a plurality of computer readable instructions stored thereon, the computer-readable instructions comprising: computer-readable instructions configured to cause at least one processor to allocate a hash table for network socket lookups in a network device, the hash table comprising a plurality of buckets; computer-readable instructions configured to cause at least one processor to distribute network socket information for a plurality of open network socket connections among the buckets of the hash table; computer-readable instructions configured to cause at least one processor to identify, for each of the buckets of the hash table, at least a subset of the network socket information associated with that bucket that is most likely to be used; and computer-readable instructions configured to cause at least one processor to promote the identified subset of most likely to be used network socket information at each bucket to a position comprising a faster lookup time at that bucket than a remaining subset of network socket information associated with that bucket.CROSS-REFERENCE

BACKGROUND

SUMMARY

BRIEF DESCRIPTION OF THE DRAWINGS

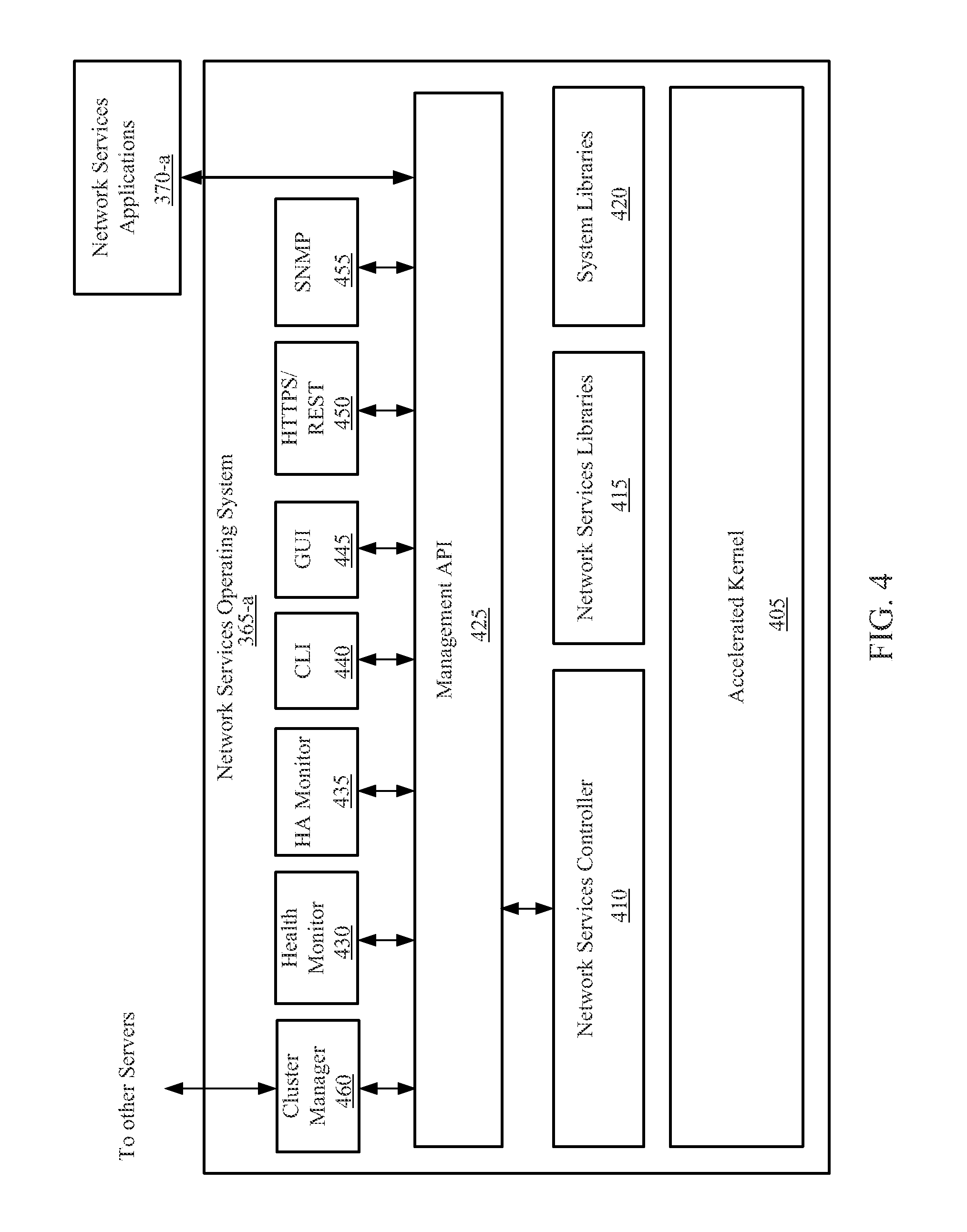

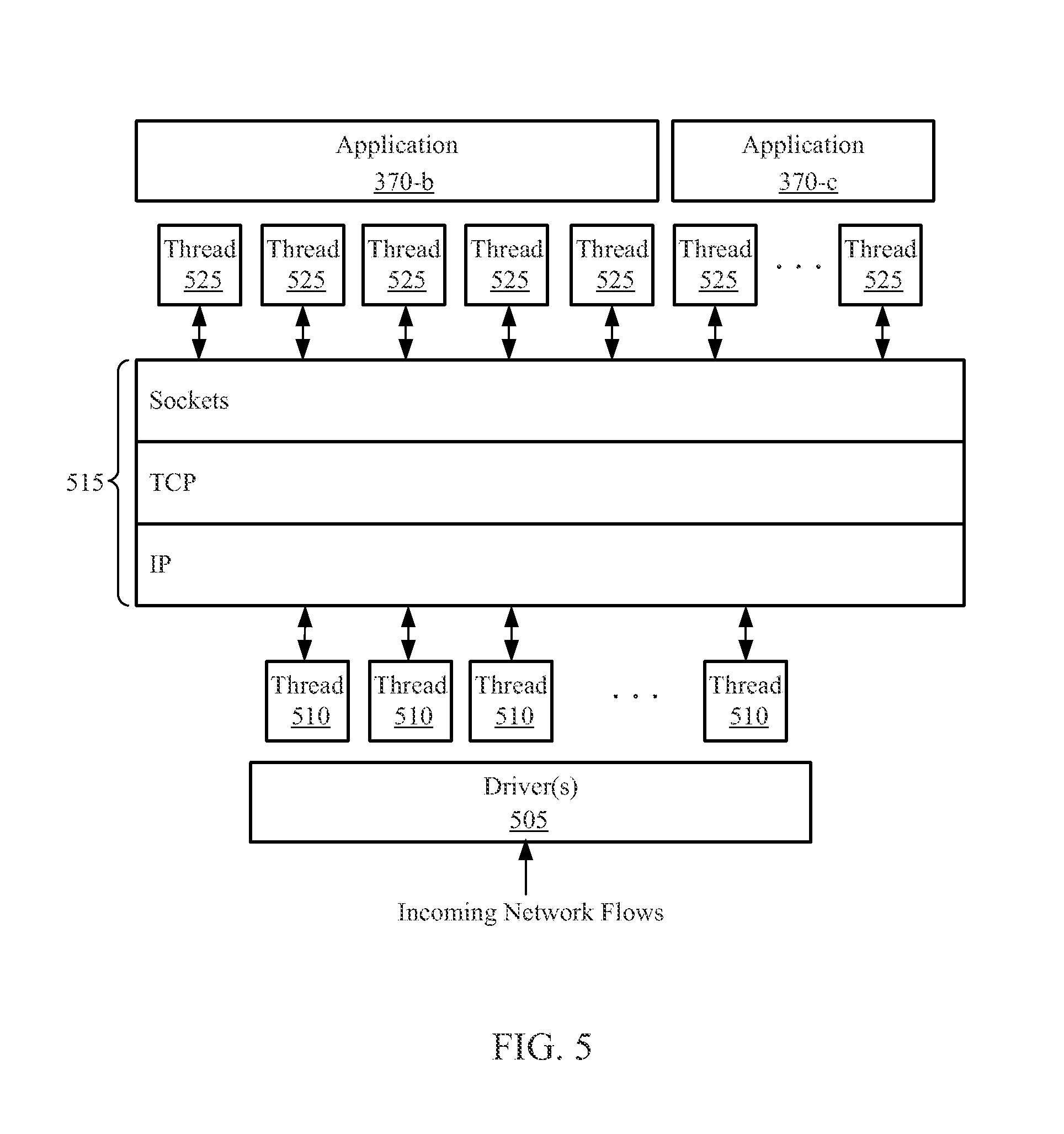

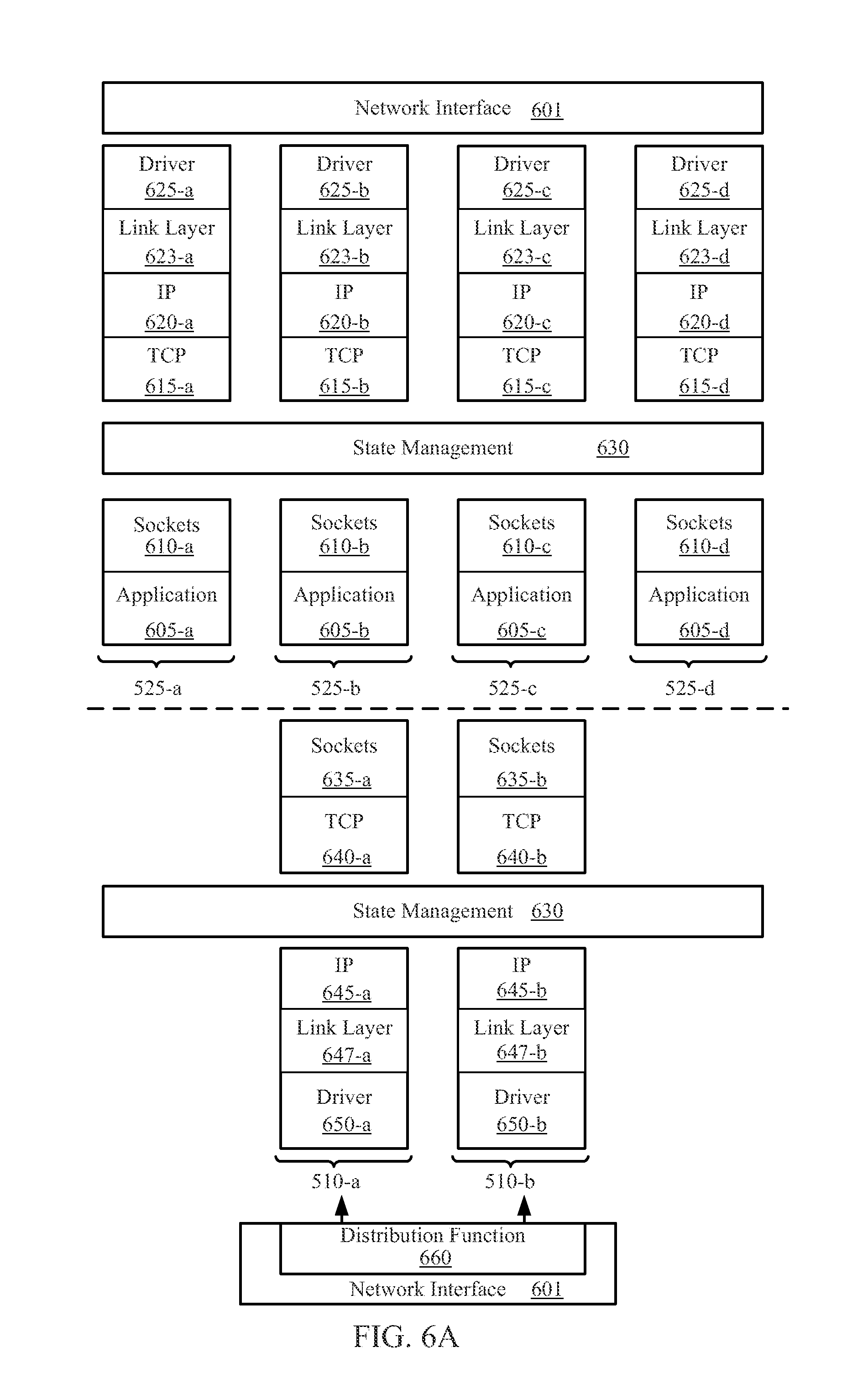

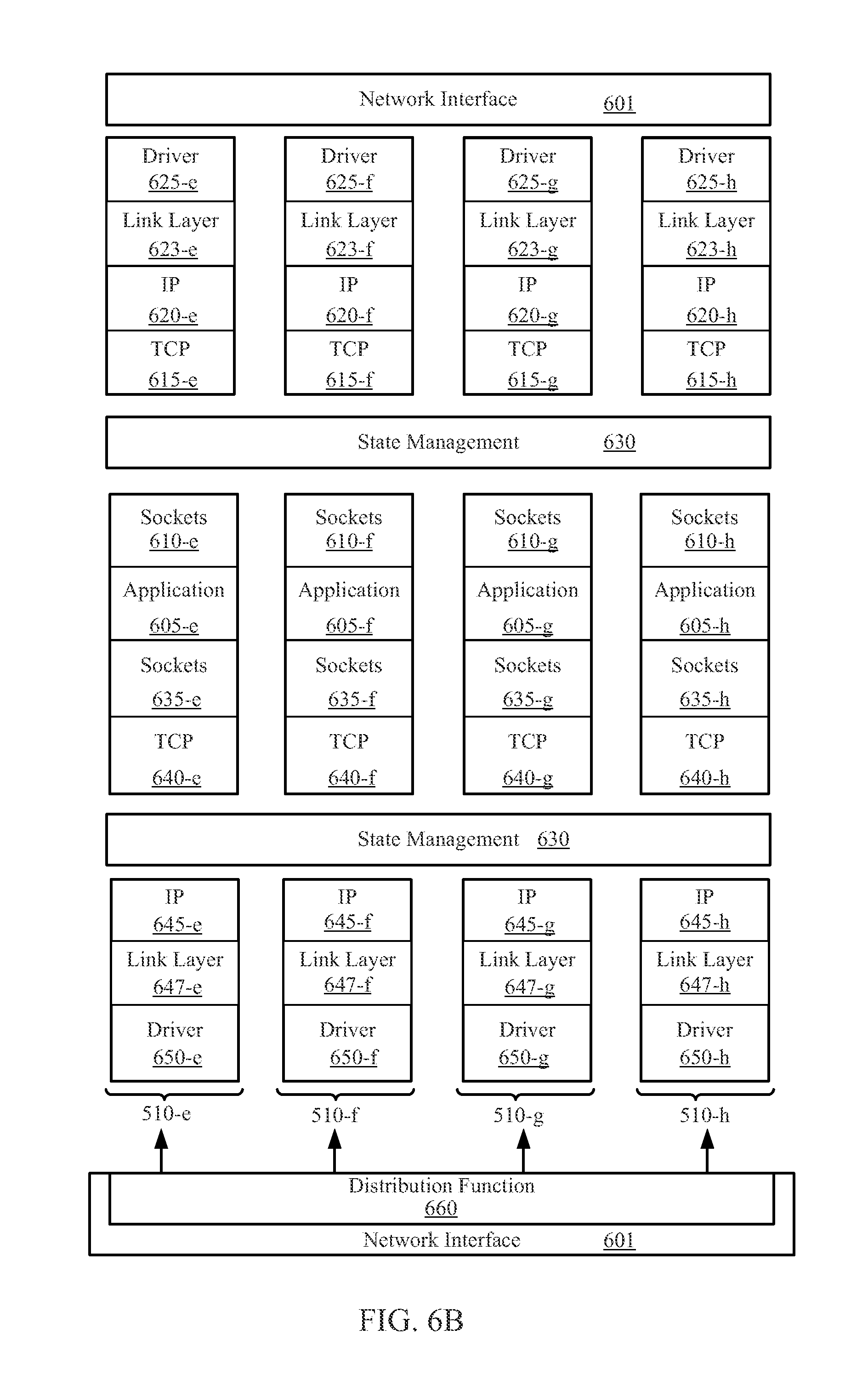

DETAILED DESCRIPTION OF THE INVENTION